/bin/bash A-to-Z

Aug 05, 2022

Looking at many different commands on the bash command line interface.

/bin/bash A through Z 🧵 #bashthread #bash

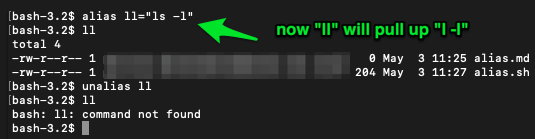

alias … creates a nickname for a command, which makes that command easier to invoke. E.g. instead of typing “ls -l” you can define “ll” to do the same thing. It’s also a good way to make funny inside-joke command names.

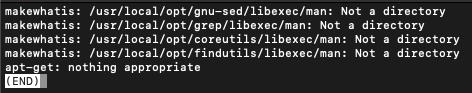
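A minimal sketch of defining and checking an alias. One thing to flag: inside a script, as opposed to an interactive shell, bash only expands aliases after shopt -s expand_aliases, so that line is there only to make the example runnable as a script.

```shell
#!/usr/bin/env bash
# Aliases are only expanded in interactive shells by default;
# this turns expansion on so the example also works as a script.
shopt -s expand_aliases

alias ll='ls -l'   # "ll" is now a nickname for "ls -l"
alias ll           # prints the definition: alias ll='ls -l'
ll /               # long listing of the root directory
```

To keep an alias in every new shell, put the alias line in ~/.bashrc; unalias ll removes it again.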

apropos … searches the manual pages by keyword, matching against each page’s name and short description. It’s equivalent to “man -k”. Here’s a search for apt-get:

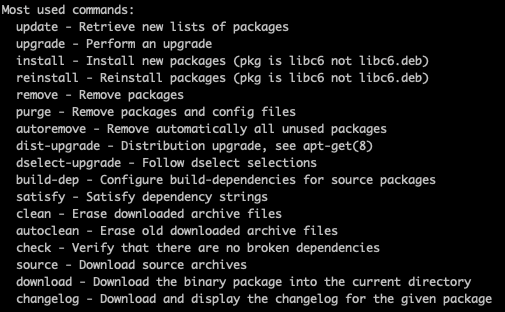

apt-get … it’s a package installation tool, but remember that it’s not just apt-get update/install: there are all sorts of dependency tools, as well as “clean”, which empties the local cache of downloaded package files and is helpful for building smaller Docker images.

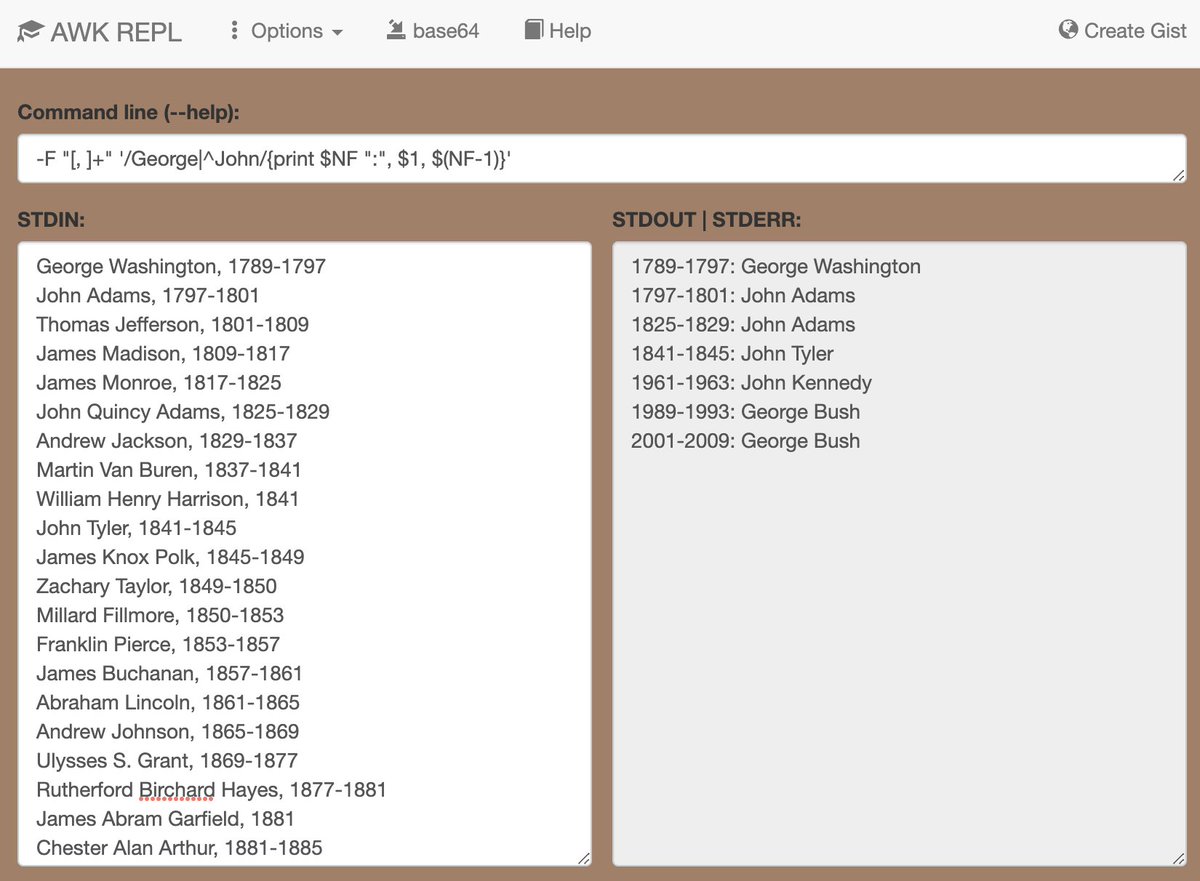

awk … it’s not awkward, it’s an entire language in a tool, built to help munge text from standard input (STDIN) or from files. You run “awk 'PROGRAM' file” and can use https://awk.js.org/ to build out your awk expression before using it in bash.
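A small sketch of awk as a data-munging language, using a hypothetical scores.txt with a name and a number on each line: the first program filters rows, the second sums a column.

```shell
#!/usr/bin/env bash
cd "$(mktemp -d)"
# Hypothetical sample data: name and score, separated by spaces.
printf 'alice 90\nbob 75\ncarol 82\n' > scores.txt

# Filter: print the name ($1) on lines whose score ($2) is above 80.
awk '$2 > 80 { print $1 }' scores.txt                            # alice, carol

# Aggregate: add up column 2, then print once after the last line.
awk '{ total += $2 } END { print "total:", total }' scores.txt   # total: 247

# awk reads STDIN when no file is given, so it works after a pipe too.
cat scores.txt | awk '{ print toupper($1) }'
```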

basename … strips the directory part from a path. It’s kind of like the opposite of pwd: whereas pwd gives you the directory you’re in and cares nothing about filenames, basename /path/to/file.txt spits out file.txt only.
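A quick sketch of basename next to its counterpart dirname, plus the pure-bash parameter expansions that give the same results without starting a new process (the path is only an example).

```shell
#!/usr/bin/env bash
path=/path/to/file.txt

basename "$path"        # file.txt
basename "$path" .txt   # file      (a second argument strips that suffix)
dirname "$path"         # /path/to  (the counterpart: keep the directory part)

# The same results with bash parameter expansion:
echo "${path##*/}"      # file.txt  (remove everything up to the last /)
echo "${path%/*}"       # /path/to  (remove the last / and what follows)
```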

bash … /bin/bash is the path to the bash shell on most Linux distributions; another option is /bin/sh, the POSIX shell, which on some systems (Debian and Ubuntu, for example) is a different, smaller shell called dash. The shell gives you these handy commands and interprets what you type, while the tty (terminal) is the device that handles the text going in and out.

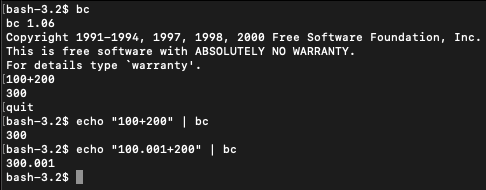

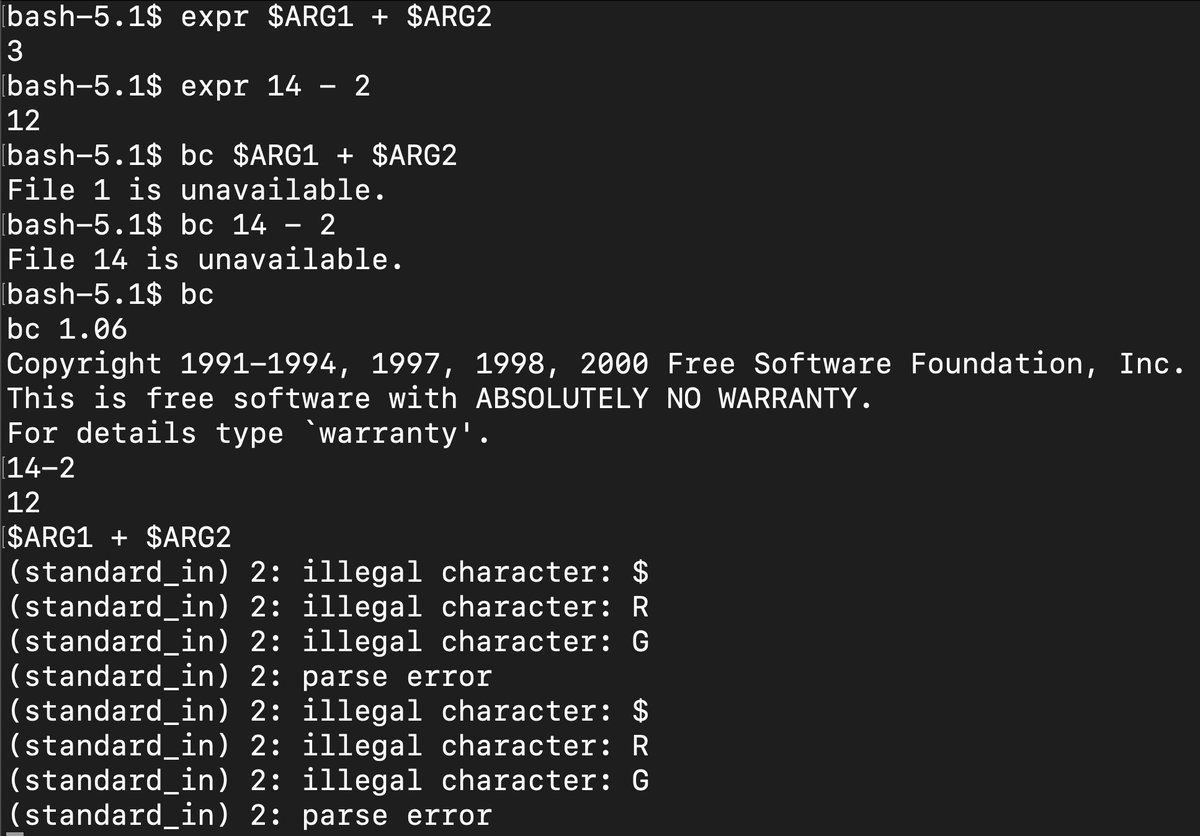

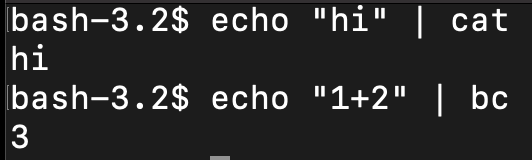

bc … an arbitrary precision calculator. Run it on its own to open an interactive calculator, or pipe a string into bc as shown and it will return the result for you.

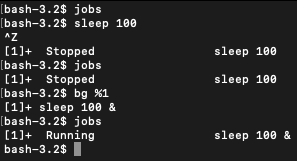

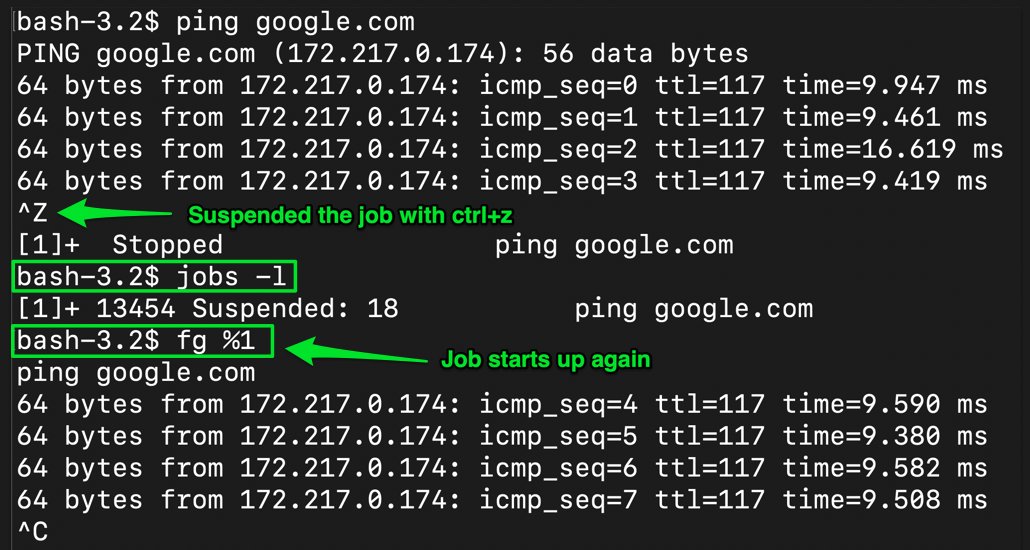

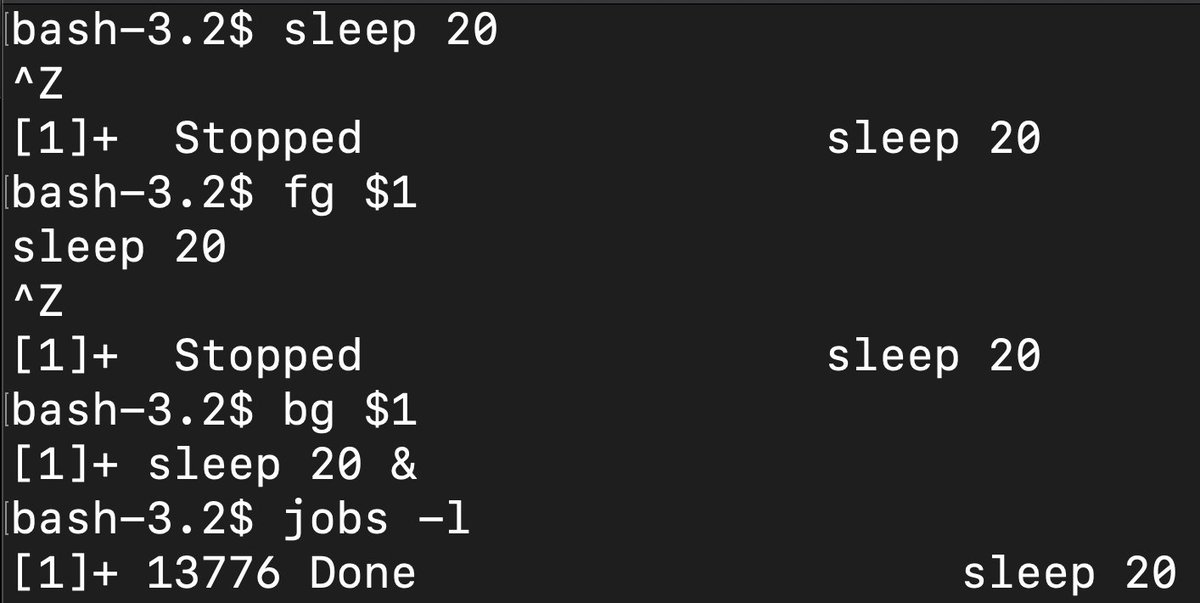

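bg … resumes a suspended job in the background. For example, if you run “sleep 100”, press ctrl+z to suspend it, then type bg, it keeps running in the background while you use the shell. This is different from a daemon: a bg job is started by you and belongs to your shell session, whereas a daemon is a service typically started by the system.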

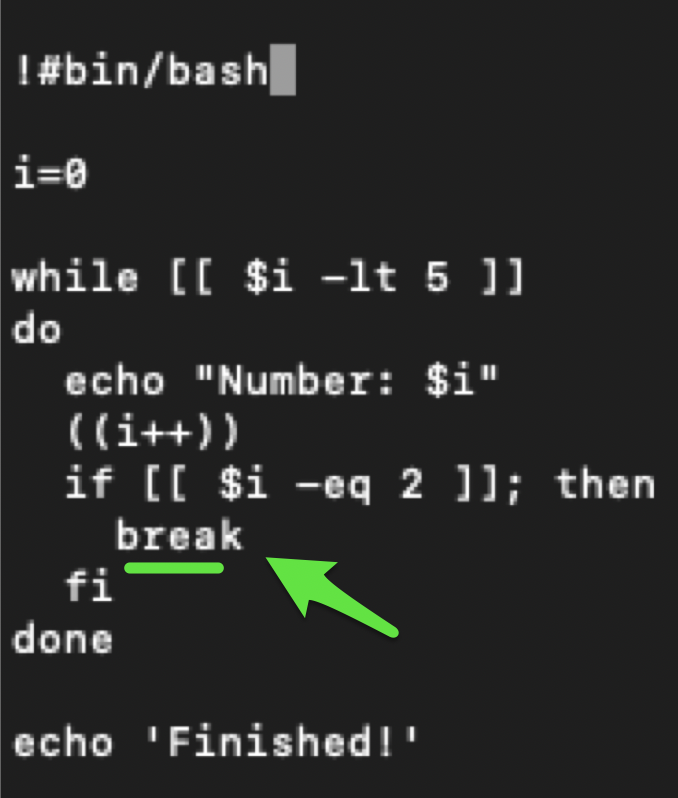

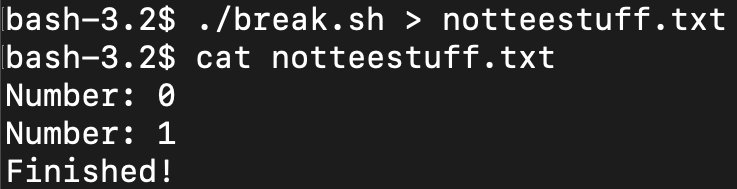

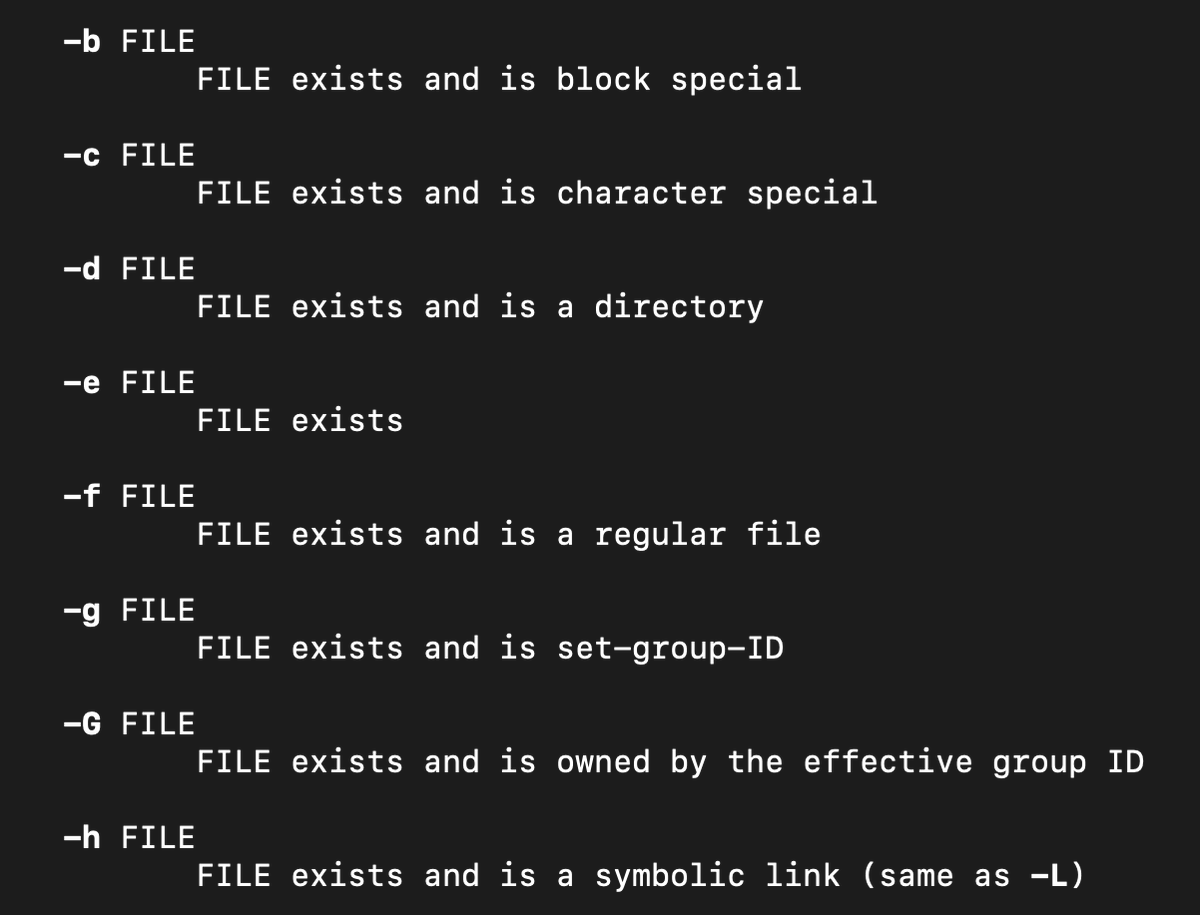

break … There are loops in bash scripting. break jumps out of the loop immediately, skipping whatever iterations were left. In this example we break out of the loop if i equals 2. Here’s a cheat sheet for bash test operators … https://kapeli.com/cheat_sheets/Bash_Test_Operators.docset/Contents/Resources/Documents/index

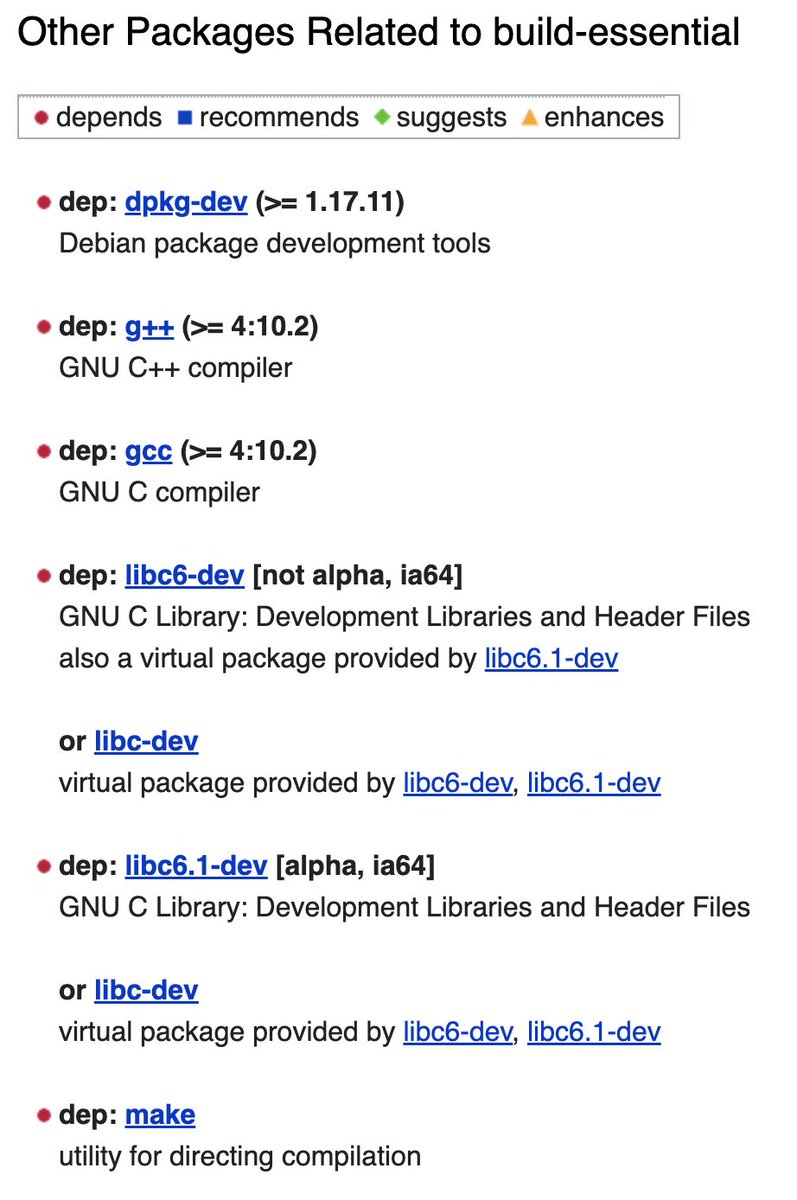
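The break example described above, wrapped in a small function so the result is easy to check; the name print_until_two is only for illustration.

```shell
#!/usr/bin/env bash
print_until_two() {
  for i in 1 2 3 4; do
    if [ "$i" -eq 2 ]; then
      break            # leave the loop entirely; 3 and 4 never run
    fi
    echo "i=$i"
  done
  echo "done"          # execution continues here after break
}

print_until_two        # prints i=1, then done
```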

build-essential … not really a bash tool so much as a meta-package that installs several build tools on Debian/Ubuntu-type systems. https://packages.debian.org/sid/build-essential

- includes dpkg-dev, g++, gcc, libc6-dev and make, all important tools for compiling packages on debian/ubuntu.

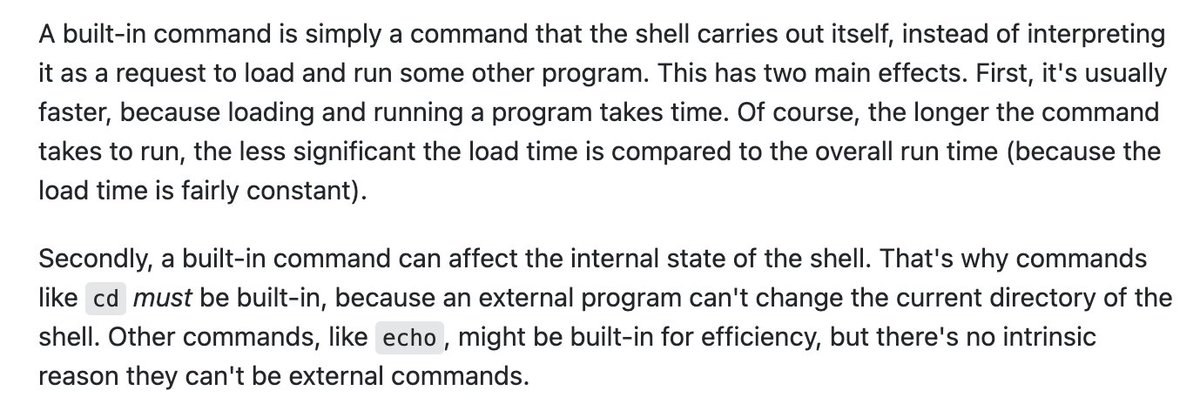

builtin … to understand builtin, you first have to understand what a shell is. The shell is a “shell” around the operating system: a program that reads your commands and runs them. In unix-like systems there can be several shells: bash, zsh, sh, or even the python shell. A builtin is a command implemented inside the shell itself rather than as a separate program on disk. https://unix.stackexchange.com/questions/11454/what-is-the-difference-between-a-builtin-command-and-one-that-is-not

builtin (continued) … which commands are builtins depends upon the shell you are using, so you have to check the documentation, in this case the bash documentation. Here are the bash shell builtins: https://www.computerhope.com/unix/bash/index.htm

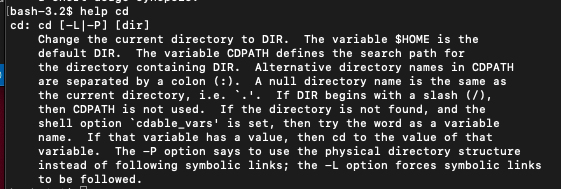

builtin (continued) … builtins don’t have the usual -h help flags, so use “help help” or “help cd”, for example, to get the help text for that command:

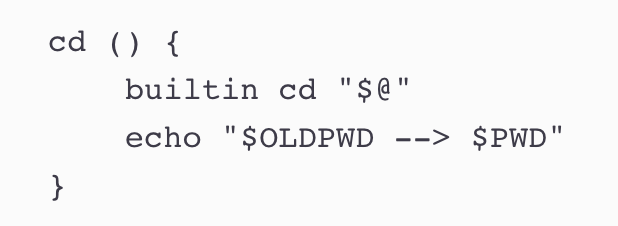

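builtin (continued) … you can define a function with the same name as a builtin and use “builtin” inside it to call the original, as shown. Why do this? Maybe you want a builtin to have a different default behavior for convenience’s sake, such as a cd that always tells you where you landed. (Note that ls is not a builtin but a separate program, so to make “ls” always mean “ls -1”, one file per line, you’d use an alias instead.)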
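A minimal sketch of the override pattern, using cd because it really is a builtin: the function takes over the name cd, and builtin cd inside it reaches the original. The extra message is just an illustrative tweak.

```shell
#!/usr/bin/env bash
cd() {
  # "builtin cd" skips this function and runs bash's own cd.
  builtin cd "$@" || return
  echo "now in: $PWD"
}

cd /tmp      # prints: now in: /tmp
type -t cd   # prints: function  (the wrapper now shadows the builtin)
type -t pwd  # prints: builtin
```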

cal … displays a nifty little calendar.

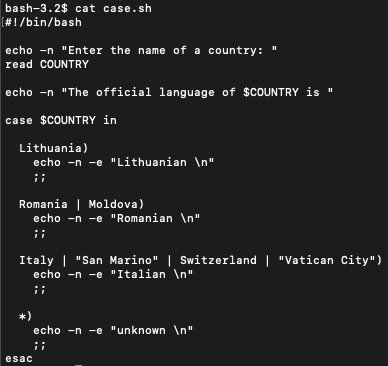

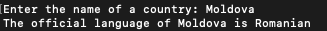

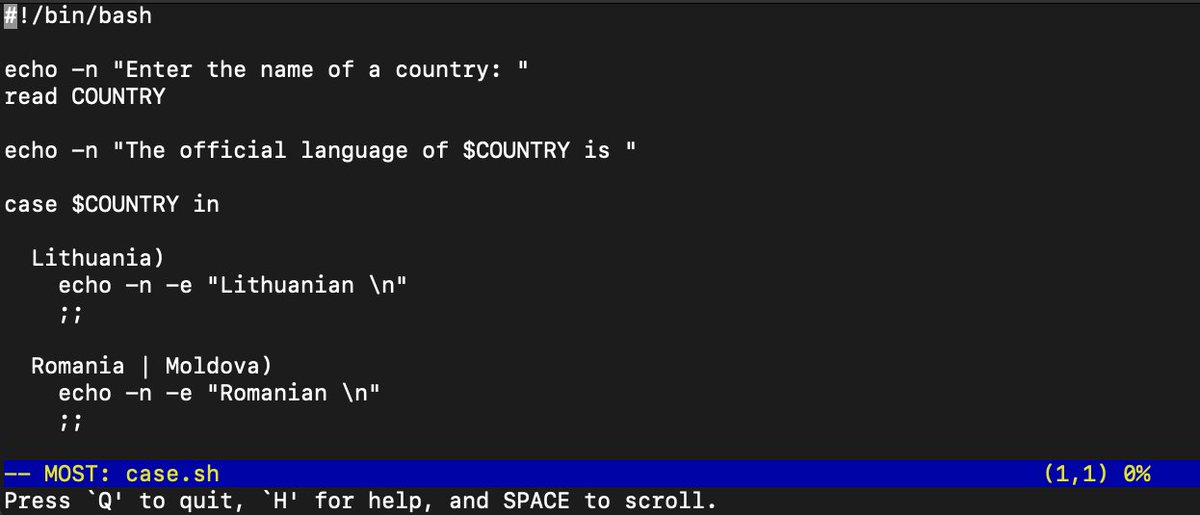

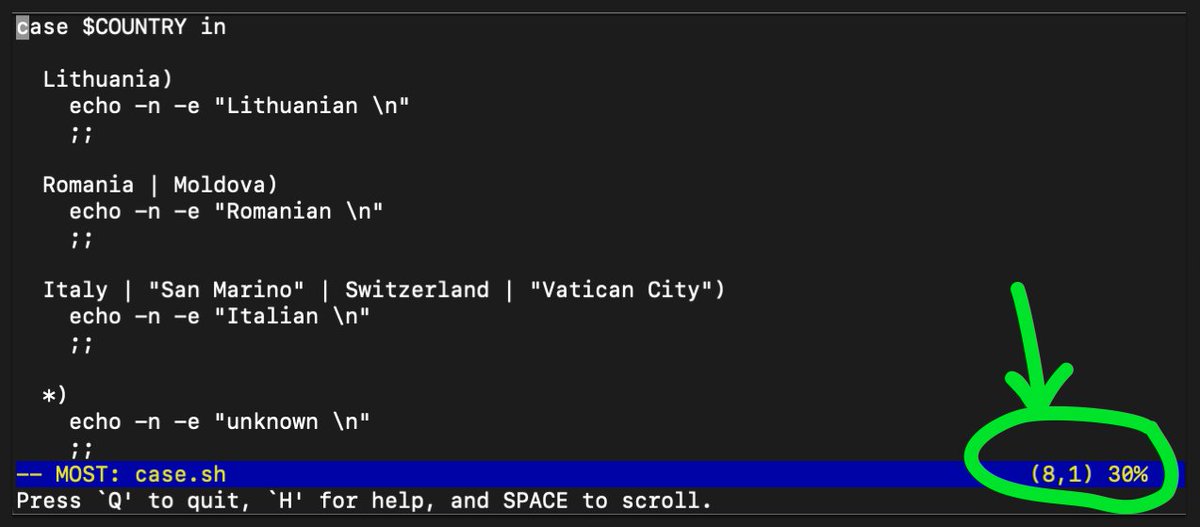

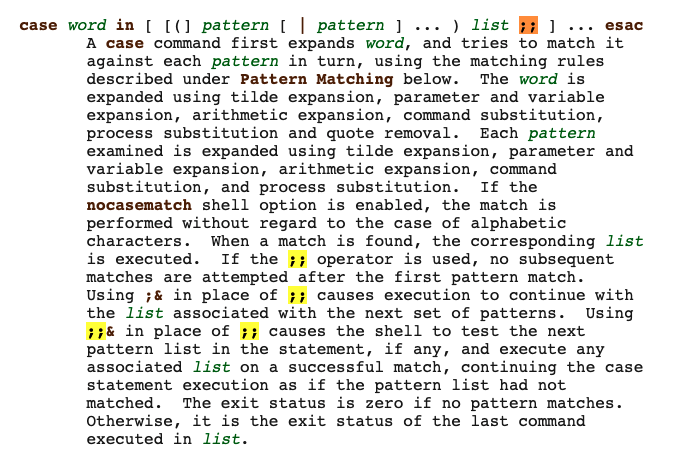

case … is like a chain of if statements: it matches a value against a list of patterns and runs the commands for the first one that matches. You list out the possible cases, each ending with ;;, plus a *) catch-all, and end the case statement with “esac”, case spelled backwards.

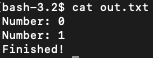

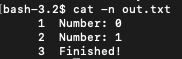

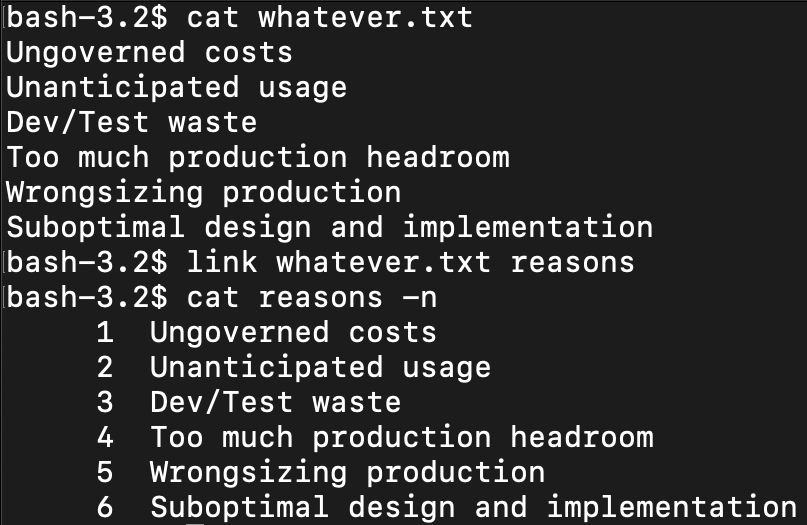

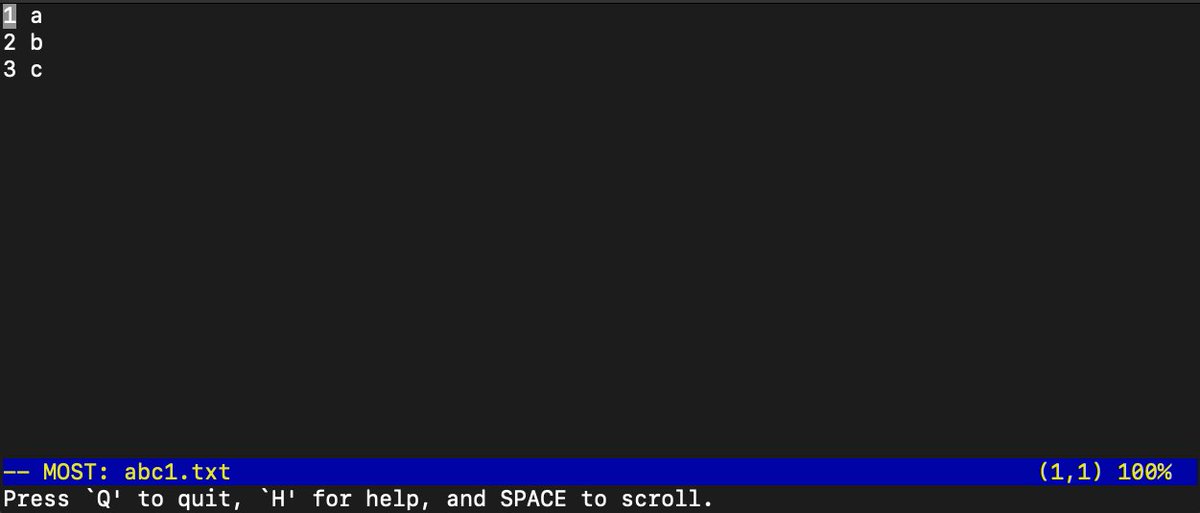

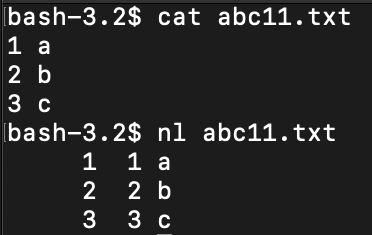

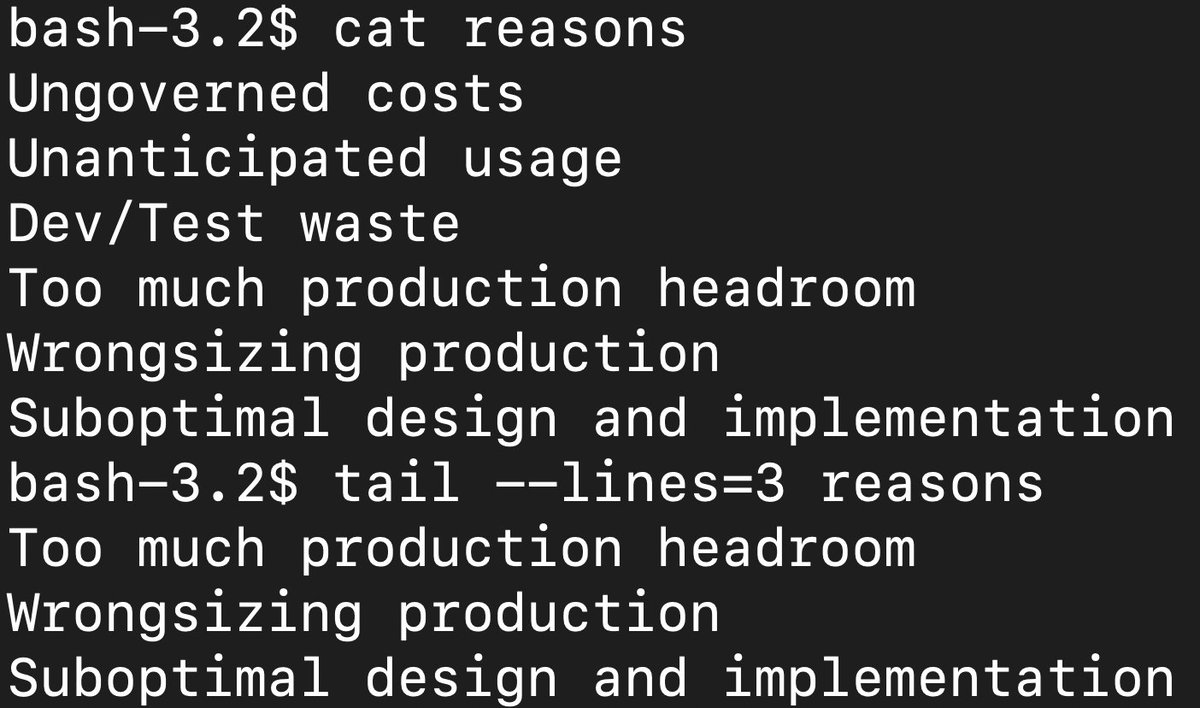
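A small case statement along the lines described above; the file-type labels are made up for the example. Patterns can use globs and | for alternatives, and *) catches everything else.

```shell
#!/usr/bin/env bash
describe_file() {
  case "$1" in
    *.txt)       echo "text file" ;;
    *.jpg|*.png) echo "image" ;;            # | means "either pattern"
    *)           echo "something else" ;;   # catch-all
  esac
}

describe_file notes.txt   # text file
describe_file photo.png   # image
describe_file run.sh      # something else
```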

cat … just prints out the contents of a file; -n shows the line number of each line. So if you wanted to get the number of lines (rows) in a file with awk, you can do: cat -n out.txt | awk 'END { print $1 }'

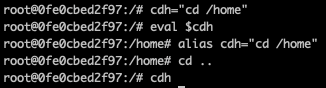
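Here’s that line-count trick on a small throwaway file, alongside two shorter ways to get the same number: awk’s built-in NR (number of records read) and wc -l.

```shell
#!/usr/bin/env bash
cd "$(mktemp -d)"
printf 'first\nsecond\nthird\n' > out.txt

cat -n out.txt                            # each line prefixed with its number
cat -n out.txt | awk 'END { print $1 }'   # 3: the number on the last line
awk 'END { print NR }' out.txt            # 3: awk counts the lines itself
wc -l < out.txt                           # 3: the usual tool for this
```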

cd … changes the directory. You can do cd /path/to/directory to jump in, or cd .. to go up one level and cd ../../ to go up two (add more ../ to go further). Less known are cd -P, which resolves symbolic links (shortcuts) to the real directory, and cd -L, the default, which keeps the symlinked path.

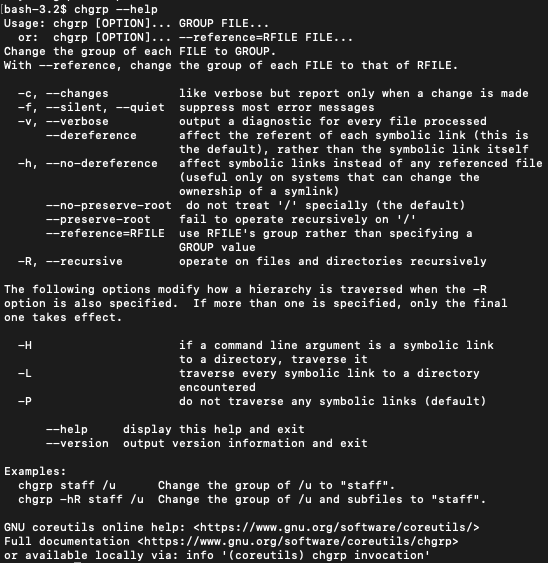
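A sketch of the -L/-P difference using a throwaway directory and a symlink pointing at it (the directory names are made up):

```shell
#!/usr/bin/env bash
base=$(mktemp -d)
mkdir "$base/real_dir"
ln -s "$base/real_dir" "$base/link_dir"   # link_dir is a symlink to real_dir

cd -L "$base/link_dir"; logical=$(basename "$PWD")    # keeps the link name
cd -P "$base/link_dir"; physical=$(basename "$PWD")   # resolves to the real directory
echo "logical: $logical, physical: $physical"         # logical: link_dir, physical: real_dir

cd / && rm -rf "$base"                    # clean up
```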

chgrp … change the group ownership of a file. For example, if users user1 and user2 are in group group1, and you have a file hello.txt or a folder /hello, you can give that group ownership with chgrp group1 hello.txt or chgrp group1 /hello (add -R to include everything inside the folder).

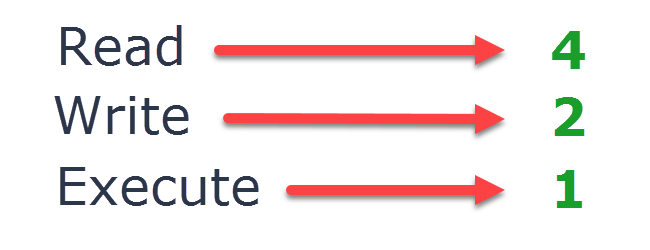

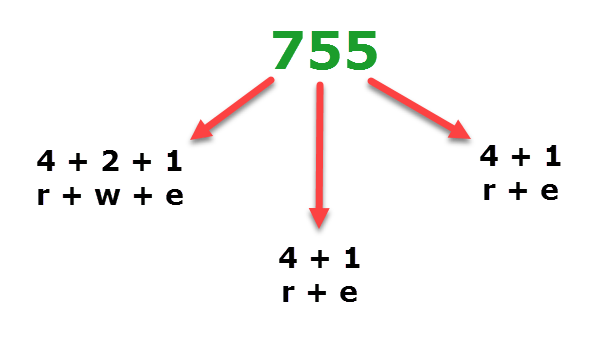

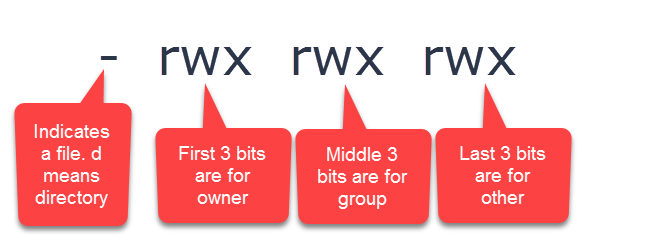

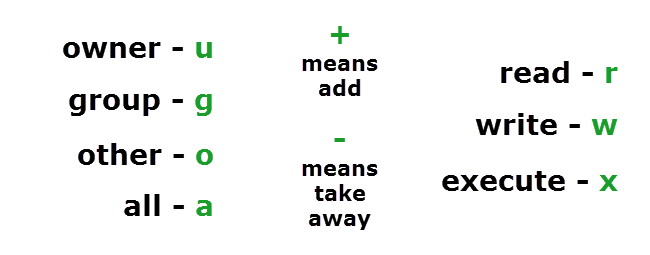

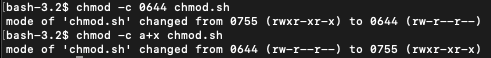

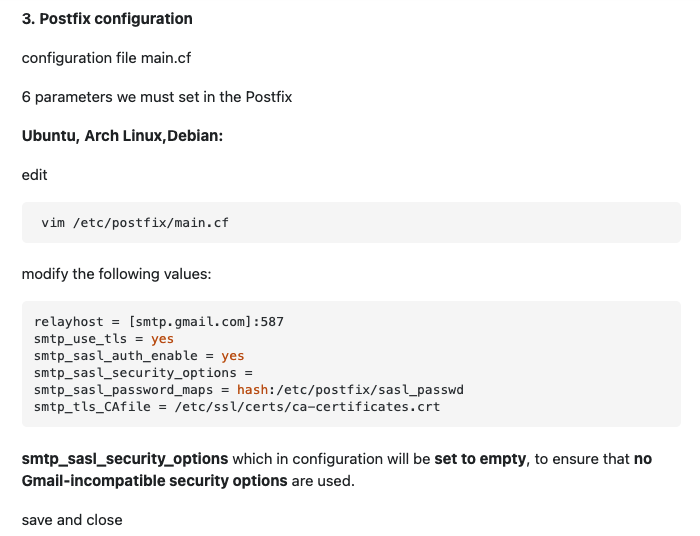

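chmod … changes file permissions. In numeric (octal) form there are three digits, one each for the owner (user), the group, and everyone else (others); each digit is the sum of read (4), write (2), and execute (1), so 7 means rwx and 5 means r-x. There are also letter abbreviations for these, such as u+x or go-w. GNU chmod’s -c flag prints a line for each file whose permissions actually changed.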

chmod (continued)

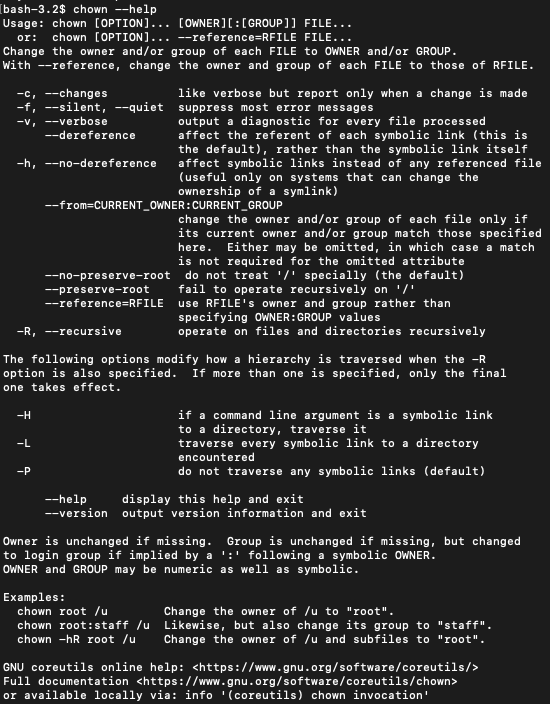
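A worked example of the numeric form on a throwaway file: 754 breaks down as 7 = 4+2+1 (rwx) for the owner, 5 = 4+1 (r-x) for the group, and 4 (r--) for others. The -c flag assumes GNU chmod (BSD/macOS chmod uses -v instead).

```shell
#!/usr/bin/env bash
cd "$(mktemp -d)"
touch script.sh

chmod 754 script.sh              # owner rwx, group r-x, others r--
ls -l script.sh | cut -c1-10     # -rwxr-xr--

chmod -c o+x script.sh           # letter form: add execute for others; -c reports the change
ls -l script.sh | cut -c1-10     # -rwxr-xr-x  (i.e. 755)
```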

chown … changes the owner of a file, and optionally its group too, with chown user:group file. On Linux only root can change a file’s owner. That’s the difference from chgrp: root has the ultimate say over who owns what, but a file’s owner can use chgrp to assign it to any group they belong to. https://www.oreilly.com/library/view/running-linux-third/156592469X/ch04s14.html

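chroot … runs a command with a different directory as its apparent root (/), which can be used to set up an isolated environment, kind of similar to (though much less isolated than) using docker. I haven’t used it much; I prefer just using docker. https://www.howtogeek.com/441534/how-to-use-the-chroot-command-on-linux/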

chkconfig … is the older, SysV-init-era counterpart of systemctl on Red Hat-style systems: it controls which services start on boot. Here’s a translation guide for systemctl. https://bencane.com/2012/01/19/cheat-sheet-systemctl-vs-chkconfig/

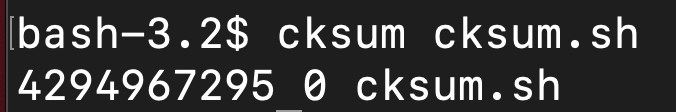

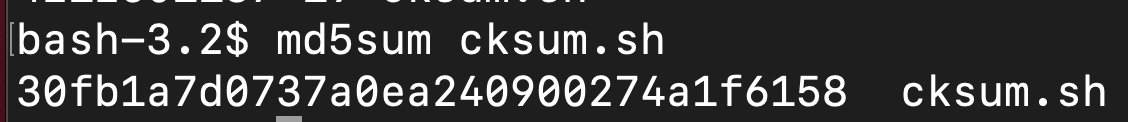

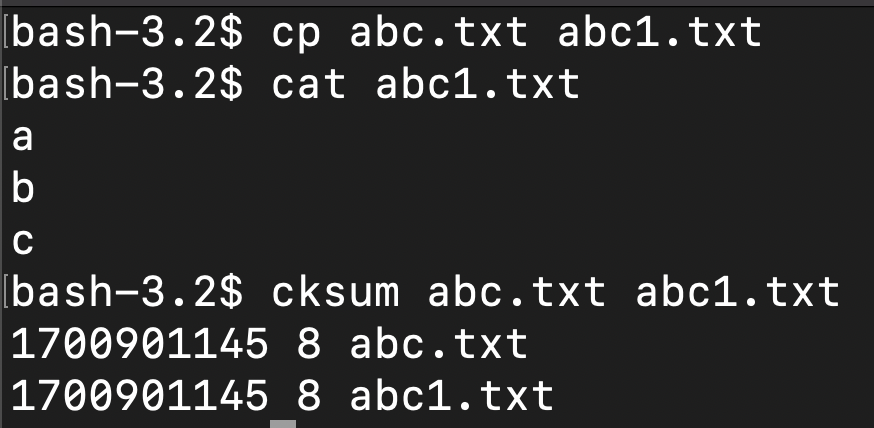

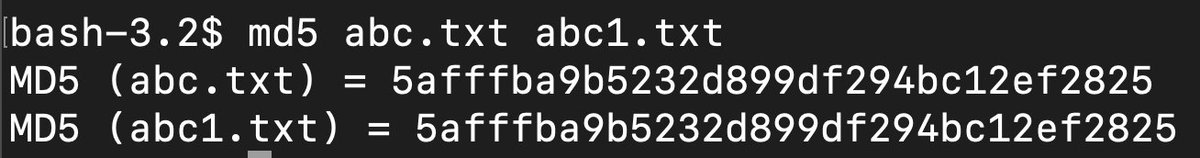

cksum … files can get corrupted or changed. cksum uses an algorithm called cyclic redundancy check (CRC) to spit out a checksum for a file, along with the file size, for consistency checking. Matching checksums strongly suggest identical files, though a CRC isn’t guaranteed to be unique. Another option is md5sum, a cryptographic hash (not encryption) that is much better than a CRC at catching changes. https://en.wikipedia.org/wiki/Cyclic_redundancy_check

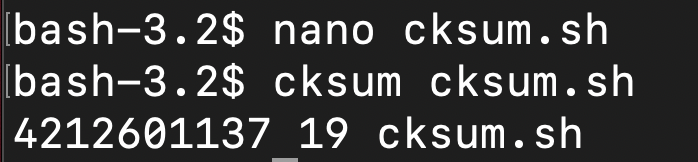

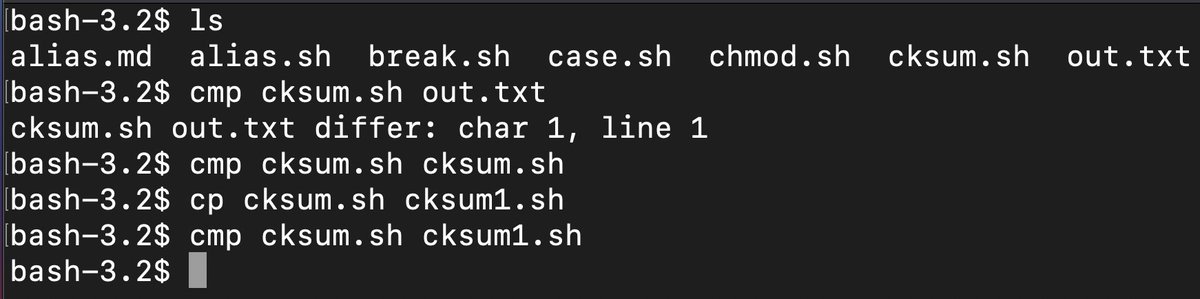

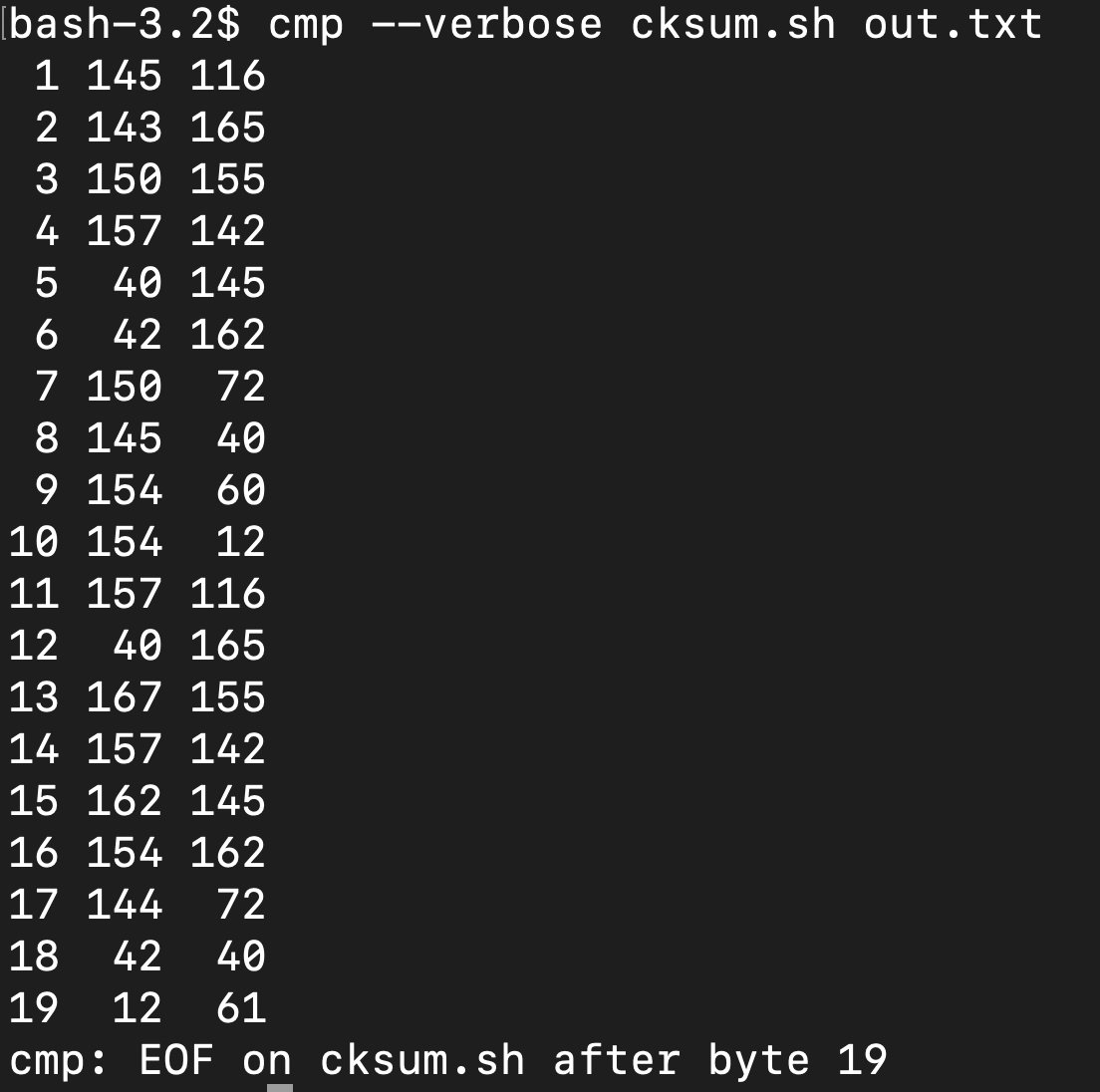
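A sketch of using checksums to confirm a copy and then detect a change, on throwaway files. The md5sum line assumes GNU coreutils; on macOS the command is md5.

```shell
#!/usr/bin/env bash
cd "$(mktemp -d)"
echo "important data" > original.txt
cp original.txt copy.txt

cksum original.txt copy.txt    # same checksum and byte count on both lines
echo "changed" >> copy.txt
cksum original.txt copy.txt    # the copy's checksum (and size) now differ
md5sum original.txt copy.txt   # MD5 hashes of both files
```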

cmp … compares two files byte by byte. If the files are the same it prints nothing (and exits with status 0); otherwise it reports the first difference. The --verbose (-l) option lists every byte that differs.

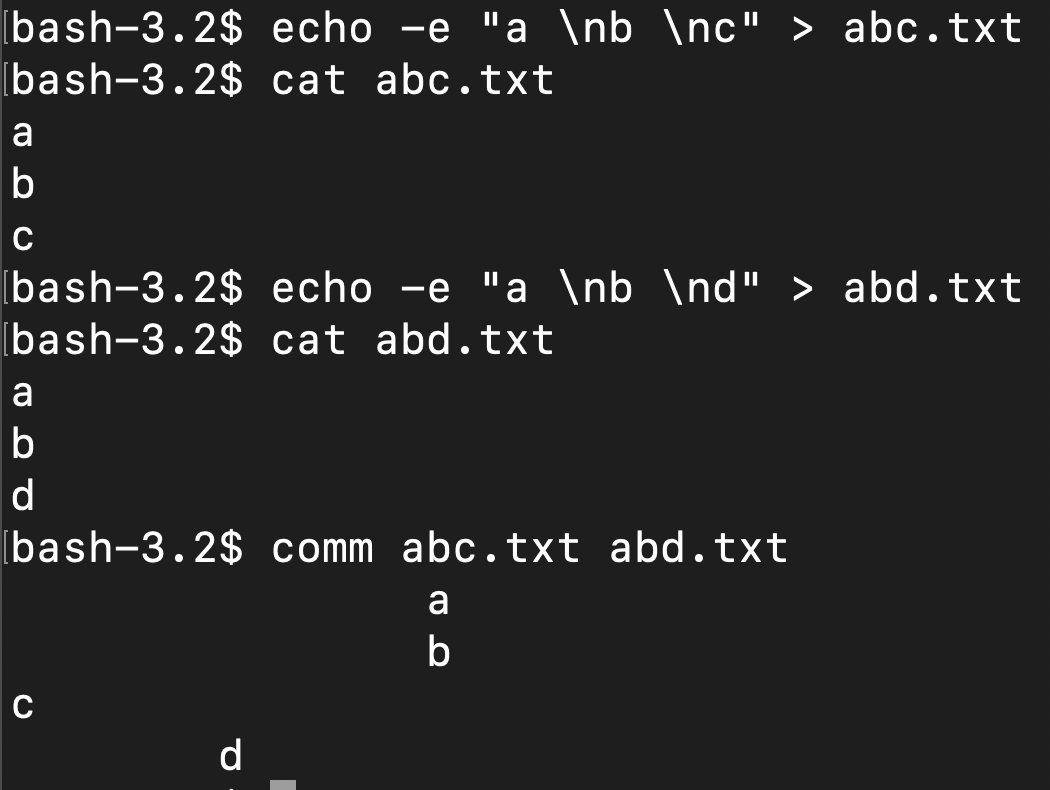

comm … compare files line by line. “With no options, produce three-column output. Column one contains lines unique to FILE1, column two contains lines unique to FILE2, and column three contains lines common to both files.” Both input files should be sorted first.

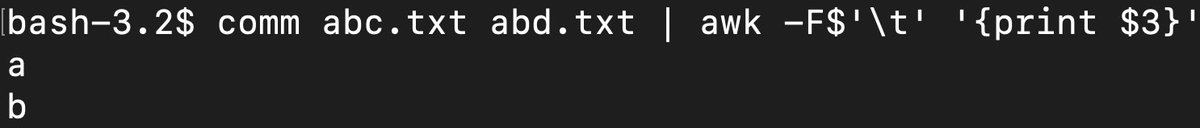

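comm (continued) … if you just want the lines the two files have in common, the simplest way is comm -12 abc.txt abd.txt, which suppresses columns one and two. (Piping into awk -F$'\t' '{print $3}' also works, but it prints a blank line for every line that isn’t shared.)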
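A sketch with two small sorted files, reusing the abc.txt/abd.txt names from above with made-up contents; the -1, -2, -3 flags each hide one column.

```shell
#!/usr/bin/env bash
cd "$(mktemp -d)"
printf 'apple\nbanana\ncherry\n' > abc.txt   # comm expects sorted input
printf 'apple\nbanana\ndate\n'   > abd.txt

comm abc.txt abd.txt       # three tab-separated columns
comm -12 abc.txt abd.txt   # only lines in both files: apple, banana
comm -3 abc.txt abd.txt    # only lines unique to one file: cherry, date
```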

command … to understand command you have to understand $PATH and .bashrc. .bashrc is a startup file where you define things like aliases and functions, and those (along with builtins) are what bash checks first when you type a name, before it goes looking for a program on disk.

command (continued) … $PATH is a list of directories, separated by :, which bash looks into one after another, looking for a matching program to run, such as https://t.co/QLBgnFohPH.

So if https://t.co/QLBgnFohPH isn’t already defined as an alias or function (e.g. in your .bashrc), bash goes to $PATH.

command (continued) … what “command” does is tell bash to skip aliases and functions and run the builtin or the program found in $PATH, so it’s kind of like going straight to your system default, although $PATH itself can be customized.

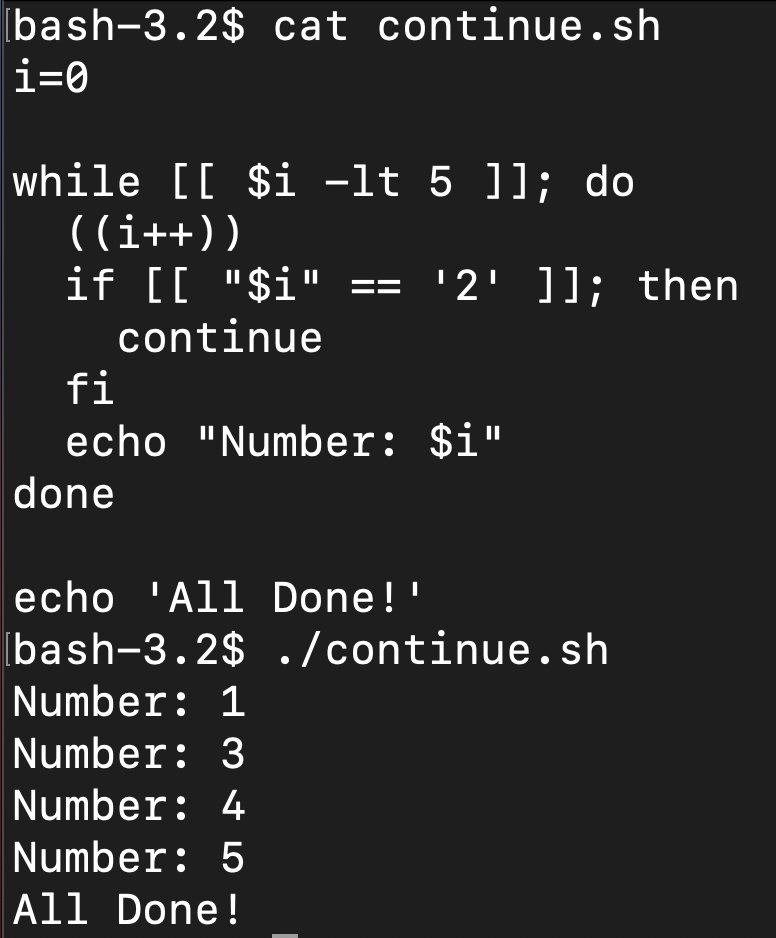

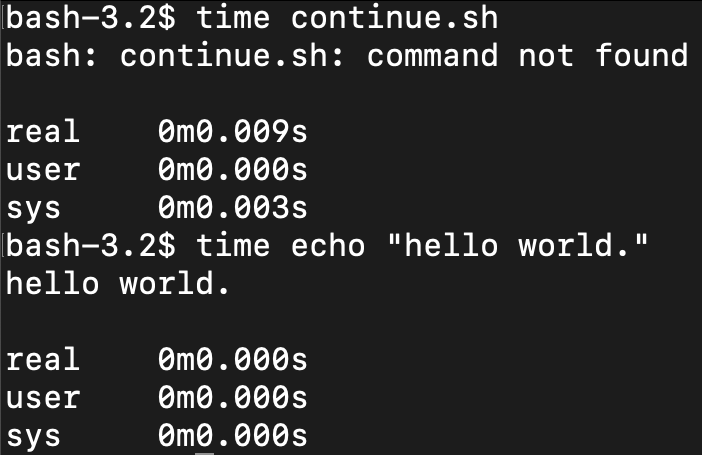
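A sketch of command skipping a shell function. The ls function here is purely hypothetical, standing in for something you might have defined in .bashrc:

```shell
#!/usr/bin/env bash
ls() { echo "this is my ls function"; }   # shadows the real ls

ls                # runs the function: this is my ls function
command ls -d /   # skips the function and runs ls from $PATH: prints /
type -a ls        # lists both: the function, then the file found in $PATH
```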

continue … related to break, except that instead of jumping out of the loop completely, it skips the rest of the current iteration and moves on to the next one. Here it’s used to skip i=2 only:
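The same loop as the break example, with continue instead; skip_two is a made-up function name.

```shell
#!/usr/bin/env bash
skip_two() {
  for i in 1 2 3 4; do
    if [ "$i" -eq 2 ]; then
      continue         # skip the rest of this iteration only
    fi
    echo "i=$i"
  done
}

skip_two               # prints i=1, i=3, i=4
```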

cp … copies files, and whole directories with -r. Remember we can use cksum to verify that a copy is an exact copy; md5sum works for this as well.

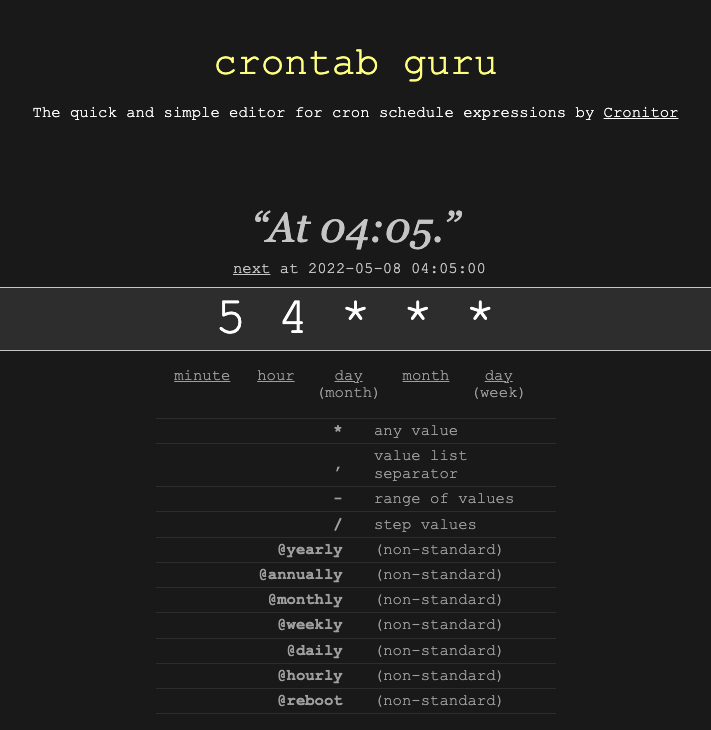

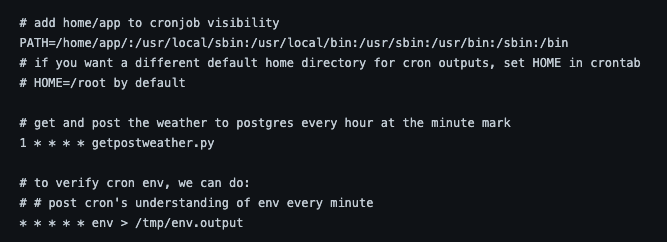

cron … is a way of scheduling scripts to run on a regular basis: every minute, hourly, daily, yearly, whatever. You can build and check cron expressions using https://crontab.guru/

crond is the daemon that runs in the background and launches each script on the schedule listed in the crontab (next).

crontab … is a file with all of the cron jobs that need to run; each line is a cron expression followed by a command or script. One catch is that cron jobs don’t get your normal $PATH (cron uses a minimal default), so you have to define PATH within the crontab or use full paths to commands.

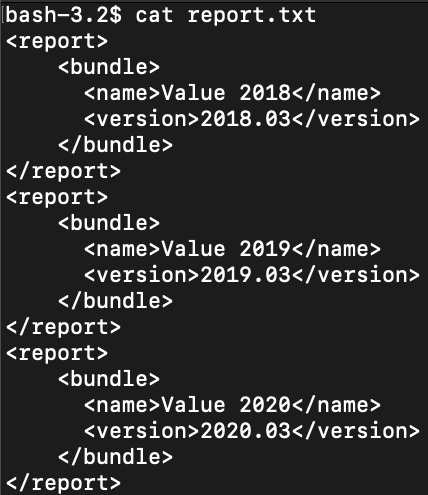

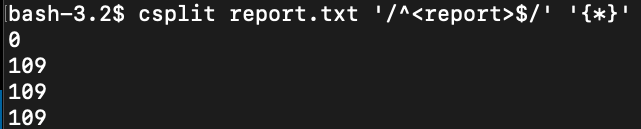

csplit … splits a file into pieces wherever a pattern matches, e.g. if you have a file separated by a pattern such as:

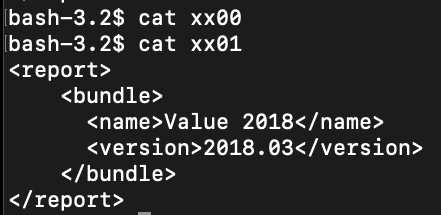

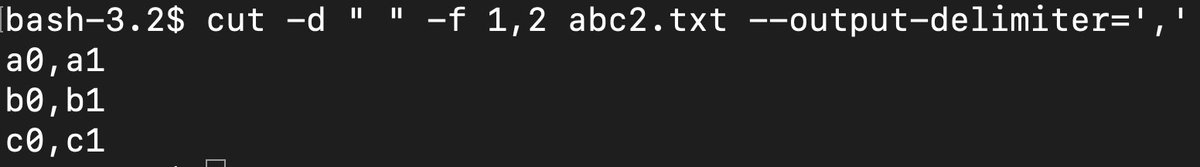

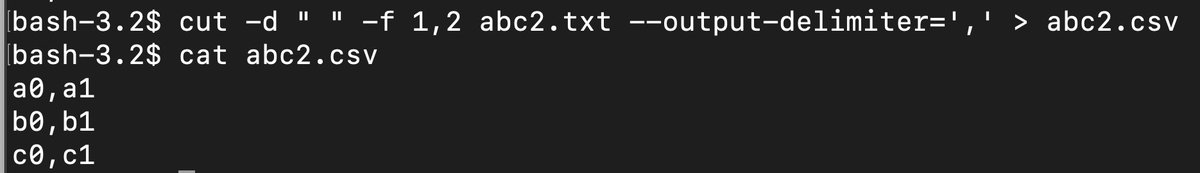
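A sketch with a made-up file whose sections are separated by lines of ---. It assumes GNU csplit: '{*}' repeats the pattern as many times as it matches, and --prefix names the output files part_00, part_01, and so on. Each matching line becomes the first line of the next piece.

```shell
#!/usr/bin/env bash
cd "$(mktemp -d)"
printf 'one\n---\ntwo\n---\nthree\n' > sections.txt

# Split before every line that is exactly ---
csplit --quiet --prefix=part_ sections.txt '/^---$/' '{*}'

ls part_*      # part_00  part_01  part_02
cat part_00    # one
cat part_02    # --- followed by three (the separator starts each later piece)
```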

cut … cuts out sections from each line of files and writes the result to standard output. It can select by byte positions (-b), character positions (-c), or delimited fields (-d and -f), and GNU cut’s --output-delimiter lets you swap the delimiter, so it could be used to change space-delimited data into a CSV file.

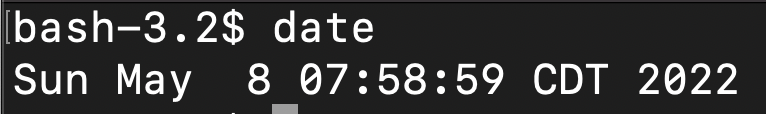

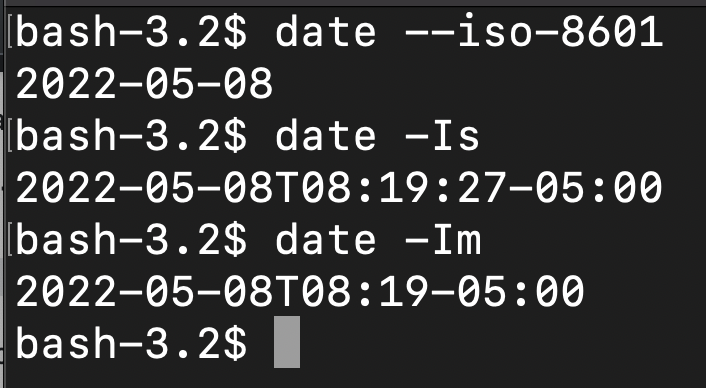
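A few cut selections on one made-up line of space-delimited data. The last command assumes GNU cut, since --output-delimiter is a GNU option.

```shell
#!/usr/bin/env bash
line="alice 30 paris"

echo "$line" | cut -d' ' -f1,3                         # alice paris     (fields 1 and 3)
echo "$line" | cut -c1-5                               # alice           (characters 1-5)
echo "$line" | cut -d' ' -f1- --output-delimiter=','   # alice,30,paris  (space-delimited to CSV)
```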

date … pretty self-explanatory, however date comes in a lot of formats and date --help lists lots of options. Getting the date in ISO 8601 format, with seconds, takes the -Is flag (GNU date).

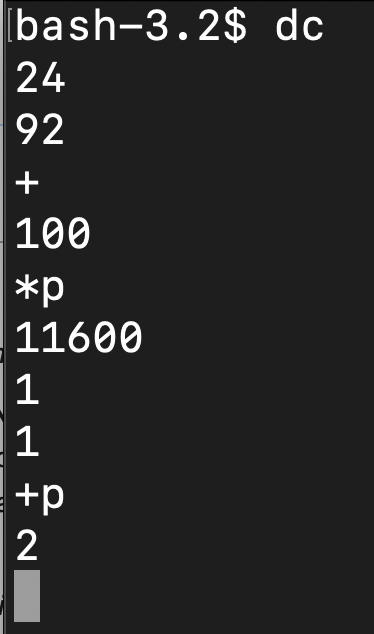

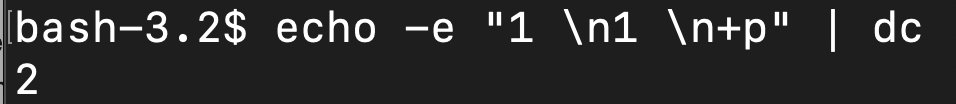
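A few date formats, assuming GNU date (-I and -d @SECONDS are GNU options; BSD/macOS date differs). The last line pins the time to the Unix epoch so its output is predictable.

```shell
#!/usr/bin/env bash
date -Is            # ISO 8601 with seconds and the UTC offset
date +%Y-%m-%d      # custom format: YYYY-MM-DD
date -u -d @0 -Is   # the Unix epoch: 1970-01-01T00:00:00+00:00
```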

dc … reverse Polish notation (RPN) calculator: operators come after their operands, so 2 3 + p prints 5 - https://en.wikipedia.org/wiki/Reverse_Polish_notation

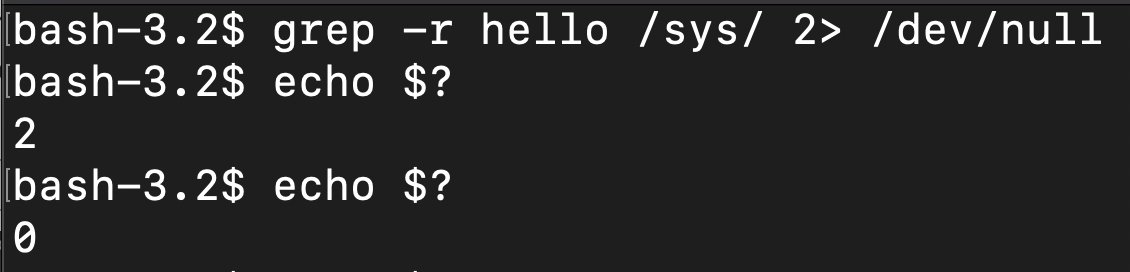

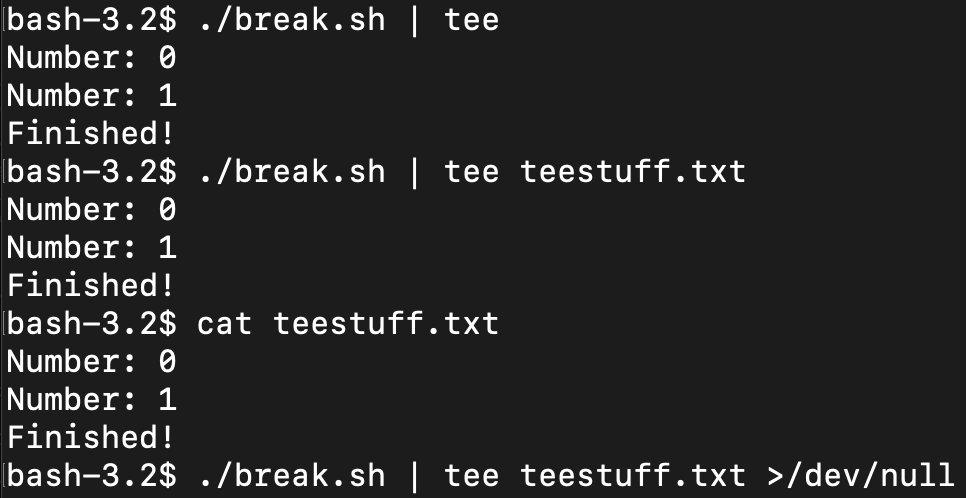

/dev/null … Whatever you write to /dev/null is discarded. Even with the output thrown away, you can still tell whether the command succeeded from $?, the exit status of the previous command, but only until the next command runs and overwrites it, so check or save it right away. More on i/o redirection https://tldp.org/LDP/abs/html/io-redirection.html

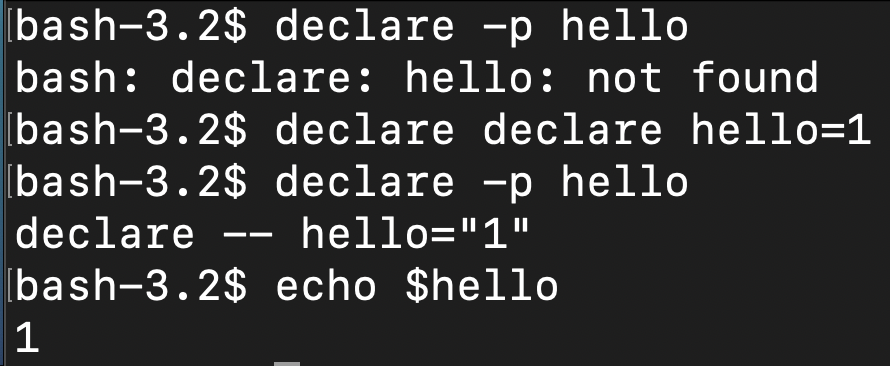
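A sketch of discarding output while keeping the exit status; the nonexistent path is deliberately made up so ls fails.

```shell
#!/usr/bin/env bash
# Throw away both normal output (>) and errors (2>&1), but keep the status.
ls /definitely/not/here > /dev/null 2>&1
status=$?                               # save it right away
echo "ls exited with status $status"    # non-zero: the path doesn't exist
echo "now \$? is $?"                    # 0: $? now refers to the echo above

# The common pattern: use the command's success or failure directly.
if grep root /etc/passwd > /dev/null; then
  echo "found a root user"
fi
```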

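declare … declare -p NAME prints how a variable is declared and fails if it doesn’t exist, so you can use it to check whether a particular variable exists. You can also declare a variable with declare whatever=value, and add attributes such as -i (integer) or -a (array).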

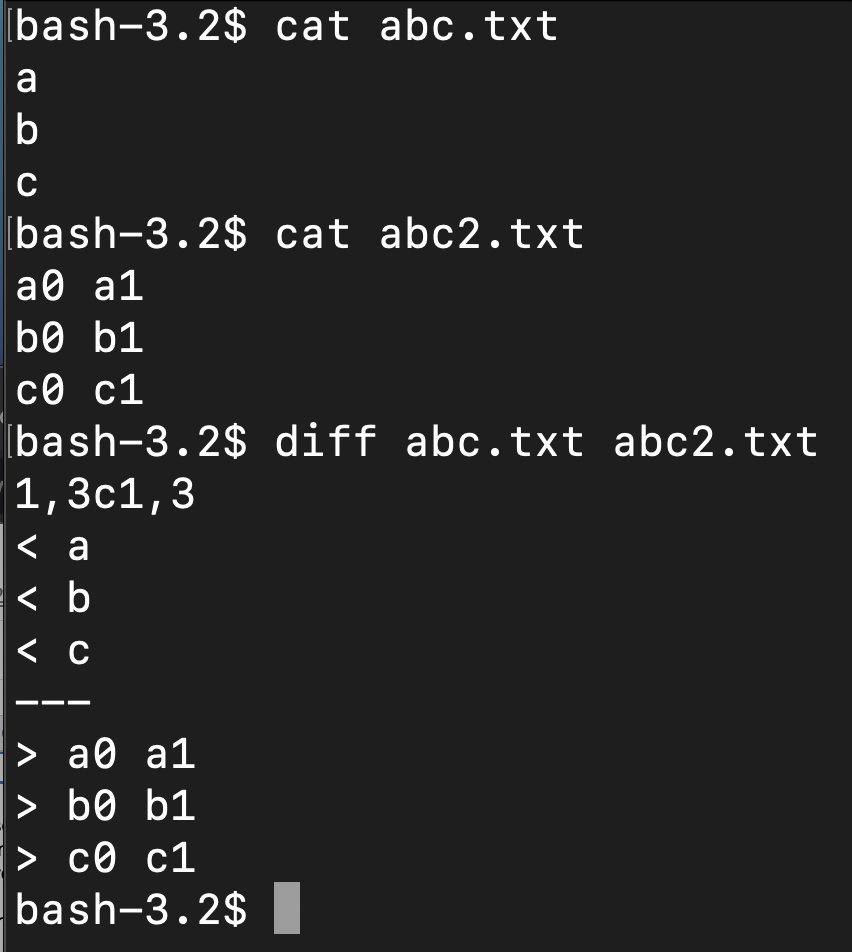
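A sketch of declare’s checking and attribute features; greeting, count, and no_such_var are made-up names.

```shell
#!/usr/bin/env bash
declare greeting="hello"    # same as greeting="hello"
declare -p greeting         # declare -- greeting="hello"

declare -i count=5          # -i: integer, so assignments are evaluated as arithmetic
count=count+1
echo "$count"               # 6

if declare -p no_such_var > /dev/null 2>&1; then
  echo "no_such_var exists"
else
  echo "no_such_var is not set"   # this branch runs
fi
```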

diff … compares two files line by line and shows the lines that differ; diff -u gives the unified format, similar to what git diff shows.

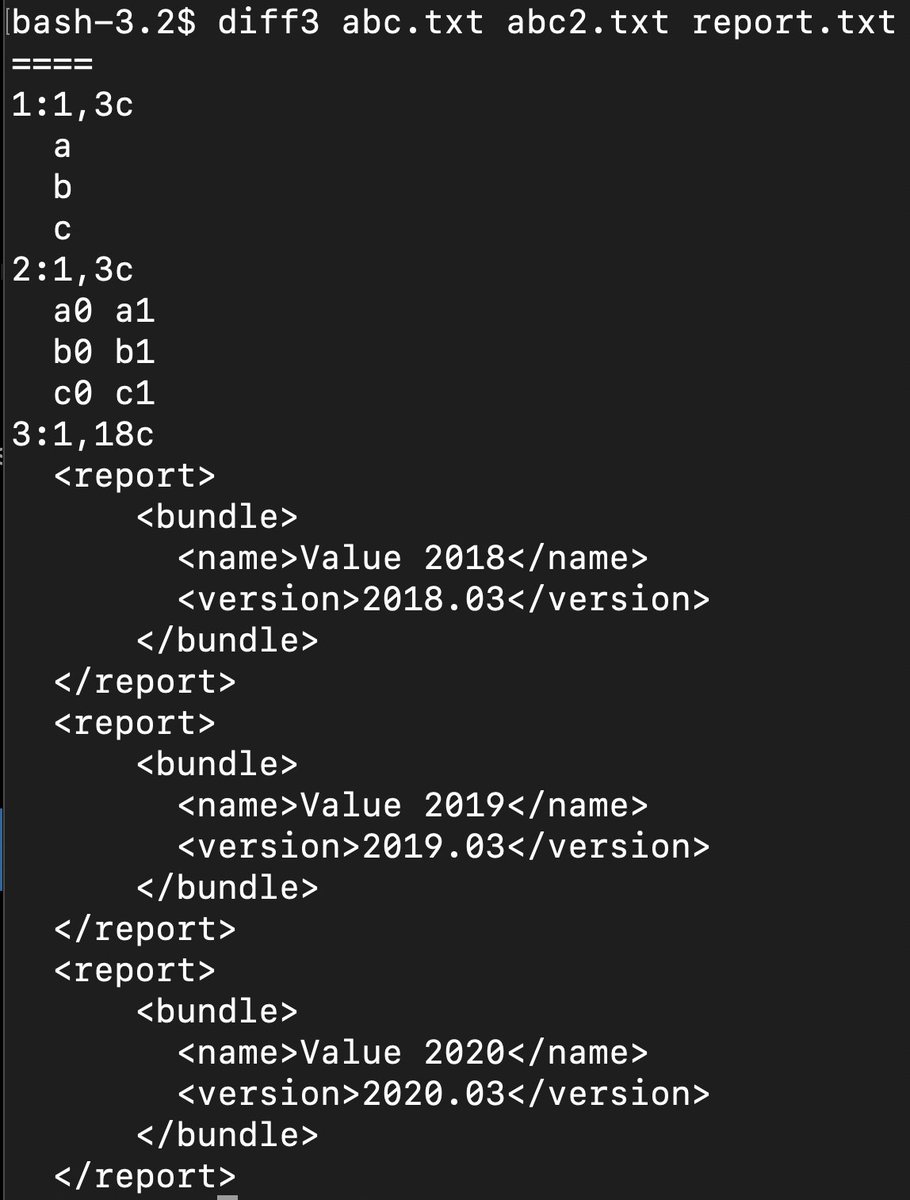

diff3 … compare 3 different files in the same manner as diff

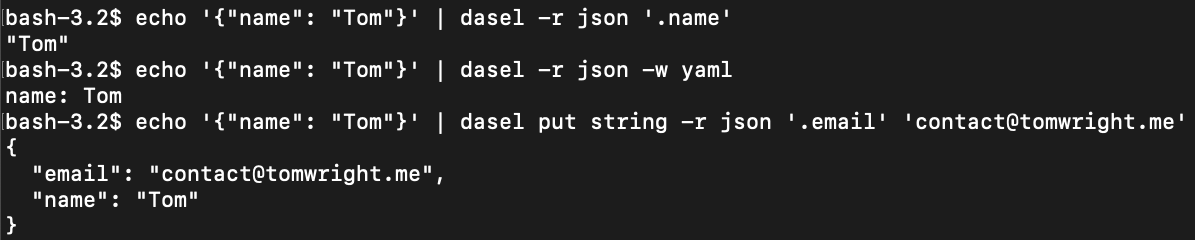

dasel … allows conversions between JSON, YAML, TOML, XML and CSV, similar to yq/jq. https://github.com/TomWright/dasel#quickstart

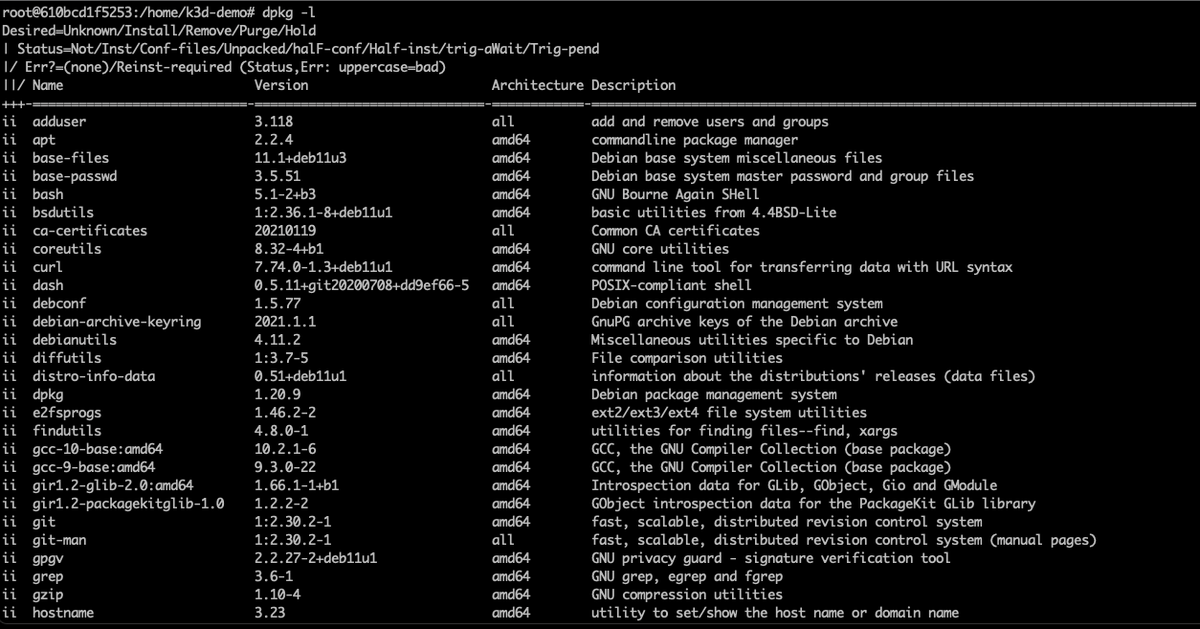

dpkg … the low-level package management tool for Debian and derivatives such as Ubuntu; dpkg -l lists all of the installed packages.

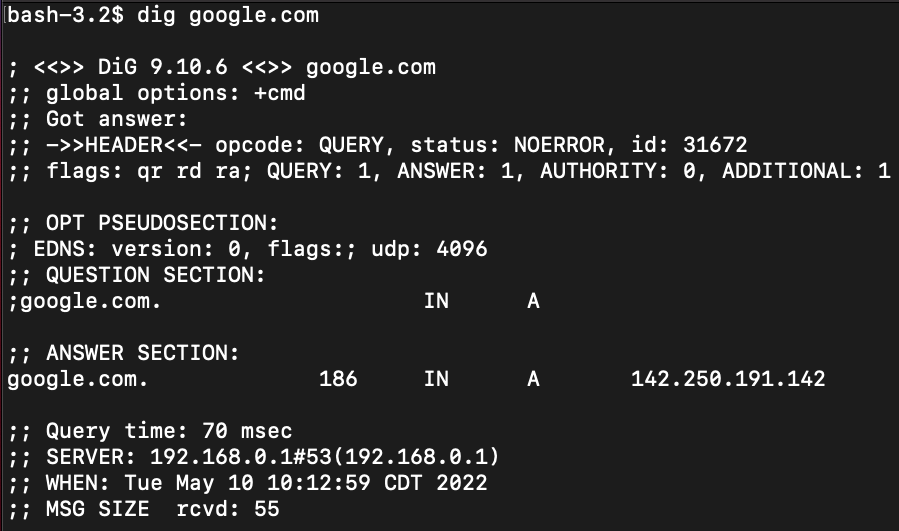

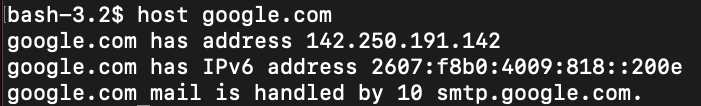

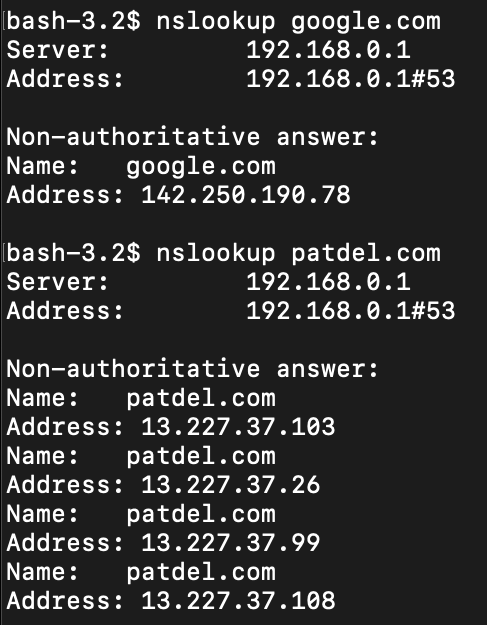

dig … similar to “host”, gives you DNS information.

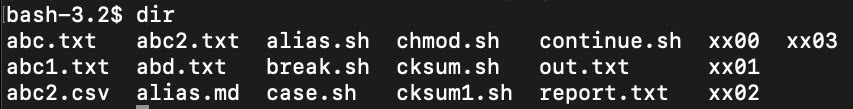

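dir … in GNU coreutils, dir is essentially ls -C -b (columns, with special characters escaped). Windows’ command prompt uses dir rather than ls, while some Unix systems (macOS, for example) don’t ship dir at all, so it’s good to know both.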

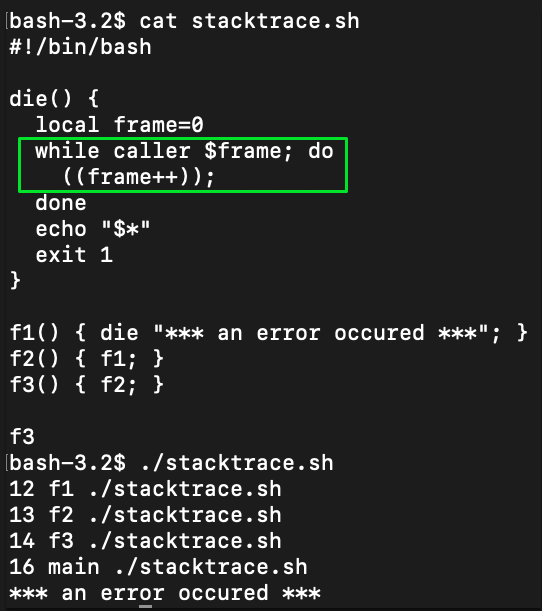

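A few “c” commands I missed previously: caller … a builtin that, inside a function, reports where that function was called from: the line number and source file, plus the calling function’s name when you ask for a frame like caller 0. It can be used to create a decent die function to track down errors in moderately complex scripts.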
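A minimal sketch of the die-function idea. die and check_file are hypothetical names; caller 0 inside die prints the line number, function name, and file of the call that led into die.

```shell
#!/usr/bin/env bash
die() {
  # caller 0 prints "LINE FUNCTION FILE" for the call into die,
  # i.e. the line inside check_file that invoked it.
  echo "error: $* (called from: $(caller 0))" >&2
  exit 1
}

check_file() {
  [ -f "$1" ] || die "missing file: $1"
}

# Run in a subshell so the demo's "exit 1" doesn't end this whole script.
( check_file /no/such/file )
echo "check_file exit status: $?"   # 1
```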

curl … transfers data to or from a server over HTTP and many other protocols, most often to download stuff. Very common.

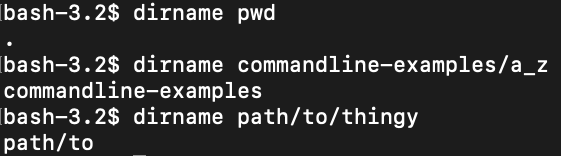

dirname … strips the last component off a path, leaving the directory part: dirname /path/to/file.txt gives /path/to.

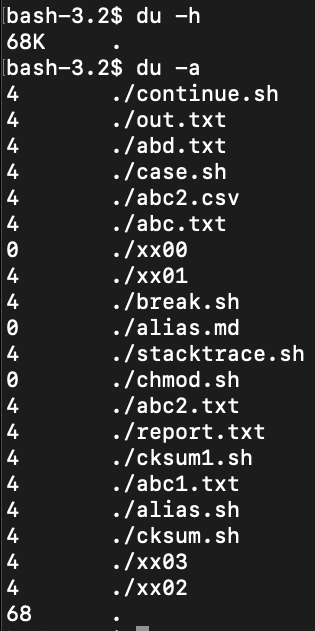

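du … prints the disk usage of a directory and each of its subdirectories (in 1 KB blocks by default on GNU systems); -a includes every file as well (as shown), -s gives just the total, and -h prints human-readable sizes.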

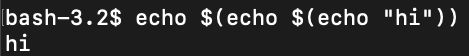

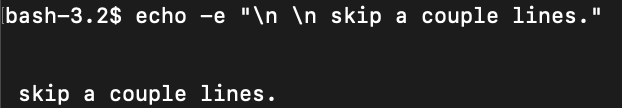

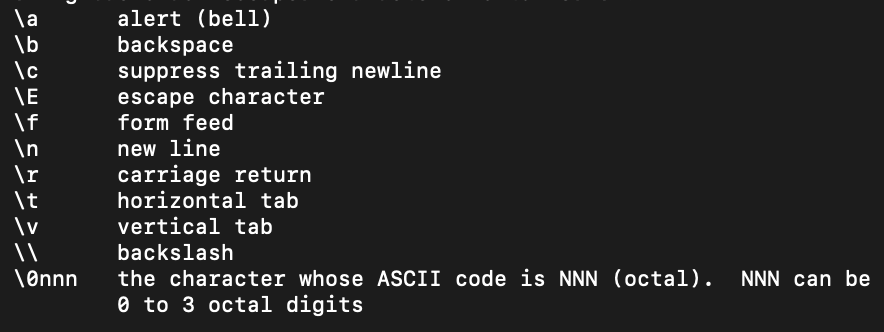

echo … echo, echo, echo - just kidding, it’s just echo, the print-to-standard-output command. If you nest echo, e.g. echo $(echo $(echo hi)), it still only shows hi once, because each echo just passes along whatever you put into it. echo -e enables backslash escapes such as \n (newline) and \t (tab).

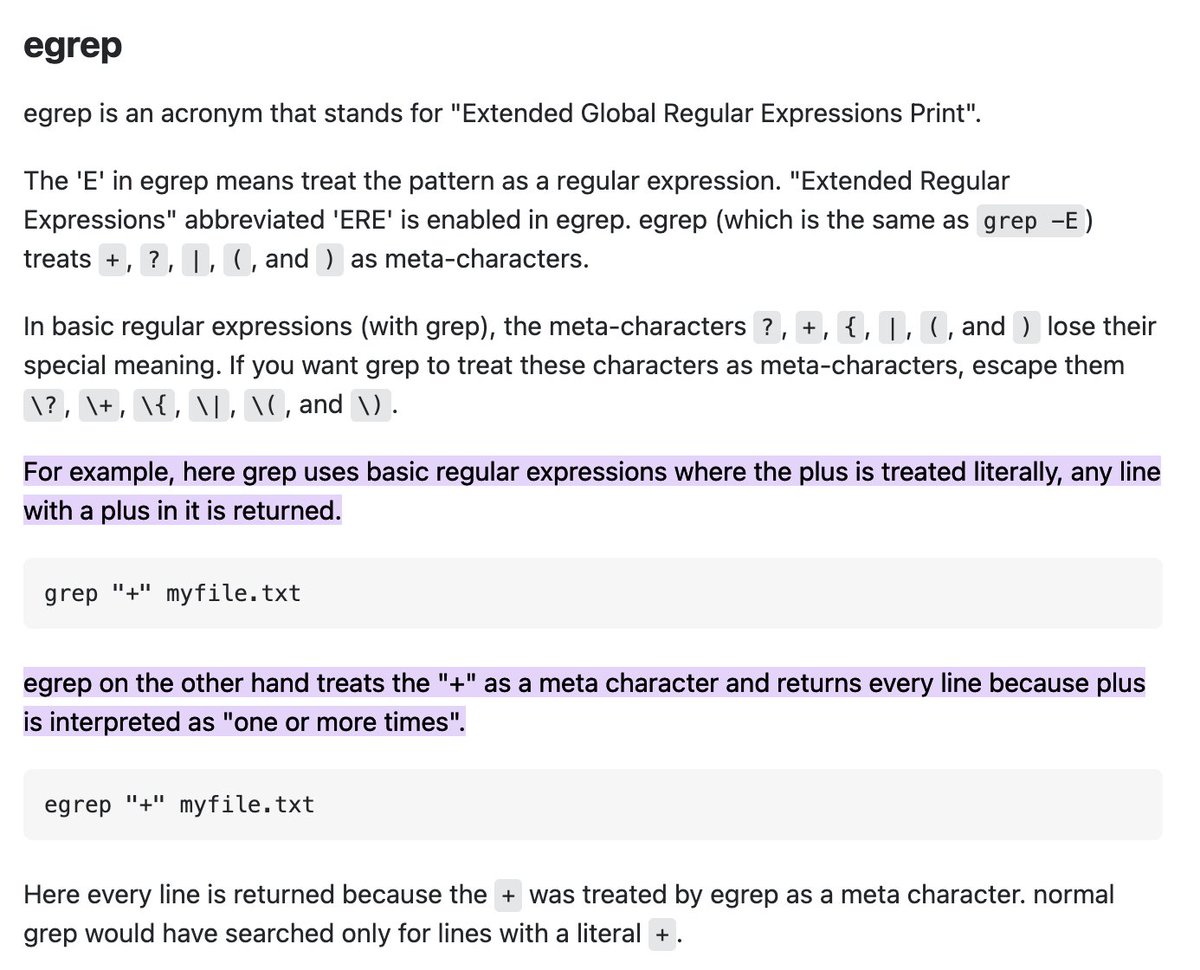

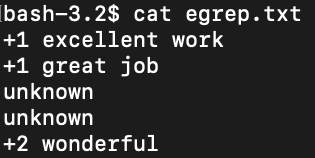

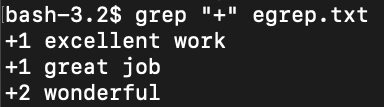
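A sketch of nested echo and echo -e in bash. The plain-echo line assumes bash’s default behavior (no xpg_echo), where escapes are printed literally; printf is included because it treats escapes the same way across shells.

```shell
#!/usr/bin/env bash
echo $(echo $(echo hi))           # nested: still prints hi once
echo -e "col1\tcol2\nnext line"   # -e: \t becomes a tab, \n a newline
echo "col1\tcol2"                 # without -e, bash prints the backslashes literally
printf 'col1\tcol2\n'             # printf always interprets the escapes
```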

egrep … is the same as grep -E, which uses extended regular expressions. With plain grep (basic regular expressions) a character like + is literal, so grep 'a+' only returns lines containing the text “a+”. With egrep, + is a metacharacter meaning “one or more of the previous character”, so egrep 'a+' returns every line containing at least one a. https://superuser.com/questions/508881/what-is-the-difference-between-grep-pgrep-egrep-fgrep

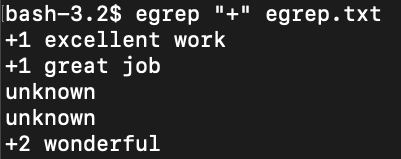
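A sketch of the basic vs. extended regex difference on a made-up three-line file:

```shell
#!/usr/bin/env bash
cd "$(mktemp -d)"
printf 'a+b\naab\nbbb\n' > demo.txt

grep 'a+' demo.txt      # basic regex: + is literal, so only: a+b
grep -E 'a+' demo.txt   # extended regex: "one or more a", so: a+b and aab
egrep 'a+' demo.txt     # same as grep -E (newer GNU grep warns egrep is obsolescent)
```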

enable … enable and disable builtin shell commands.

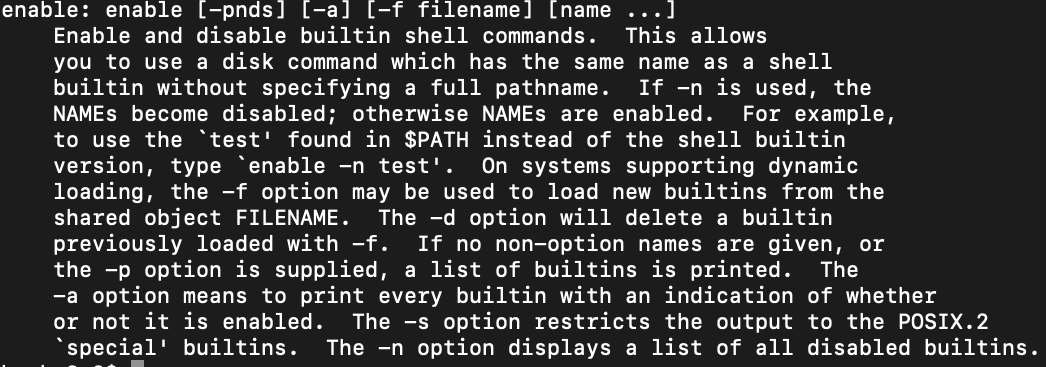

env … shows your environment variables. Note the PATH variable is in there.

eval … store a command in a variable, then eval "$VAR" parses that string and executes it as a command. Kind of similar to alias, but alias sets up a named shortcut rather than storing the command in a variable. Careful: never eval text from an untrusted source, since it will run whatever commands it contains.

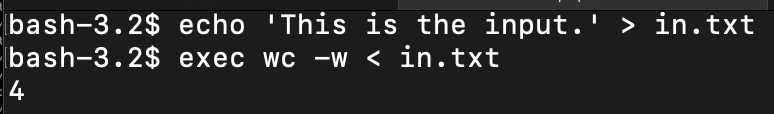

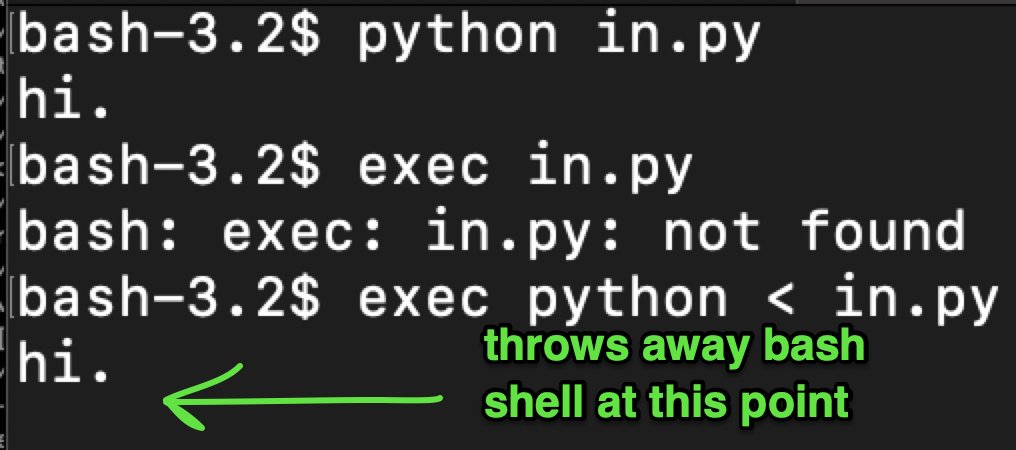

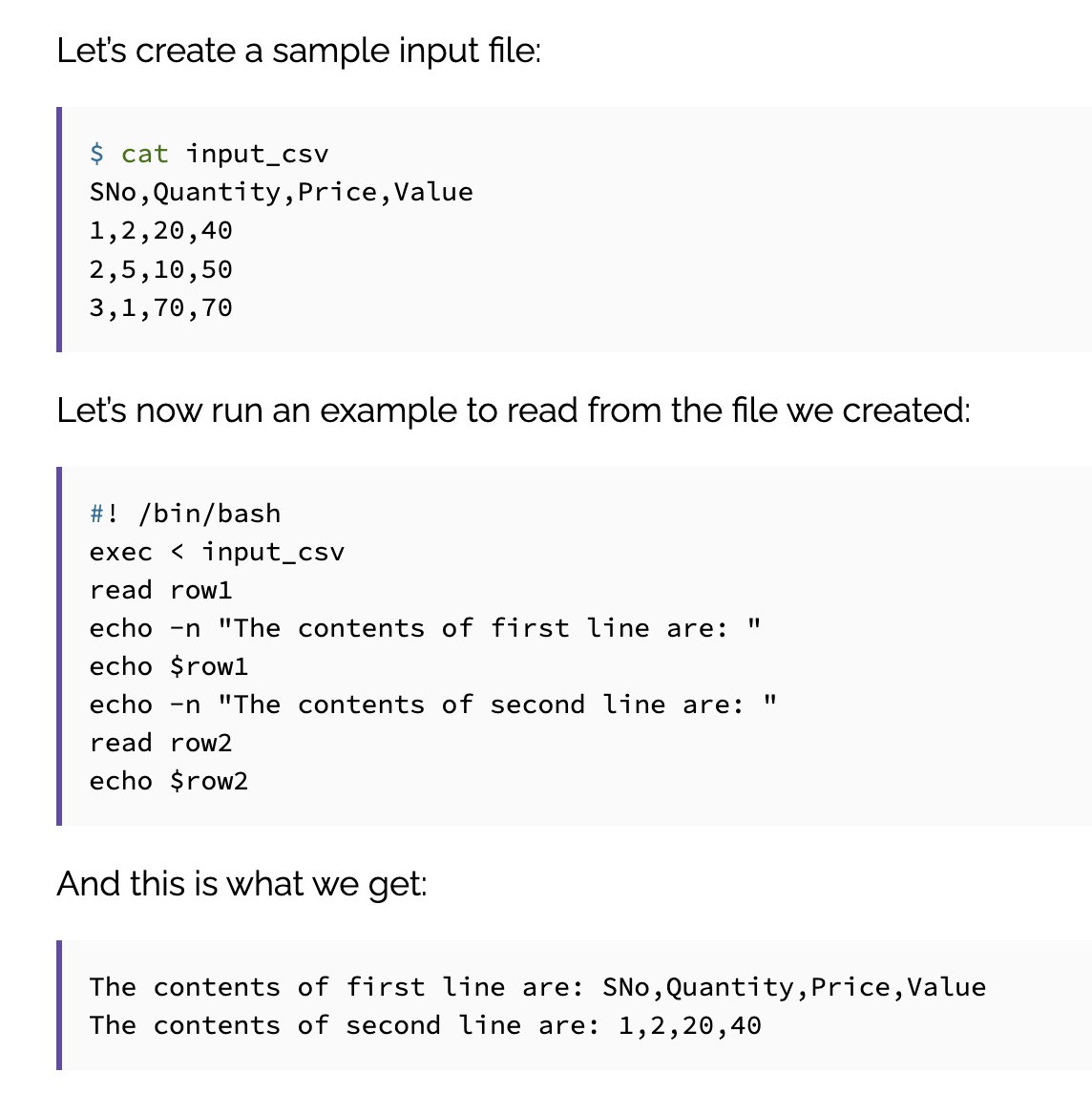
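A sketch of eval running a stored command, and of the extra round of expansion it performs; cmd, var_name, and color are made-up names.

```shell
#!/usr/bin/env bash
cmd='ls -d /'
eval "$cmd"                 # runs the string as a command: prints /

# eval expands its input a second time, e.g. to read a variable whose
# *name* is stored in another variable:
var_name="color"
color="blue"
eval "echo \$$var_name"     # becomes: echo $color, which prints blue
```

In bash, ${!var_name} does that second example without eval, which is safer.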

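exec … replaces the current shell with the given command, in the same process rather than starting a new one; when that command finishes, the process exits with the command’s exit code. With no command, exec just applies its redirections to the current shell, e.g. exec < file.txt makes the shell read its standard input from a file.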

exec … (continued) a common use is at the end of a wrapper script that launches a long-running program, often one written in another language: once the program starts, the shell has nothing left to do, so exec swaps it out instead of leaving an idle shell running, freeing up system resources.

exit … exits a shell or process.

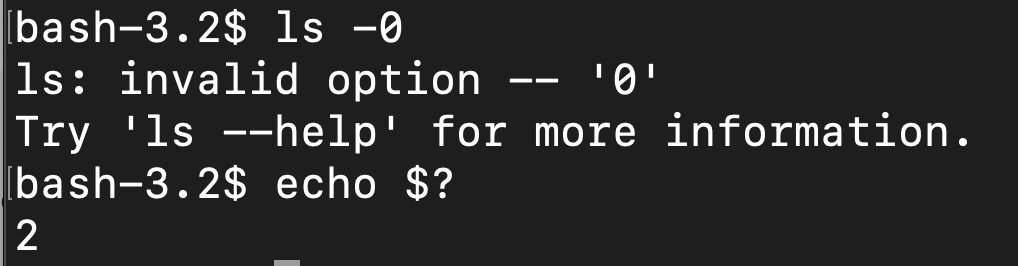

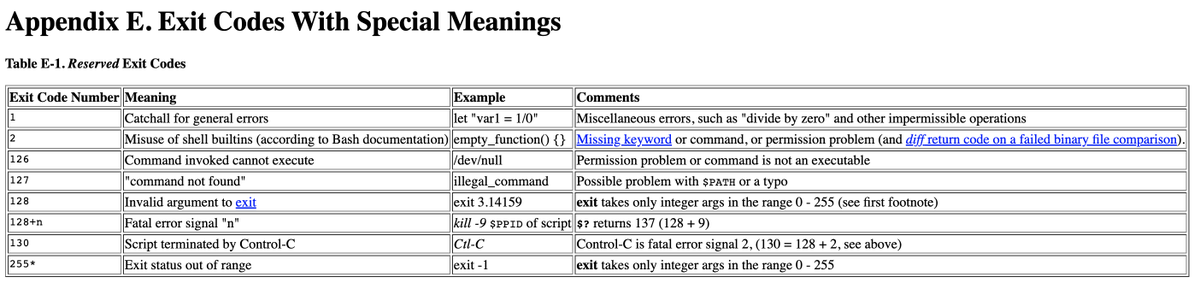

exit (continued) … exit 0, exit 1, exit 2, etc., while 0 is success and 1 is a general error catch-all, different exit codes can have different meanings. https://tldp.org/LDP/abs/html/exitcodes.html

exec (continued from above) … more on exec : https://www.baeldung.com/linux/exec-command-in-shell-script

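there’s a lot you can do with exec. “exec bash” replaces the current shell with a fresh bash. Within scripts it’s used for program calls, logging (exec > logfile sends all later output to the file), letting stdin read from a file, and running a command with an empty environment with exec -c.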

exec (continued) … there’s also exec "$@", which runs whatever arguments were passed to the script as a command, replacing the script’s shell. It’s a common last line in Docker entrypoint scripts: do some setup, then hand off to the real command.

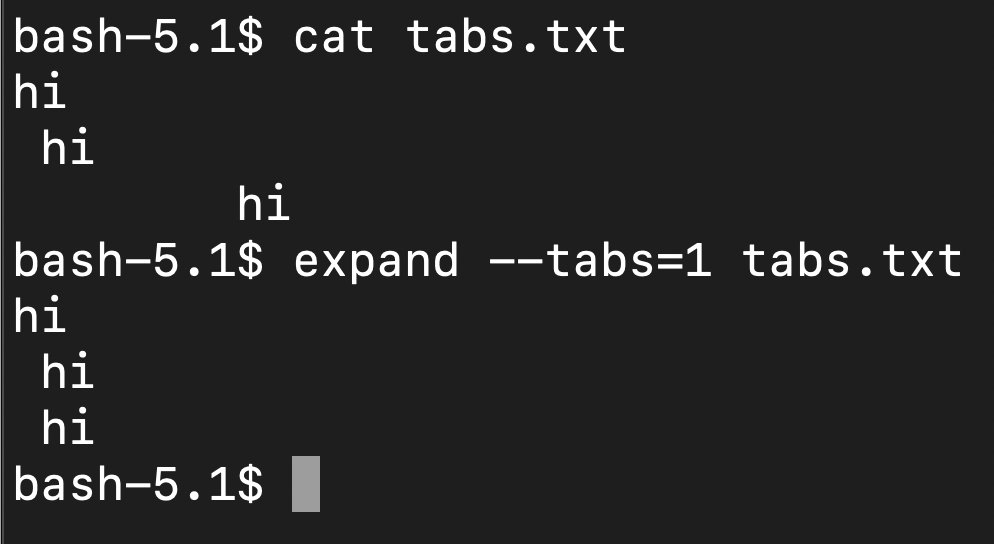
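Two small sketches. The first shows that exec reuses the same process, since the $$ process ID doesn’t change; the second is the entrypoint-style exec "$@" pattern run through bash -c, where "wrapper" is a made-up name that only fills $0.

```shell
#!/usr/bin/env bash
# 1) exec replaces the shell in place: both lines print the same PID.
bash -c 'echo "before exec: $$"; exec bash -c "echo \"after exec: \$\$\""'

# 2) Entrypoint pattern: do some setup, then hand off to the arguments.
#    With bash -c, the word after the script ("wrapper") becomes $0
#    and the remaining words become "$@".
bash -c 'echo "setting up..."; exec "$@"' wrapper echo "hello from the real command"
```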

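expand … officially ending the tabs vs. spaces war, you can use expand to convert tabs to spaces (and unexpand to go the other way). You pick the tab width with --tabs=N: each tab becomes however many spaces it takes to reach the next multiple of N columns. I picked N=1 in this example, so every tab became a single space.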

expr … expression evaluator, similar to bc and dc, but instead of feeding in a string you pass the expression as arguments, with spaces between each part. It only does integer arithmetic: bc also has exponentiation and square root operations, which expr lacks, and expr typically maxes out at 2^63-1.

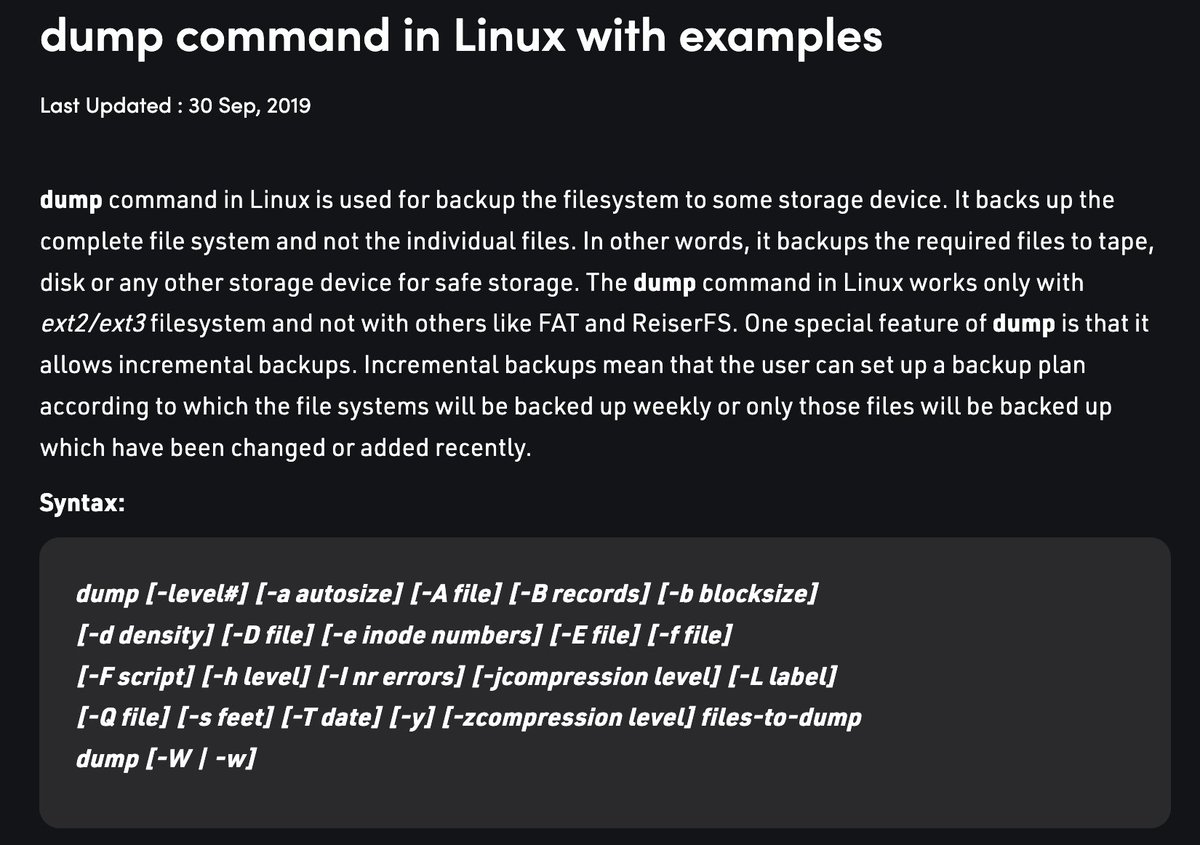

dump … (forgot this one) can be used to create backups of ext2/3/4 filesystems. So if you have a Raspberry Pi with a flash drive, you could back the system up using a cron job and dump.

dd … copies raw data block by block, so it can do a similar backup job to dump (dump works at the filesystem level, dd at the raw device level). There are debates online going back to at least 2010 about which one is better. Here’s an article on using dd as a backup tool in the Raspberry Pi scenario. https://opensource.com/article/18/7/how-use-dd-linux

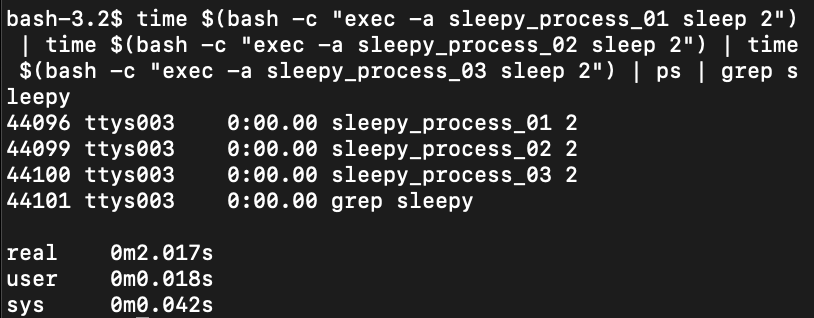

export … makes variables and functions available to subprocesses. But what is a subprocess? It’s a child process started by your shell. Here is an example of three subprocesses running sleep under the names sleepy_process_01 to 03 (exec -a sets the name a process shows up as), connected with | so that they run in parallel: all three finish in about 2 seconds rather than 6.

export (continued) … that command was: time $(bash -c "exec -a sleepy_process_01 sleep 2") | time $(bash -c "exec -a sleepy_process_02 sleep 2") | time $(bash -c "exec -a sleepy_process_03 sleep 2") | ps | grep sleepy

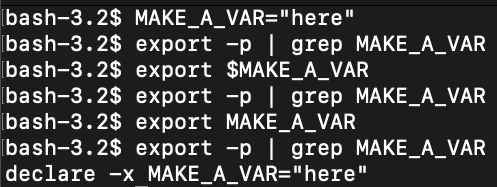

export (continued) … if you want to export a variable, you do, “export VAR_NAME” not “export $VAR_NAME”

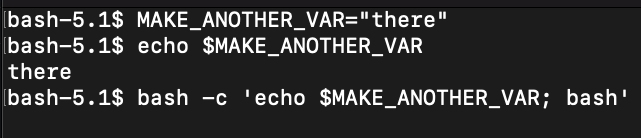

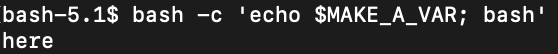

export (continued) … if you don’t export the variable and then try to use it in a subprocess, such as a bash within a bash, the variable will not be accessible. An exported variable is passed down to every subprocess started from that shell afterwards, but not to other terminals or back up to a parent shell; for that it has to be set in each shell, e.g. via ~/.bashrc.

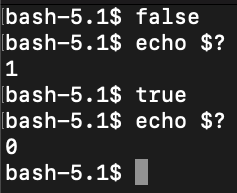

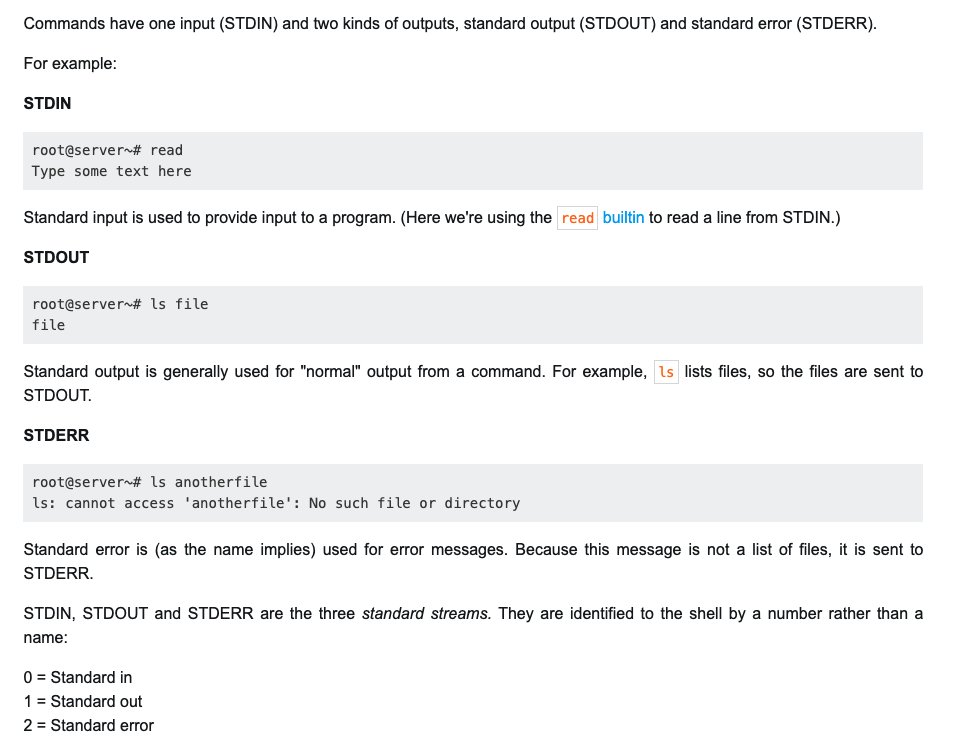

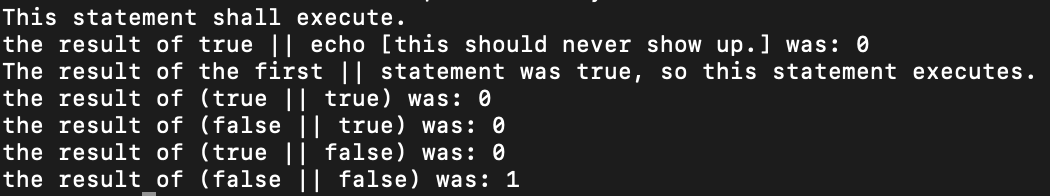

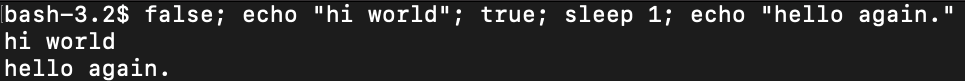
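A sketch of the exported/unexported difference, using bash -c as the subprocess; GREETING and LANG_DEMO are made-up variable names.

```shell
#!/usr/bin/env bash
GREETING="hello"
bash -c 'echo "child sees: [$GREETING]"'   # child sees: []  (not exported yet)

export GREETING                            # export GREETING, not $GREETING
bash -c 'echo "child sees: [$GREETING]"'   # child sees: [hello]

# One-off alternative: put a variable in a single command's environment.
LANG_DEMO=fr bash -c 'echo "child sees: [$LANG_DEMO]"'   # child sees: [fr]
```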

false … produces an exit code of 1 and nothing else; true produces an exit code of 0 and nothing else. We can produce arbitrary exit codes (0-255) with the exit command, but some codes have pre-assigned meanings: https://www.redhat.com/sysadmin/exit-codes-demystified

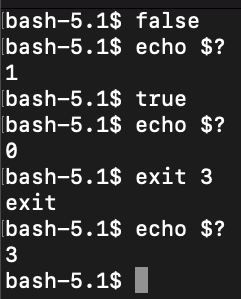

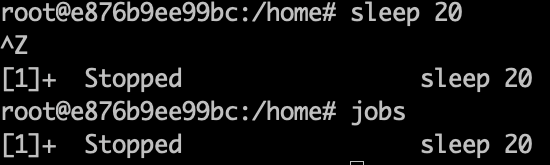
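A sketch that prints a few exit codes. no_such_command_xyz is a deliberately made-up name, used to trigger bash’s “command not found” code.

```shell
#!/usr/bin/env bash
false;       echo "false   -> $?"   # 1
true;        echo "true    -> $?"   # 0
( exit 42 ); echo "exit 42 -> $?"   # 42: a subshell exiting with an arbitrary code

no_such_command_xyz 2>/dev/null
echo "missing command -> $?"        # 127: bash's code for "command not found"
```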

fg … brings a job to the foreground. After suspending a job with ctrl+z, it can be resumed in the foreground with fg %1. Jobs can be referred to by %NUMBER, %COMMANDNAME, %+ or %% for the current job, and %- for the previous job.

fg (continued) … you can push things back and forth between the foreground and background.

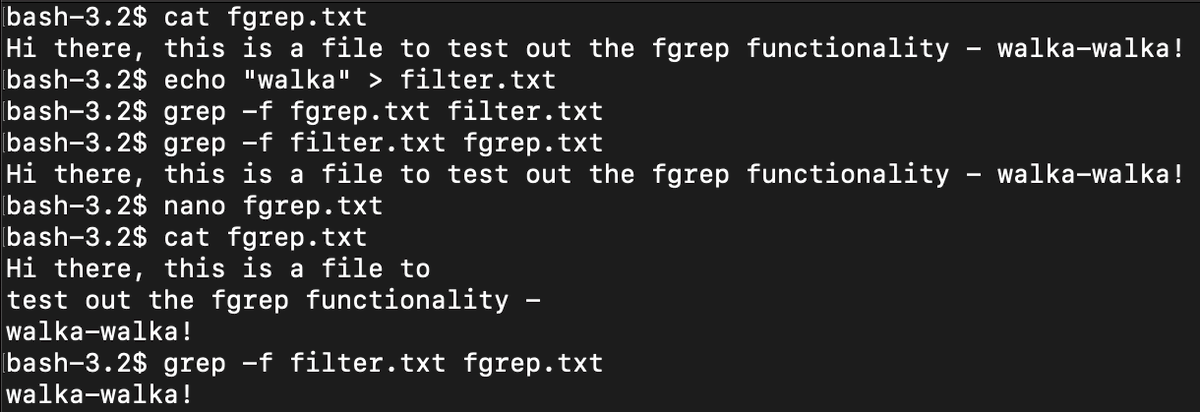

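fgrep … is equivalent to grep -F: the pattern is treated as a fixed string, not a regular expression, so characters like . and * match themselves. It pairs well with -f, which reads the patterns from a file, one per line, and keeps the lines of the target file that contain any of them, e.g. grep -F -f FILTER_FILE.txt TARGET_FILE.txt. (It matches substrings, so add -w to match whole words only.)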

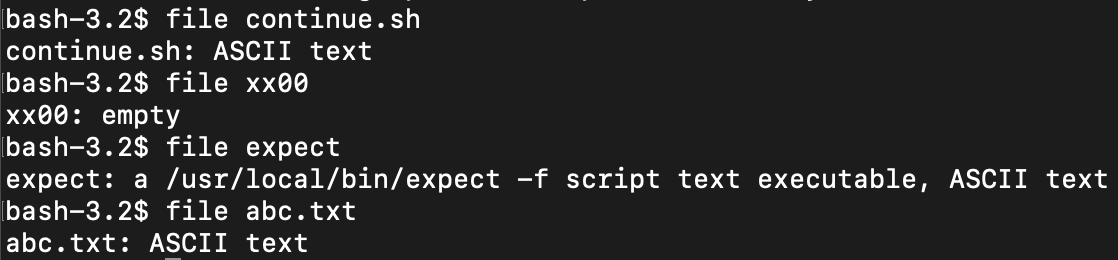
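A sketch of fixed-string matching with a filter file, reusing the FILTER_FILE.txt/TARGET_FILE.txt names with made-up log lines:

```shell
#!/usr/bin/env bash
cd "$(mktemp -d)"
printf 'error\nwarn\n' > FILTER_FILE.txt
printf 'ok: started\nerror: disk full\nwarn: low memory\ninfo: a.b\ninfo: axb\n' > TARGET_FILE.txt

grep -F -f FILTER_FILE.txt TARGET_FILE.txt   # the "error" and "warn" lines
grep -F 'a.b' TARGET_FILE.txt                # only info: a.b (the dot is literal)
grep 'a.b' TARGET_FILE.txt                   # regex: . matches anything, so axb too
```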

file … reports the type of a file (text, image, executable, and so on) by inspecting its contents rather than trusting the extension.

find … finds things within a directory tree based upon various criteria. One I like is find ./ -name 'filename_you_want', which looks in the current directory and all of its subdirectories for any filename you specify. There’s lots of information under “man find”.

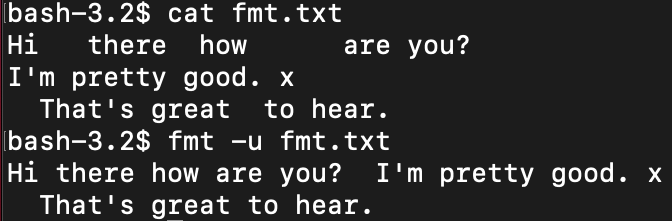

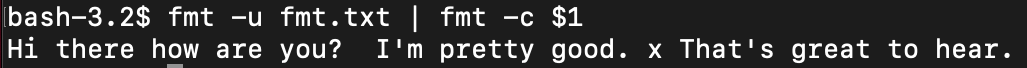

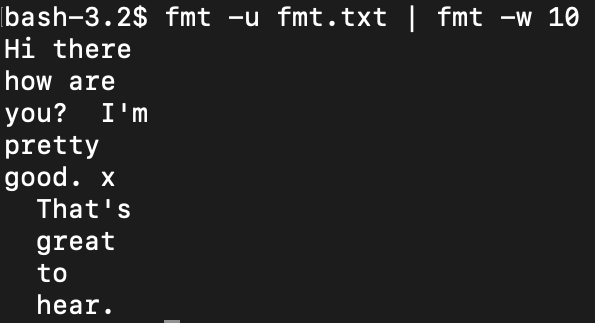
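A sketch on a small made-up directory tree, showing -name, -type, and why the pattern is quoted:

```shell
#!/usr/bin/env bash
cd "$(mktemp -d)"
mkdir -p project/src project/docs
touch project/src/main.sh project/docs/readme.txt project/docs/notes.txt

find . -name 'main.sh'         # ./project/src/main.sh  (searches every subdirectory)
find . -type f -name '*.txt'   # both .txt files; quotes stop the shell expanding *
find . -type d                 # only directories
```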

fmt … reformats paragraph text according to rules selected by options, such as the line width with -w.

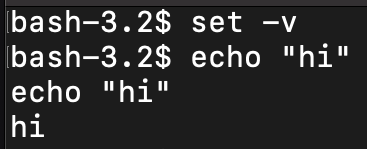

ftp … don’t use it, it’s not secure: passwords and data travel unencrypted. Instead use scp or sftp, which I’ll talk about later in the s’s.

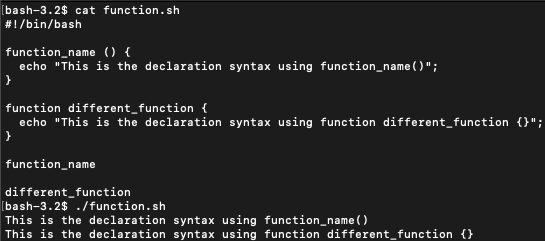

function … bash gives you two syntaxes for declaring a function: function_name() { … }, the more common (and POSIX) way, or function new_function { … } without the parentheses.
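Both syntaxes side by side; greet and farewell are made-up names. $1 inside a function is the function’s own first argument, not the script’s.

```shell
#!/usr/bin/env bash
greet() {                 # the common, POSIX-compatible syntax
  echo "hello, $1"
}

function farewell {       # bash's "function" keyword, no parentheses
  echo "goodbye, $1"
}

greet world               # hello, world
farewell world            # goodbye, world
```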

gawk … is the gnu implementation of the awk programming language. There may be different options available depending upon what you need, check the manual pages with, “man gawk”

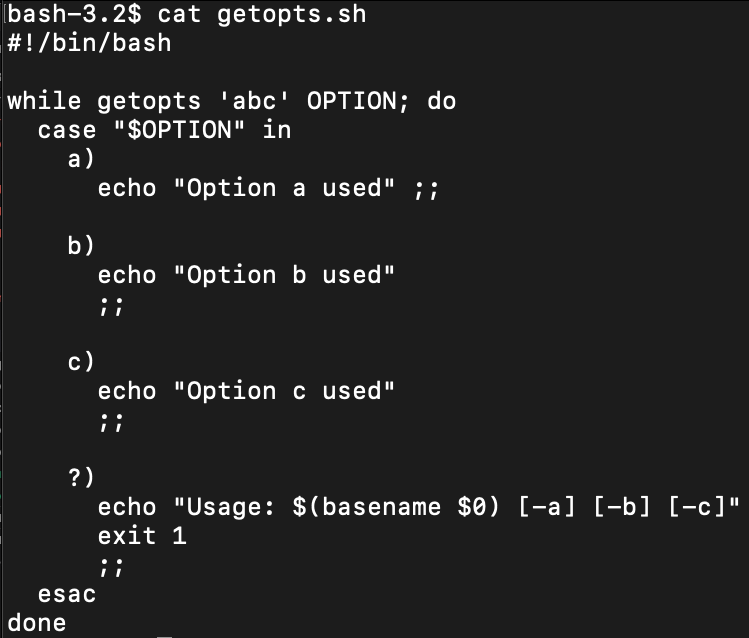

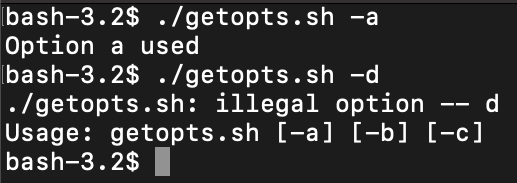

getopts … a bash builtin, not to be confused with getopt, a separate external program (the GNU version of getopt even supports long options like --verbose, which getopts doesn’t). While positional arguments are just a matter of looping through $1, $2, etc., parsing options like -v or -f FILE by hand is harder, so getopts lets you list the options you accept and loops over them for you.

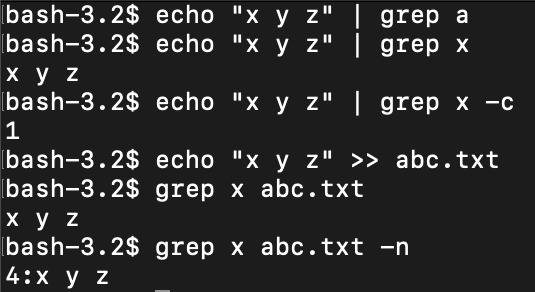

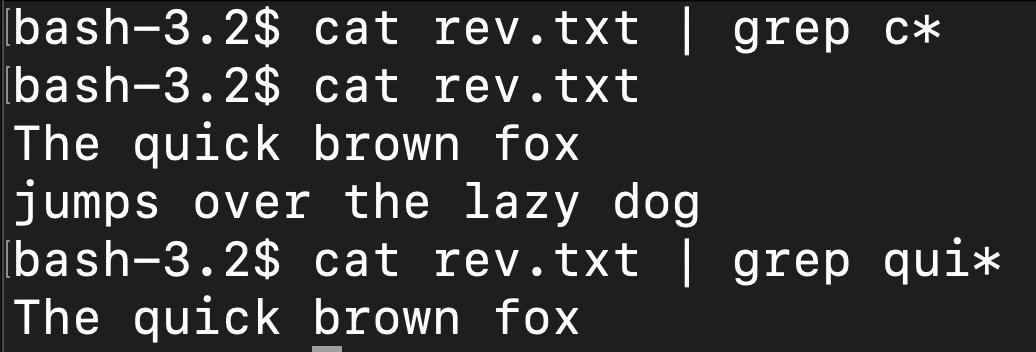
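A minimal sketch of a getopts loop inside a hypothetical parse_args function that accepts -v (a flag) and -f FILE (an option with a value). Making OPTIND local and resetting it lets the function be called more than once.

```shell
#!/usr/bin/env bash
parse_args() {
  local verbose=0 file="" opt
  local OPTIND=1                   # reset getopts' position for each call
  while getopts "vf:" opt; do      # "f:" means -f takes a value
    case "$opt" in
      v) verbose=1 ;;
      f) file="$OPTARG" ;;         # the value given after -f
      *) echo "usage: parse_args [-v] [-f FILE] [args...]" >&2; return 1 ;;
    esac
  done
  shift $((OPTIND - 1))            # drop the options, keep the other arguments
  echo "verbose=$verbose file=$file rest=$*"
}

parse_args -v -f data.txt extra1 extra2   # verbose=1 file=data.txt rest=extra1 extra2
```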

grep … is a filter or search command, which can search the output of another command, files, or a whole directory tree (with -r). Don’t forget its useful flag options like -c to count matching lines or -n to show the line number of each match within a file.

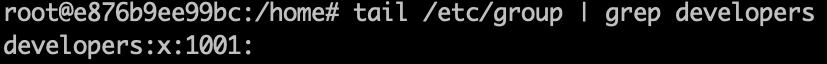

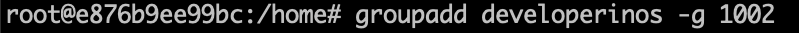

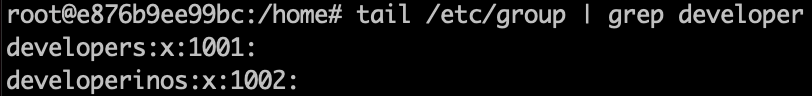

groupadd … add a new user group. You can explicitly add a group id number (GID) with the -g flag.

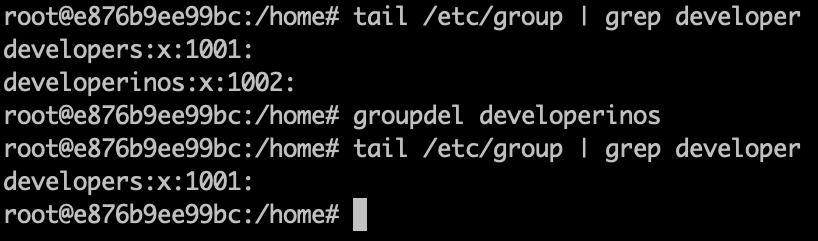

groupdel … used for deleting groups.

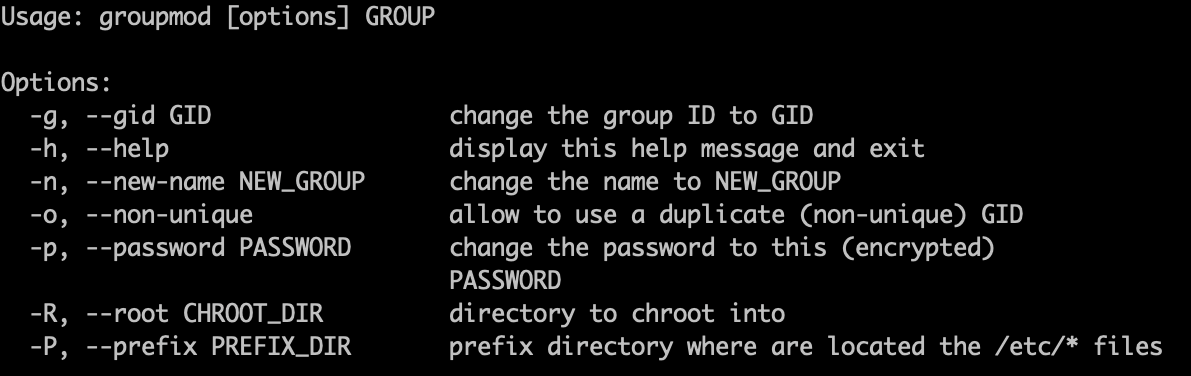

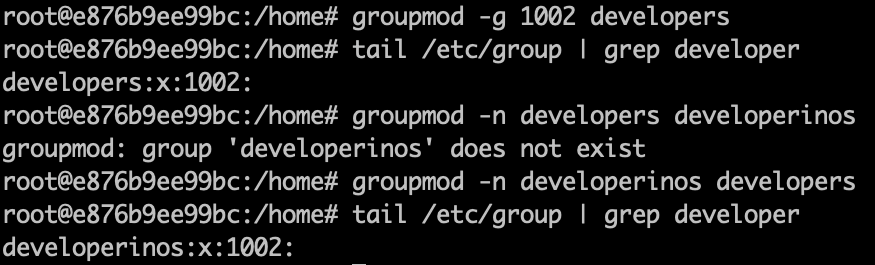

groupmod … modify a group’s name (-n), group ID (-g), password (-p), and so on.

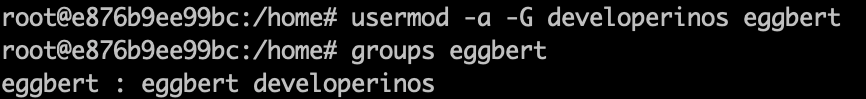

groups … lists the groups that a user is in. So if you add a user to a group with “usermod -a -G” and then run groups on that user, you can see that they have been added to the group specified.

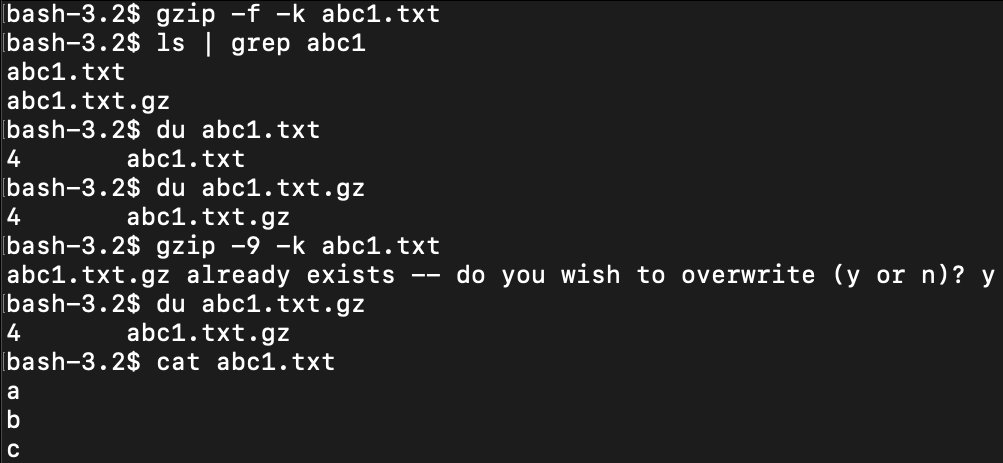

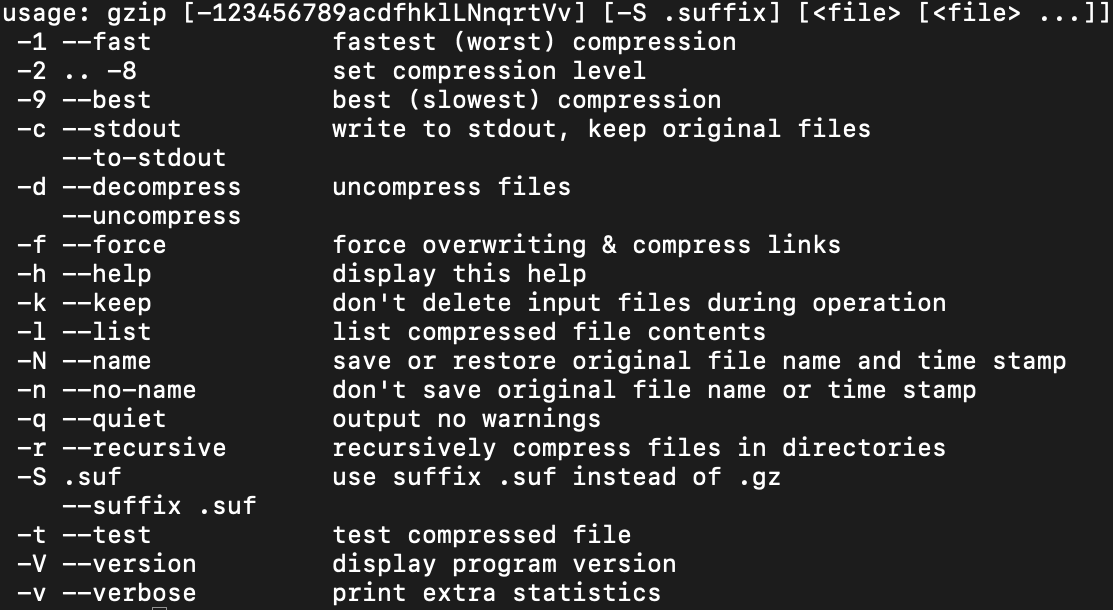

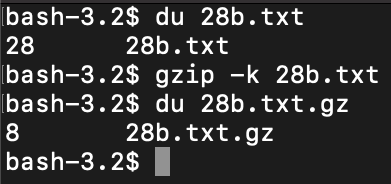

gzip … is the compression tool which gives the famous .gz output. Using -k keeps the original file, -1 (or --fast) is the fastest compression, -9 is maximum compression, -d decompresses, and -f forces gzip to overwrite existing files. It doesn’t really make sense to gzip super tiny files, as we show here: the header overhead can make the output bigger than the input. https://stackoverflow.com/questions/46716095/minimum-file-size-for-compression-algorithms

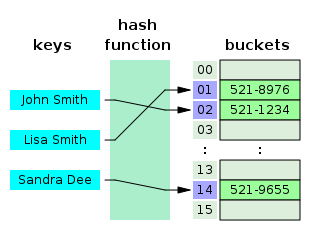

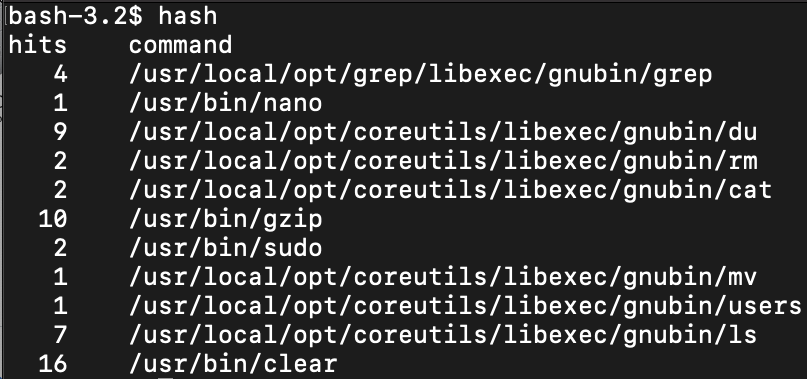

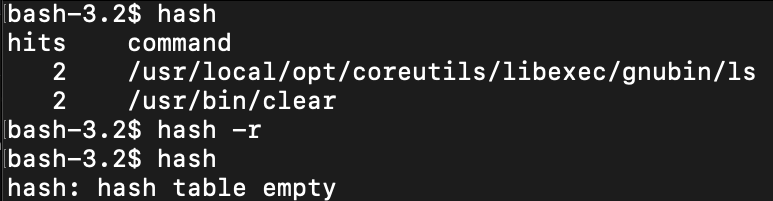

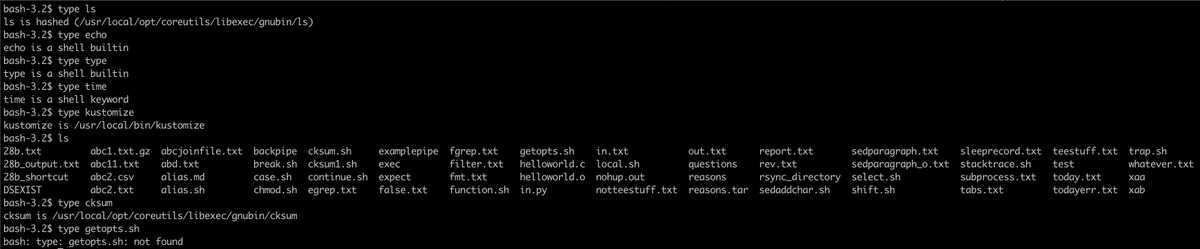
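A sketch of a compress/decompress round trip on throwaway files, plus the tiny-file case. It assumes gzip 1.6 or newer, where -k is available.

```shell
#!/usr/bin/env bash
cd "$(mktemp -d)"
seq 1 10000 > numbers.txt          # repetitive text compresses well

gzip -k -9 numbers.txt             # -k keeps numbers.txt, -9 = best compression
ls -l numbers.txt numbers.txt.gz   # the .gz is much smaller
gzip -dc numbers.txt.gz | cmp - numbers.txt && echo "round trip OK"   # -d decompress, -c to stdout

printf 'hi\n' > tiny.txt
gzip -k tiny.txt
ls -l tiny.txt tiny.txt.gz         # the .gz is bigger: header overhead outweighs any savings
```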

hash … shows bash’s hash table of programs it has already located in $PATH. Caching those locations in memory means bash doesn’t have to search $PATH every time you type a command; hash -r clears the table, which is handy if you move or reinstall a program. You can also add a single executable from outside $PATH with hash -p /path/to/program name.

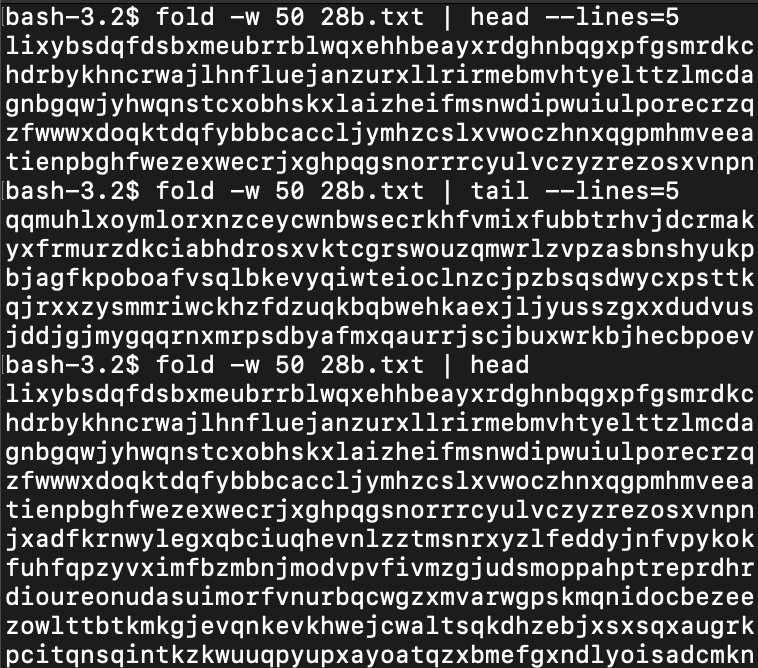

fold / head … fold wraps long lines to a given width (-w), while head prints the first lines of a file (-n), the counterpart of tail, which prints the last ones. By default head and tail print 10 lines.
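A quick sketch of fold, head, and tail on generated input (the " / " in the comments separates output lines):

```shell
#!/usr/bin/env bash
echo "abcdefghij" | fold -w 4   # abcd / efgh / ij   (wrapped every 4 characters)
seq 1 20 | head -n 3            # 1 / 2 / 3          (first 3 lines)
seq 1 20 | tail -n 2            # 19 / 20            (last 2 lines)
seq 1 20 | head | wc -l         # 10                 (the default)
```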

history … shows a history of all recent commands on bash.

hostname … shows the hostname

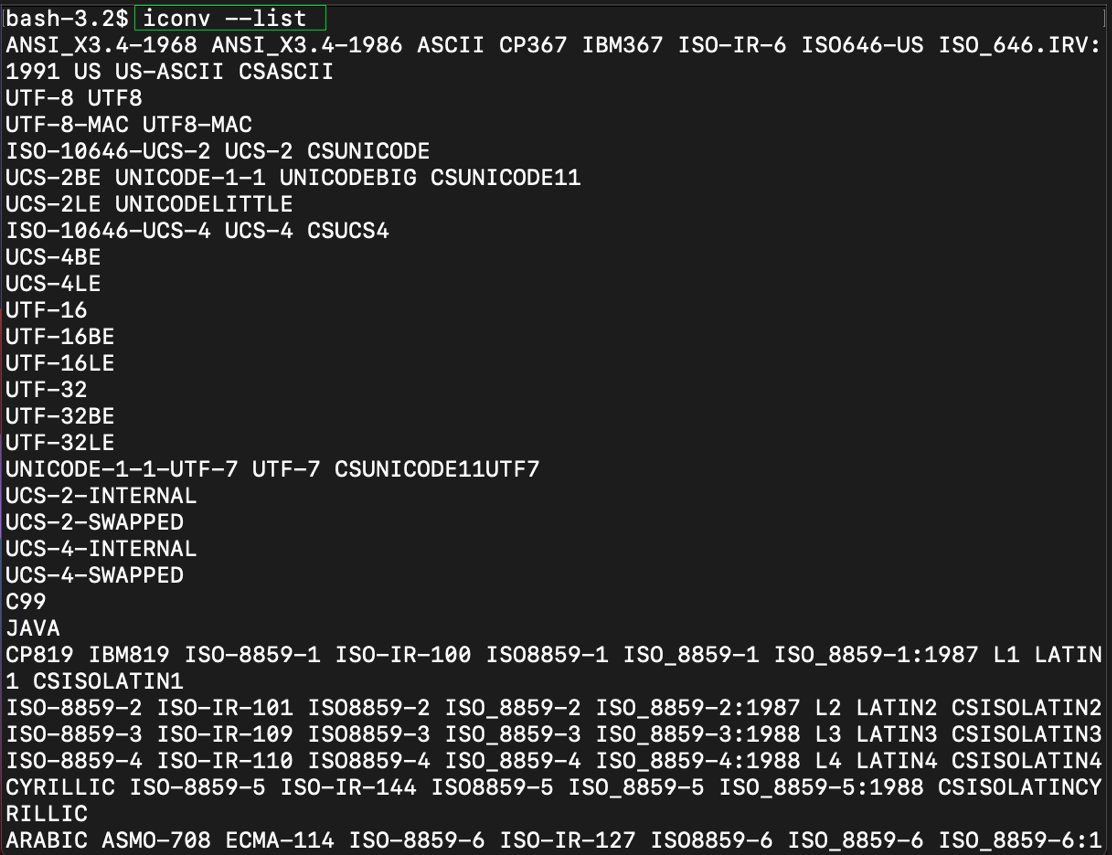

iconv … converts text from one character encoding to another, e.g. iconv -f LATIN1 -t UTF-8.

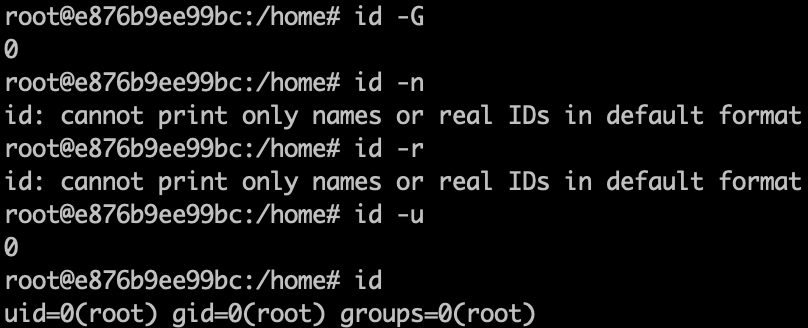

id … shows the user and group IDs of a user: -u for just the user ID (UID), -G for all of the user’s group IDs. In this example, I’m showing the root user so it’s all 0.

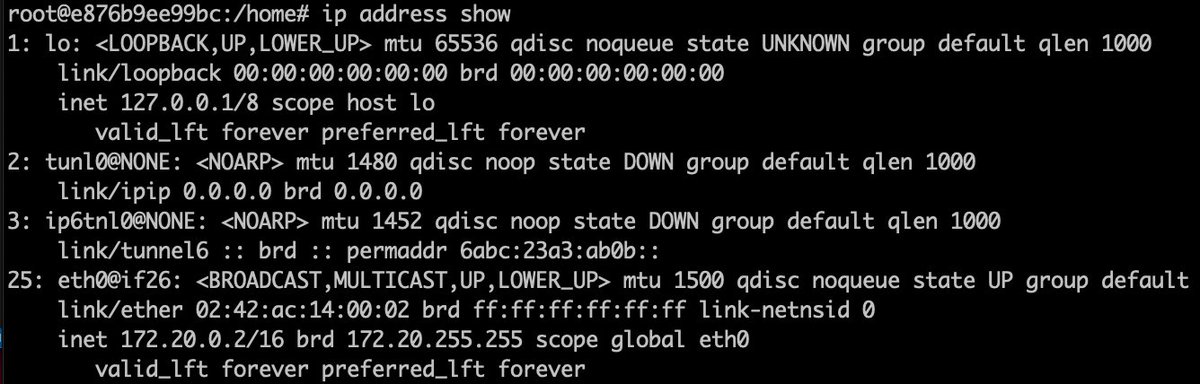

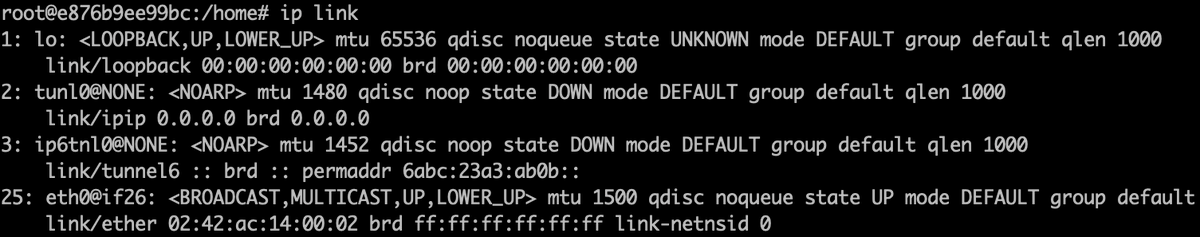

ifconfig … a way to configure network interfaces, the software side of networking devices. On many distributions you must install net-tools to use it. The -s flag shows a short list, and eth0 represents a network card. It’s been superseded by ip. https://codewithyury.com/demystifying-ifconfig-and-network-interfaces-in-linux/

ip … you must install iproute2 to use ip. Replacement for ifconfig with more capability.

jobs … lists active jobs. Note, you can’t easily set a job name as you can a process name, but you can set it indirectly: https://stackoverflow.com/questions/389473/how-do-i-control-a-jobs-name-in-bash and https://stackoverflow.com/questions/11130229/start-a-process-with-a-name

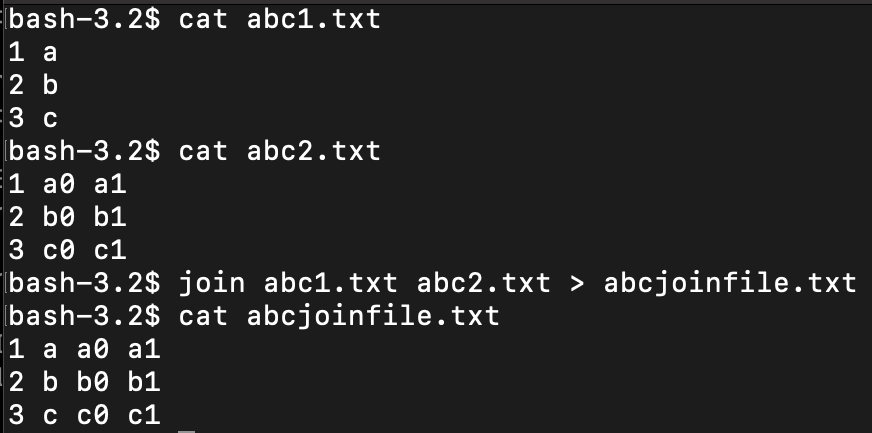

join … kind of similar to an SQL join: you take two files that are sorted on a common key field, and join combines the lines that share the same key. You can select different fields to join on with -1 and -2, and check that the input is correctly sorted with --check-order.

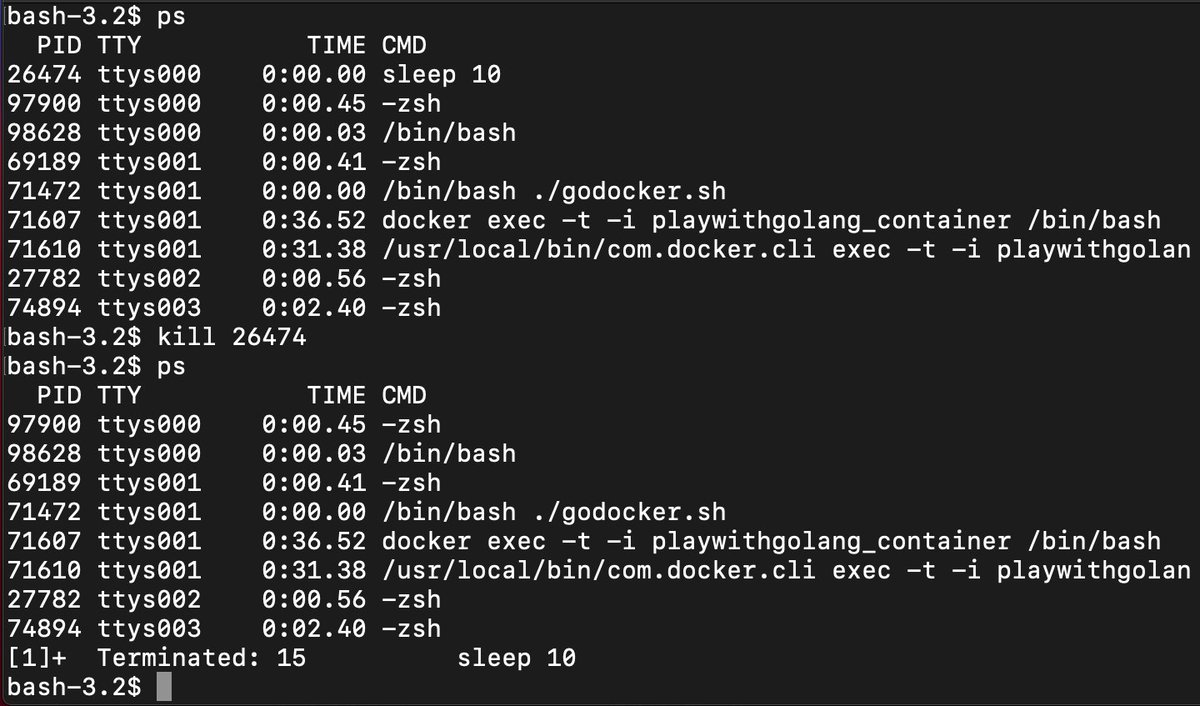
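A sketch with two made-up files keyed on the first field, both already sorted by that key. By default join behaves like an SQL inner join; -a 1 also keeps unmatched lines from the first file, like a left join.

```shell
#!/usr/bin/env bash
cd "$(mktemp -d)"
printf '1 alice\n2 bob\n3 carol\n' > names.txt
printf '1 paris\n3 rome\n'         > cities.txt

join names.txt cities.txt                 # 1 alice paris / 3 carol rome
join -a 1 names.txt cities.txt            # also keeps: 2 bob
join --check-order names.txt cities.txt   # errors out if the input isn't sorted (GNU join)
```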

kill … sends a signal to a process by PID number; by default it’s SIGTERM, which asks the process to terminate.

killall … kill ‘em all, not the Metallica album from the 80’s: it kills all processes with a given name, e.g. killall sleep.

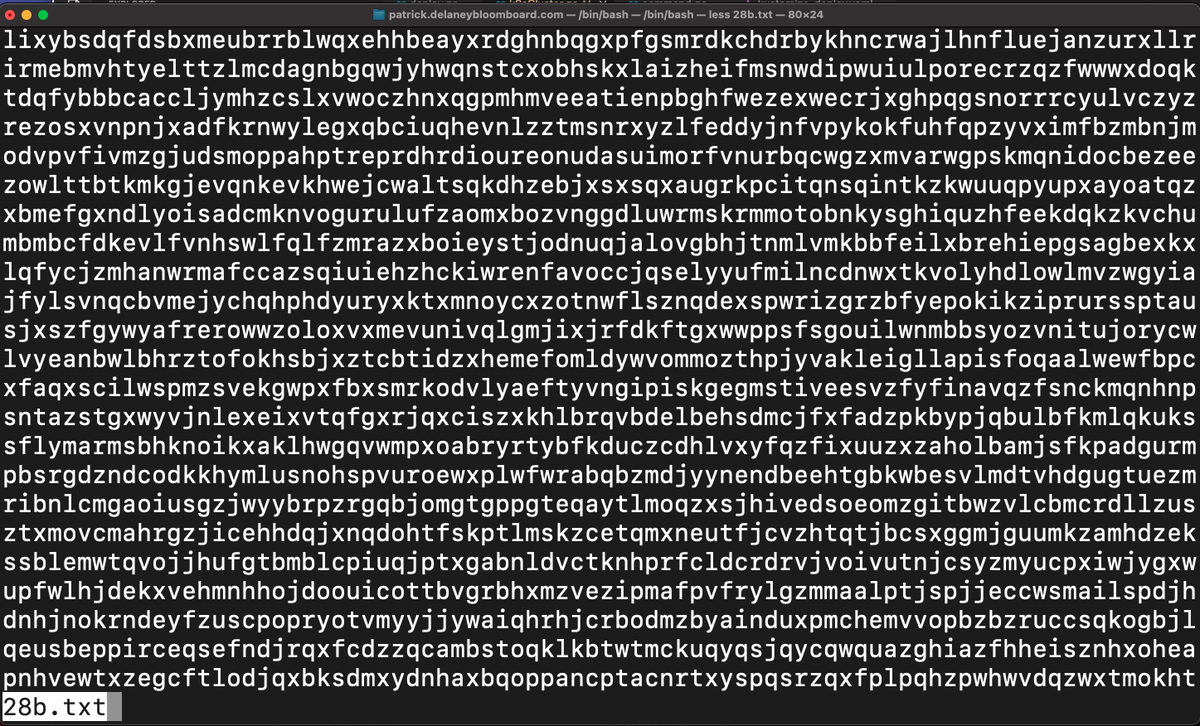

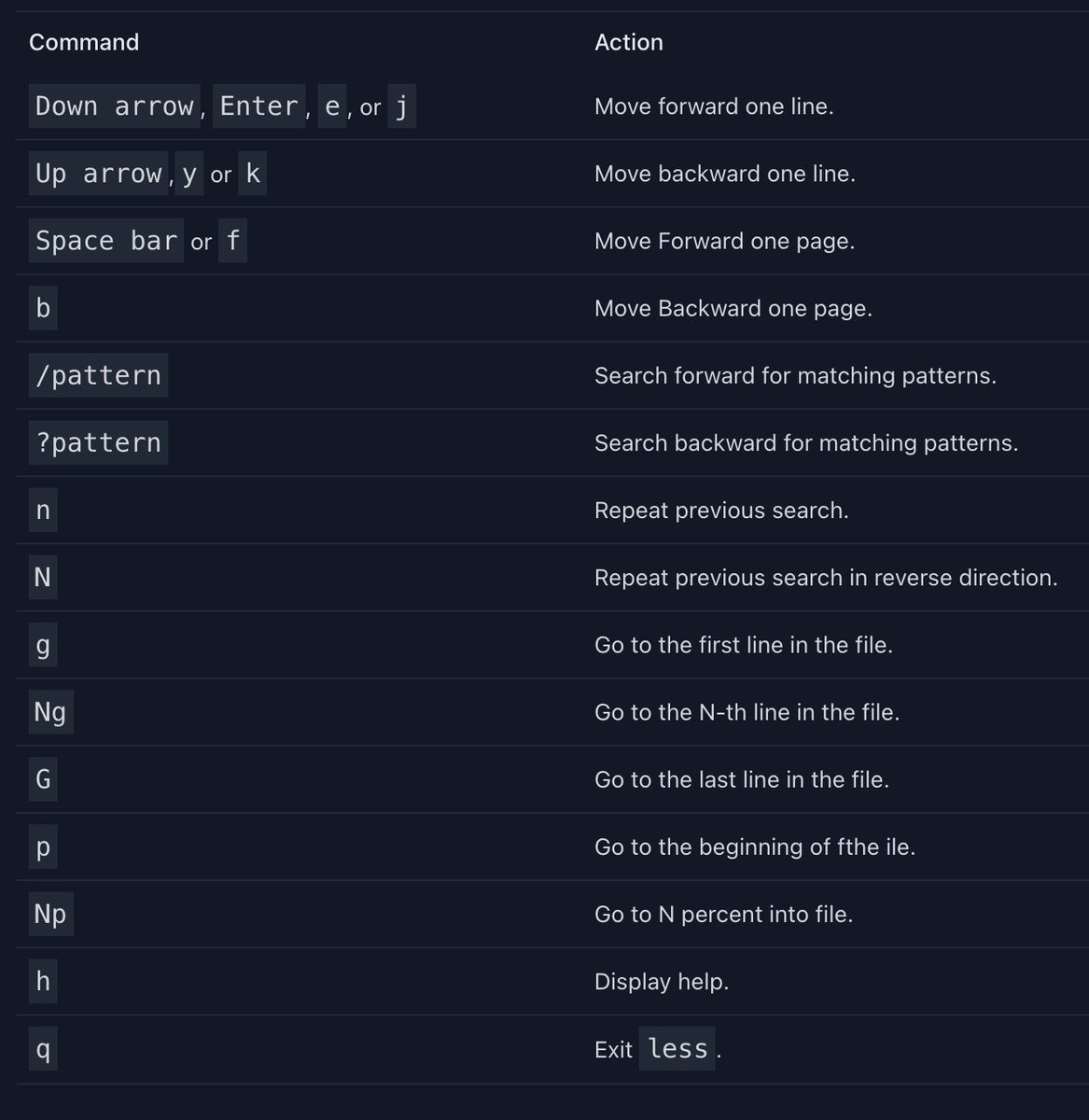

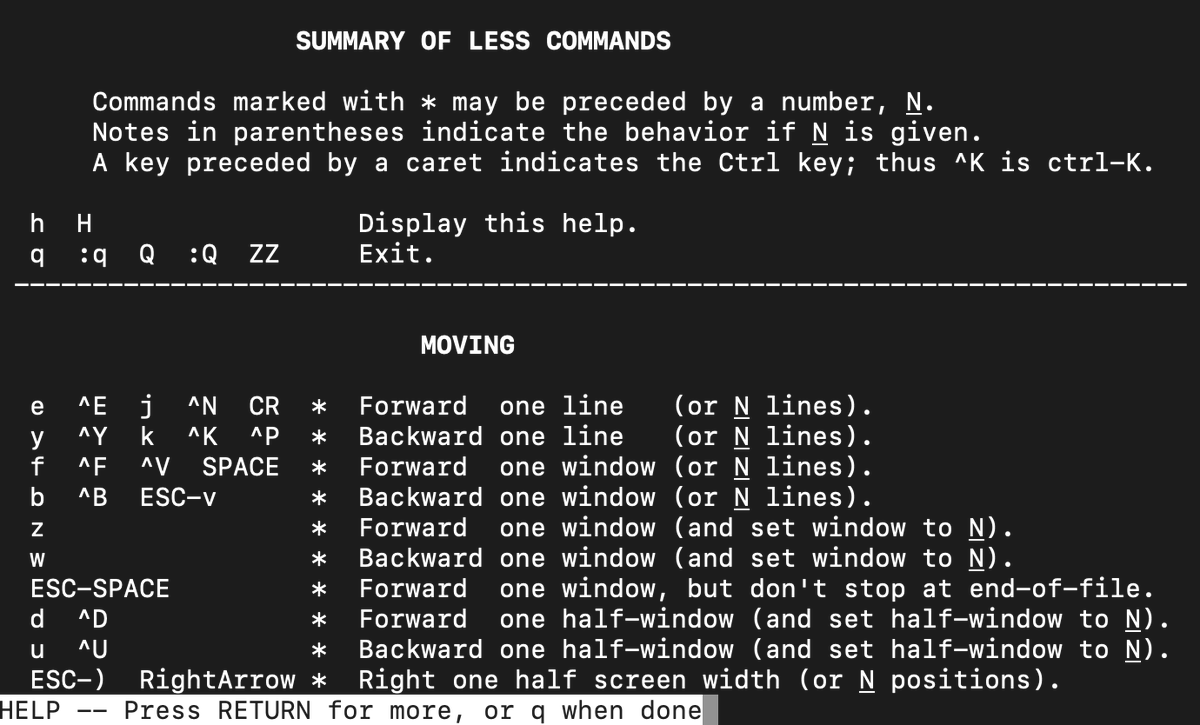

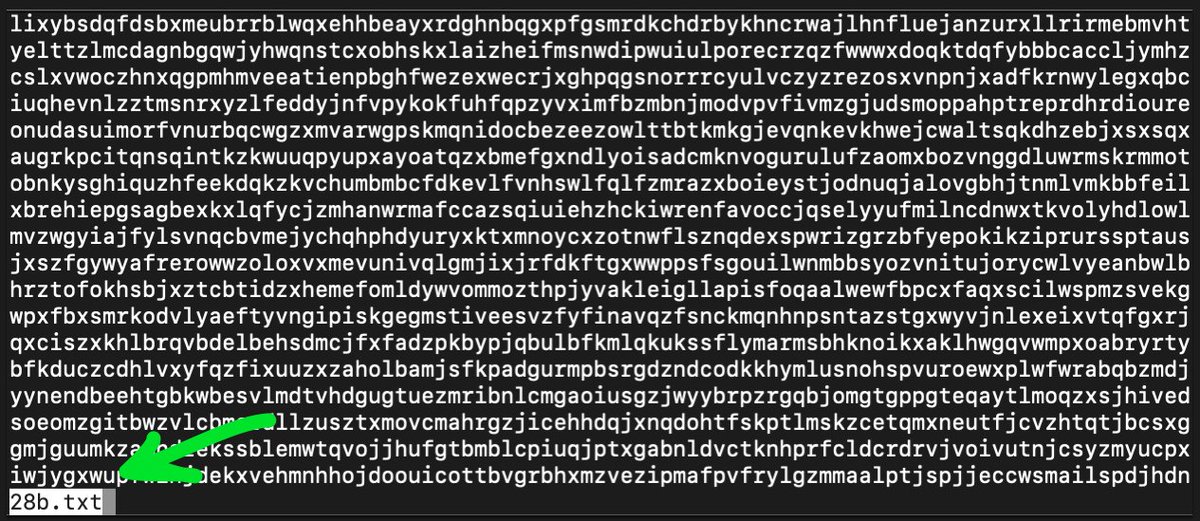

less … a pager that lets you scroll through a file, so here I did “less 28b.txt” and I can scroll through this exceedingly long incomprehensible file. The most important command is “q” to get out of there, but you can do other fancy stuff too; press “h” for help.

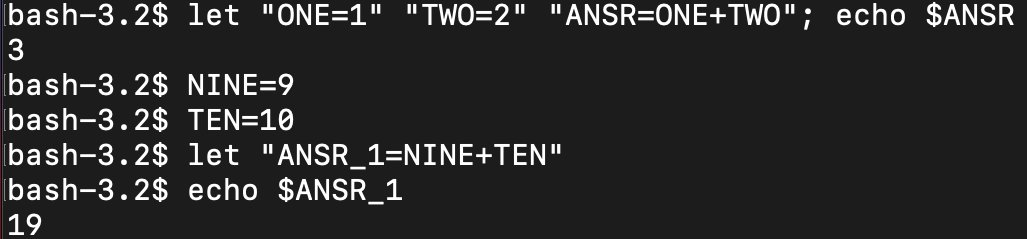

let … performs arithmetic on shell variables, either in a one-liner or as separate steps. Other calculators include expr (expression evaluator), bc (arbitrary precision), and dc (reverse Polish notation).
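A sketch of let next to the more common $(( )) arithmetic expansion; both do integer math only.

```shell
#!/usr/bin/env bash
let "x = 5 * 4"     # quoting lets you use spaces and * safely
echo "$x"           # 20
let x++             # increment in place
echo "$x"           # 21
echo $(( x / 2 ))   # 10: integer division, no decimals
```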

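link … creates a hard link: a second name for the same file, sharing the same data on disk. You can operate on the link exactly as you could the file itself, and the data stays around until every name for it has been deleted.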

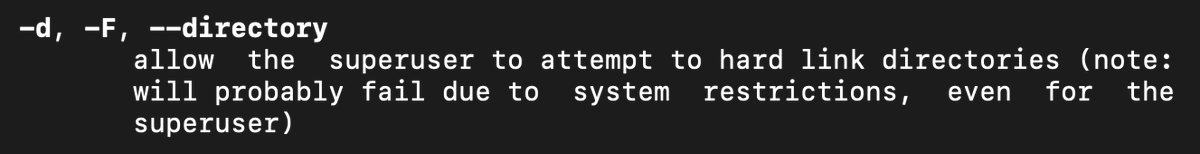

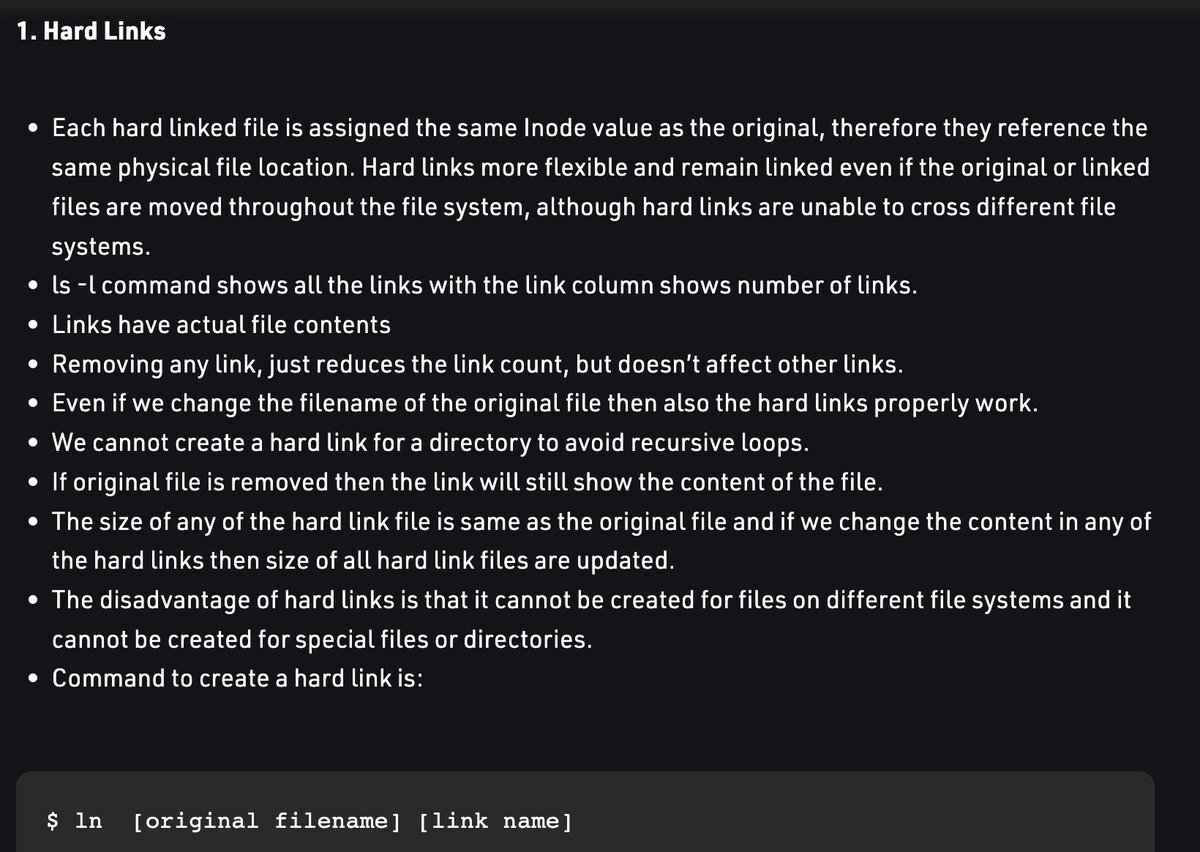

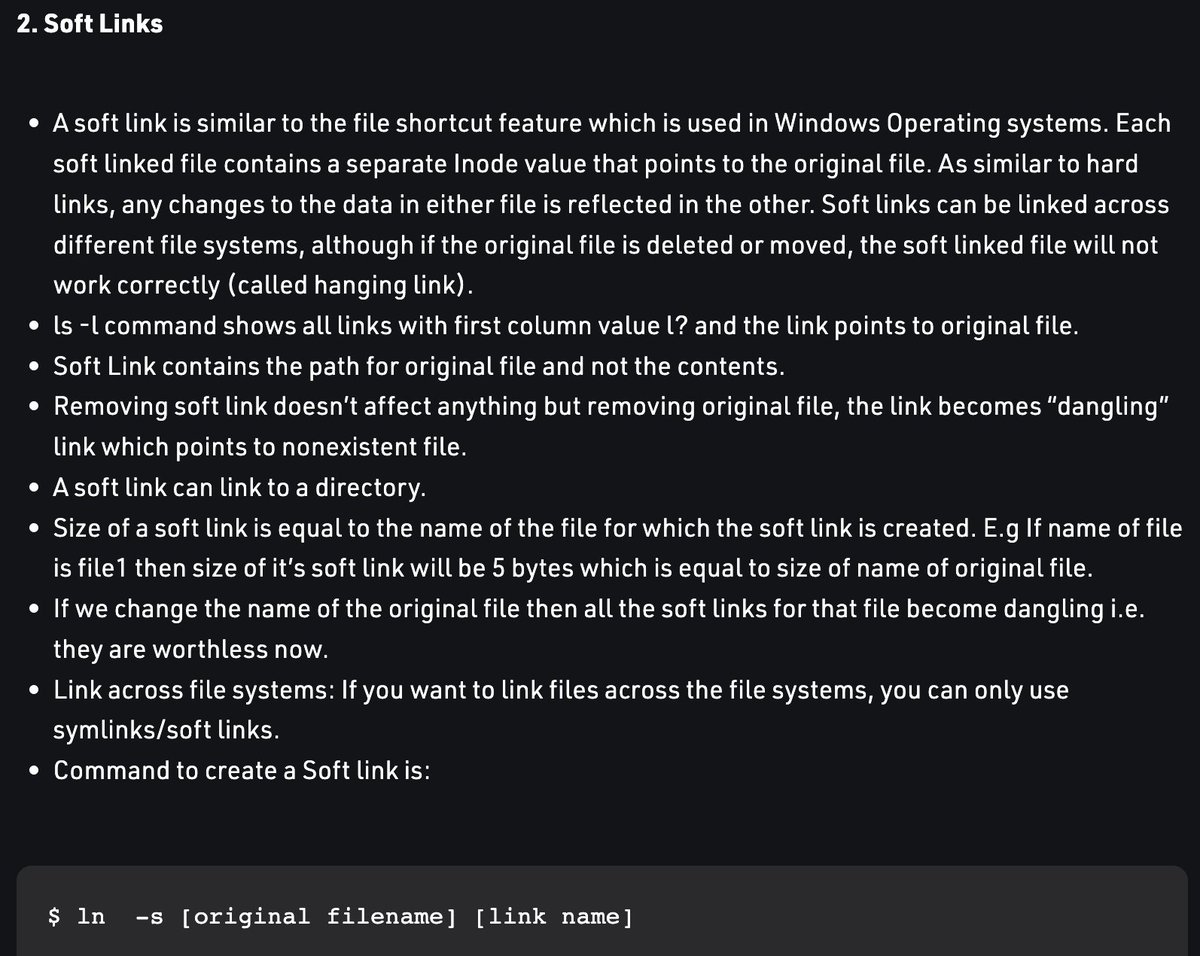

ln … link is the same as “ln --directory --no-target-directory FILENAME LINKNAME”: it simply calls the link() system function to create a hard link to a file. ln also creates hard links by default, but it has more options, most notably -s for soft (symbolic) links, which link can’t make.

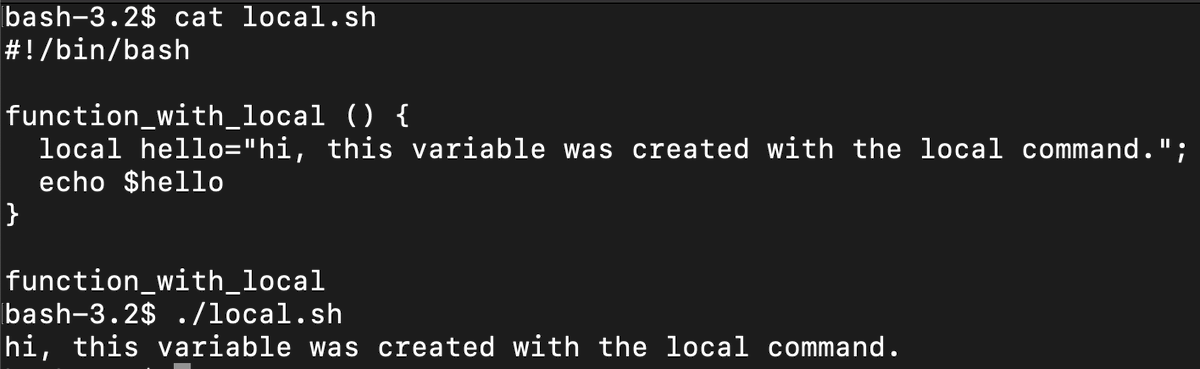

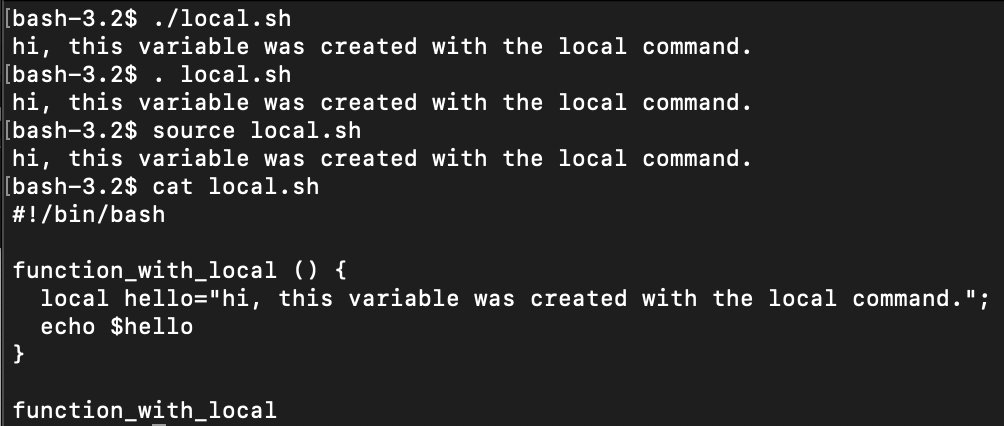
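A sketch contrasting a hard link and a symbolic link on throwaway files: deleting the original name leaves the hard link working and the symlink dangling.

```shell
#!/usr/bin/env bash
cd "$(mktemp -d)"
echo "hello" > original.txt

link original.txt hard.txt    # hard link: a second name for the same data
ln -s original.txt soft.txt   # symbolic link: points at the *name* original.txt
ls -li                        # hard.txt and original.txt share an inode number

rm original.txt
cat hard.txt                  # still prints hello: the data lives on
cat soft.txt 2>/dev/null || echo "soft.txt is now a dangling link"
```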

local … declares a variable that only exists inside a function (and in functions it calls). It only works within a function, not interactively at the command line.

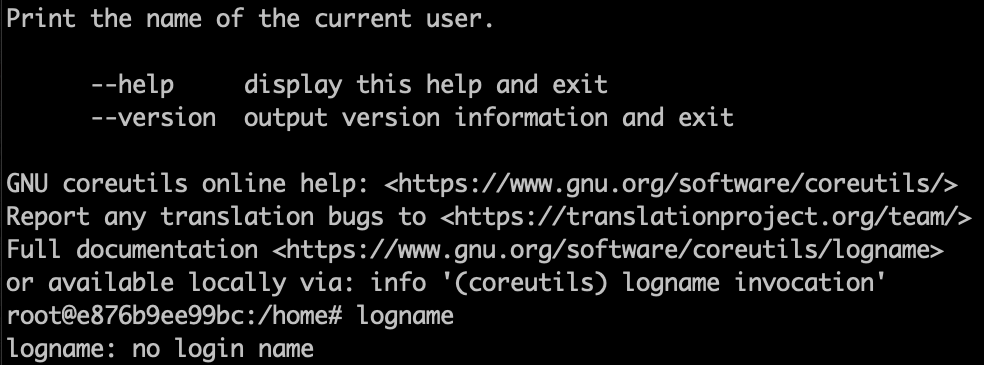
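A sketch of a local variable shadowing a global one with the same (made-up) name:

```shell
#!/usr/bin/env bash
name="global"

show_local() {
  local name="inside the function"   # shadows the outer name only in here
  echo "$name"
}

show_local     # inside the function
echo "$name"   # global: the local copy disappeared when the function returned
```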

logname … shows the login name of the current user. It’s possible to have no login name, e.g. in some containers or cron jobs, in which case logname prints an error instead.

logout … if you’re in a login-type shell, such as one that you ssh’d into, you can use logout in place of exit.

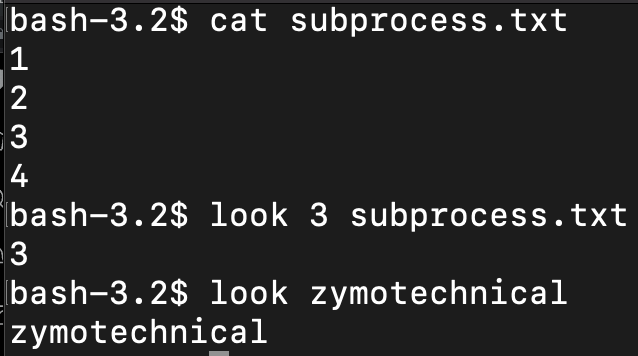

look … prints the lines of a sorted file that begin with a given string. If no file is specified, it searches the system dictionary at /usr/share/dict/words

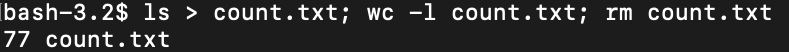

ls … surely you’ve heard of ls, used to list out files in a directory. But what about ls -1, which shows one file per line? ls -l, which shows details on each file? ls -a, which shows all files, including hidden dotfiles?

lsof … lists open files. In linux/unix “everything is a file,” so any file a program has open, including network sockets and devices, will be shown along with the process using it.

man … the help manual. Use “man command” for any command and this will give you a much more detailed version of “command -h” or “command --help”, or in the case of builtins, “help command”.

mkdir … make directory, makes a folder, or as they are called in linux/unix terms, “directories.”

| mkfifo … in linux, the pipe command sends the output of one command to another for further processing. The, “unnamed pipe,” is | . This pipe cannot be accessed by another session, it just gets created temporarily and is deleted after execution. |

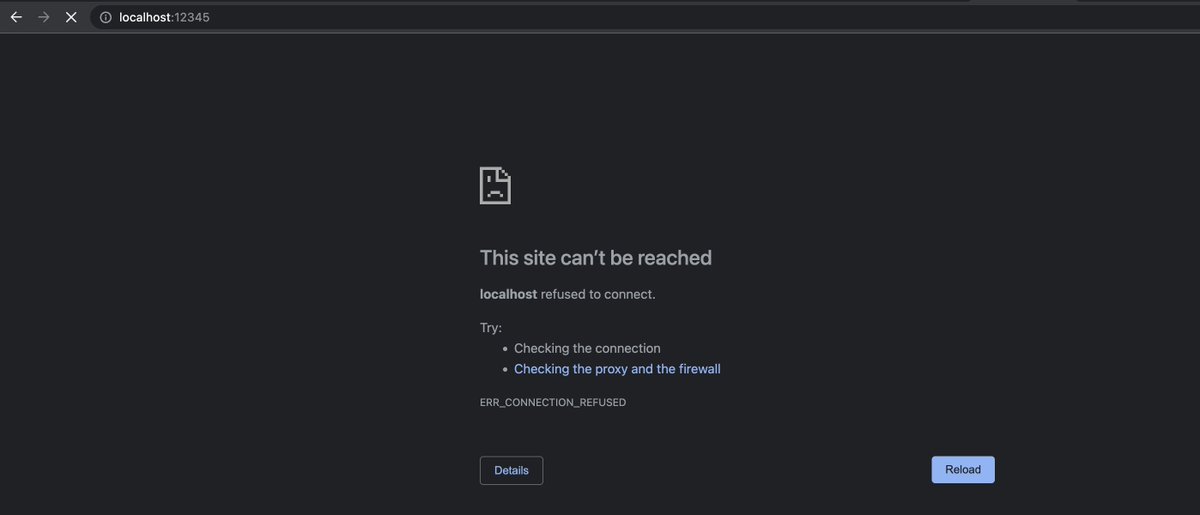

| mkfifo … so if you do the command: ```nc -l 12345 | nc https://www.google.com/ |

80``` this will create a server on port 12345 such that if you visit localhost:12345 on your browser, it will pipe that request to google and the response will be sent to the shell, not the browser.

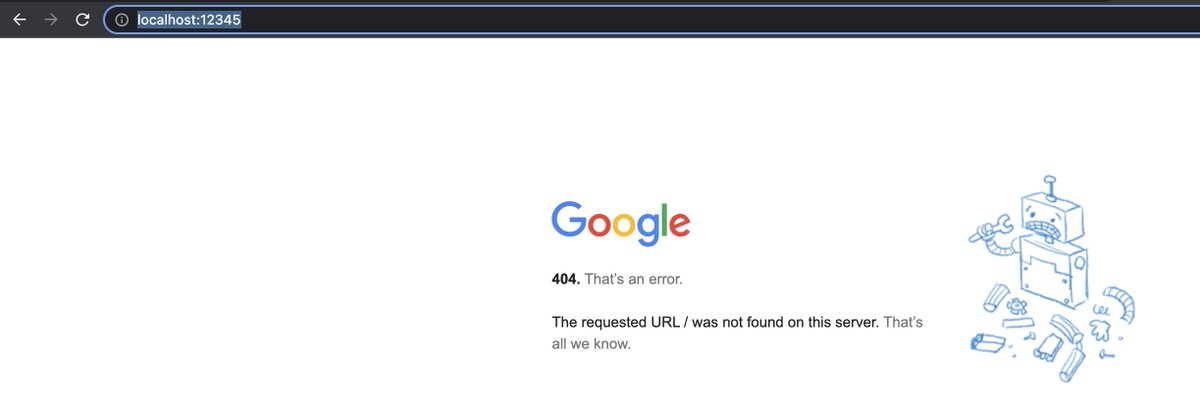

| mkfifo … however if we use, “mkfifo examplepipe” and then ```nc -l 12345 0<examplepipe | nc https://www.google.com/ |

80 1>examplepipe``` this will redirect the standard output 1> to examplepipe.
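A smaller, self-contained sketch of a named pipe, without the network: a background writer and a reader meet through examplepipe. Opening a FIFO blocks until both ends are open, which is why the writer runs in the background.

```shell
#!/usr/bin/env bash
cd "$(mktemp -d)"
mkfifo examplepipe                               # shows up in ls -l with type "p"

echo "hello through the pipe" > examplepipe &    # writer waits for a reader
read -r line < examplepipe                       # reader receives the line
wait                                             # let the background writer finish
echo "got: $line"                                # got: hello through the pipe
```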

mknod … creates a named pipe (FIFO), block special file, or character special file. mknod NAME p is essentially the same as mkfifo NAME.

mknod … what is a block/character special file? Normally when a file is written to or read from, the Linux kernel goes through a filesystem driver, which maps the file onto blocks on a disk. But if the file represents a device, such as a USB drive, the request is handed to the driver for that device instead.

mknod … block special files are like ordinary files: an array of bytes with a first and last position that you can jump around in. Character special files are serial streams that behave more like pipes. What a USB drive or other device actually does with that data is up to its driver.

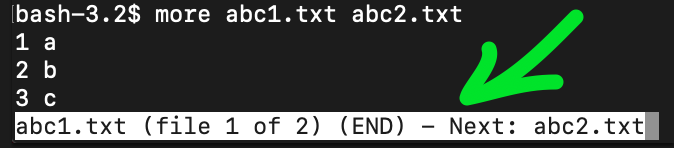

more … a text viewer, similar to less. With both “more” and “less” you can view multiple files and scroll through them sequentially, but more seems to be a bit more intuitive than less when working with multiple files.

most … similar to more and less, but it has an interactive scroll bar which shows you how much of the file you have scrolled through.

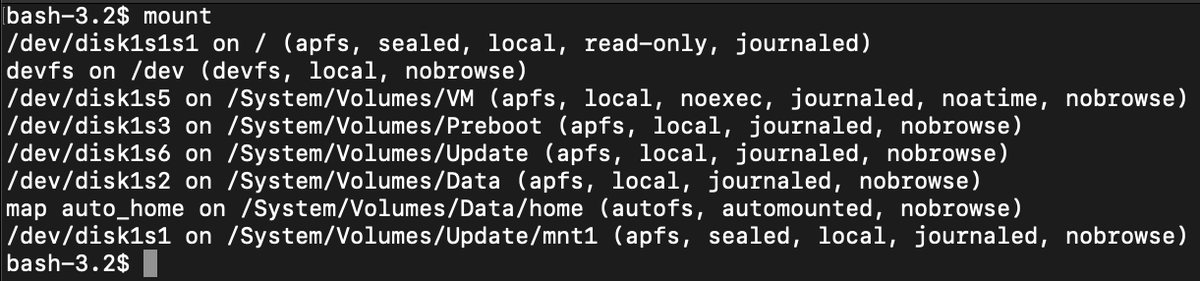

mount … allows the linux or unix based system to mount a filesystem such as a USB drive. Calling the mount command by itself with no target gives information on the currently mounted filesystems.

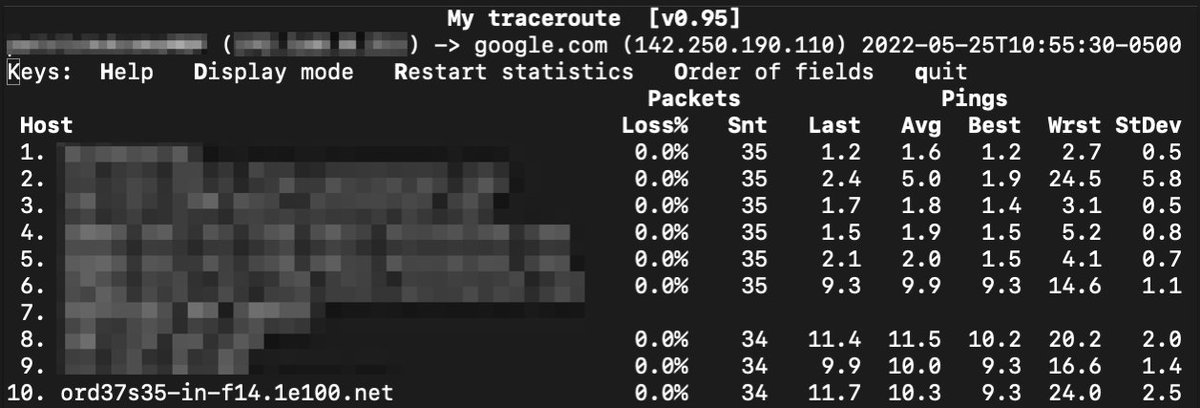

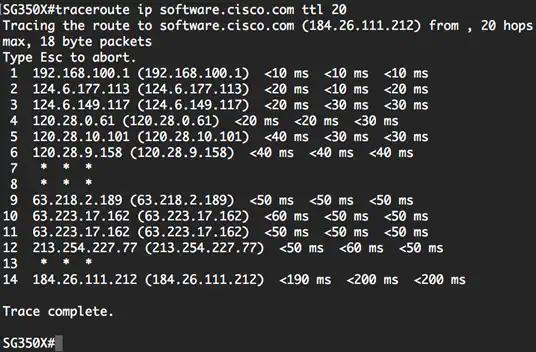

mtr … runs a traceroute, which maps how data travels across the internet from its source to its destination, including the routers in between. It’s a combination of ping and traceroute: it keeps pinging each hop along the route and continuously updates the statistics.

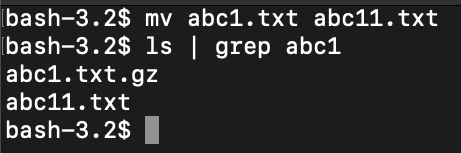

mv … moves files, but can also be used to rename files as shown in this example.

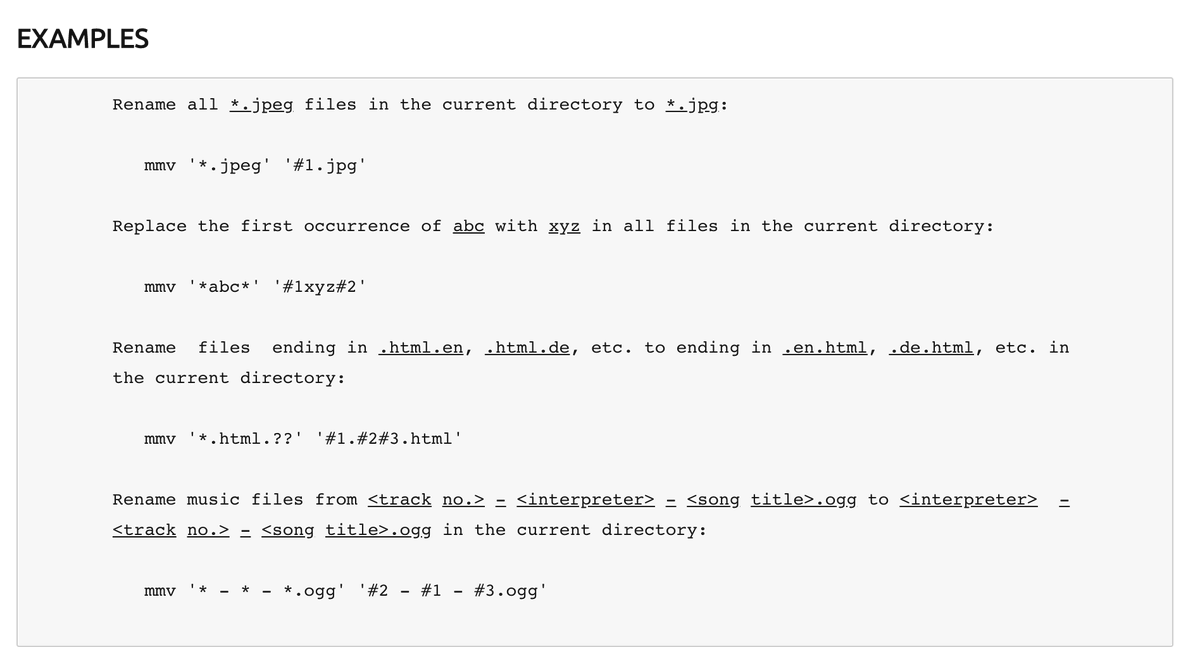

mmv … similar to mv, but allows you to do mass operations, for loops in a sense by using a simple pattern-based language: https://manpages.ubuntu.com/manpages/bionic/man1/mmv.1.html

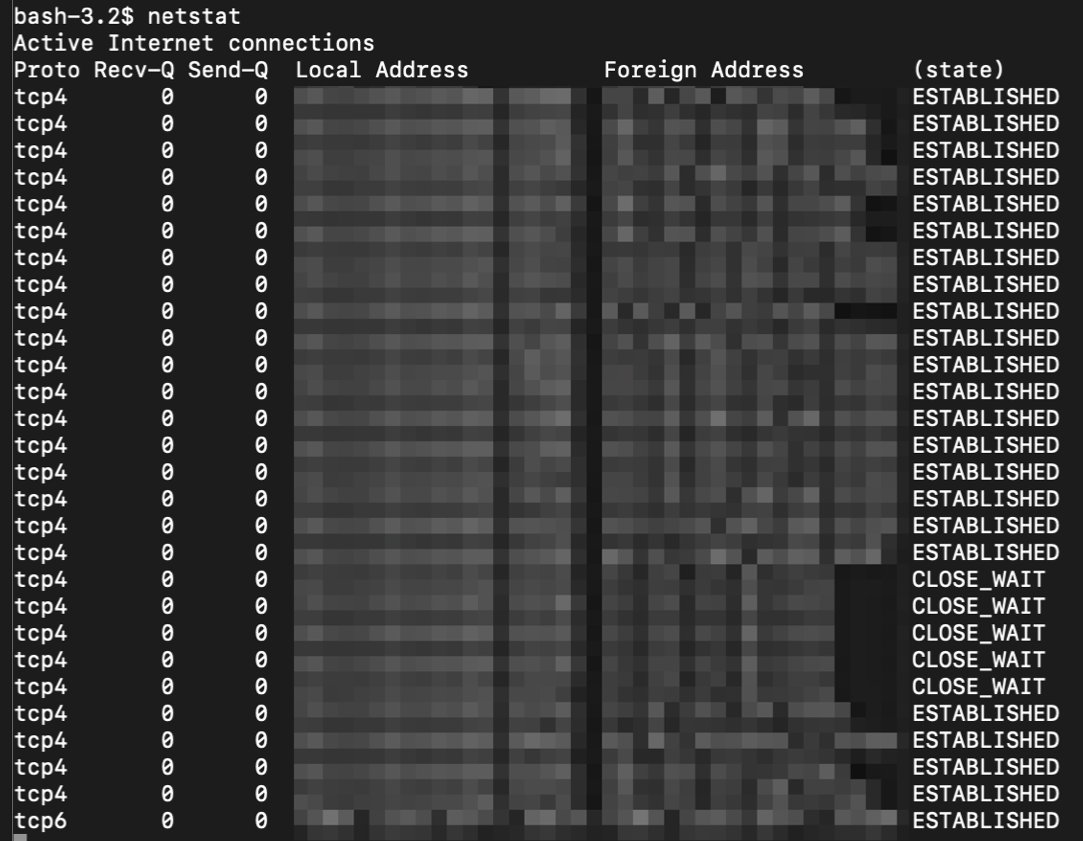

netstat … displays various network information. There are different ways to structure the data depending upon the options used. The most common, no-flag version is shown here: a list of active sockets for each protocol, here mostly tcp4.

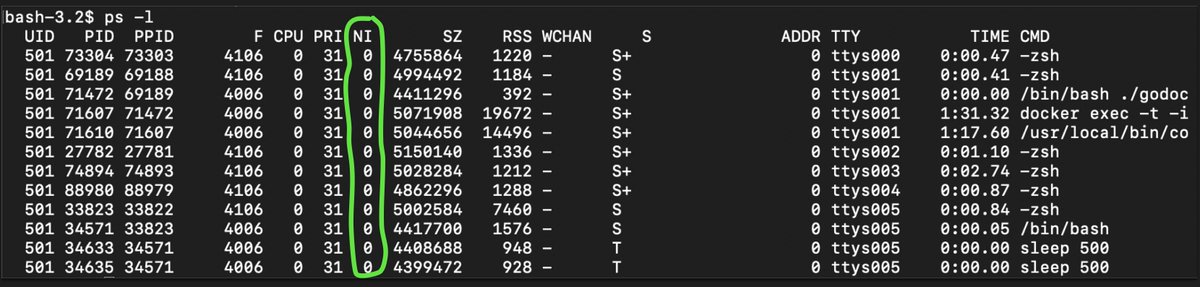

nice … runs a command with a given CPU scheduling priority. A niceness of -20 is the highest priority and 19 is the lowest. The niceness value of a process can be found under the “NI” column after running ps -l. https://en.wikipedia.org/wiki/Nice_(Unix)

nl … essentially cat with numbered lines (by default it doesn’t number blank lines).

nohup … run a command such that it will not abort when you logout or exit from the shell. Basically it’s a way to keep commands running even if you are disconnected.

nslookup … queries DNS name servers to look up records, such as IP addresses, for a domain name.

open … opens a file with its default application. In my case I did “open whatever.txt” and it opened Mac TextEdit, since I’m on a Mac at the moment (on Linux desktops the rough equivalent is xdg-open).

op … operator access allows root to give root privileges on specific commands to other users in the system. A good example is given on the man page. https://linux.die.net/man/1/op

passwd … allows you to change the password on your own user or other users if you have permissions.

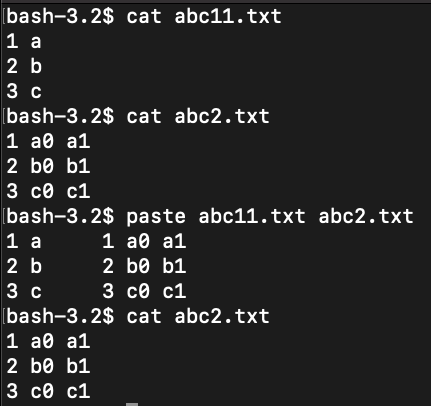

paste … merges files line by line, side by side. It doesn’t change the files; output goes to stdout, so if you wanted the results in a file you would redirect with > whateverfile.txt

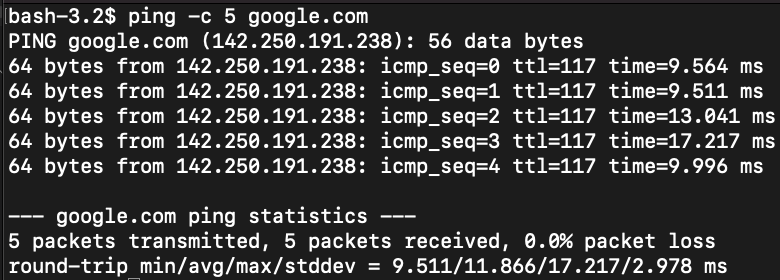

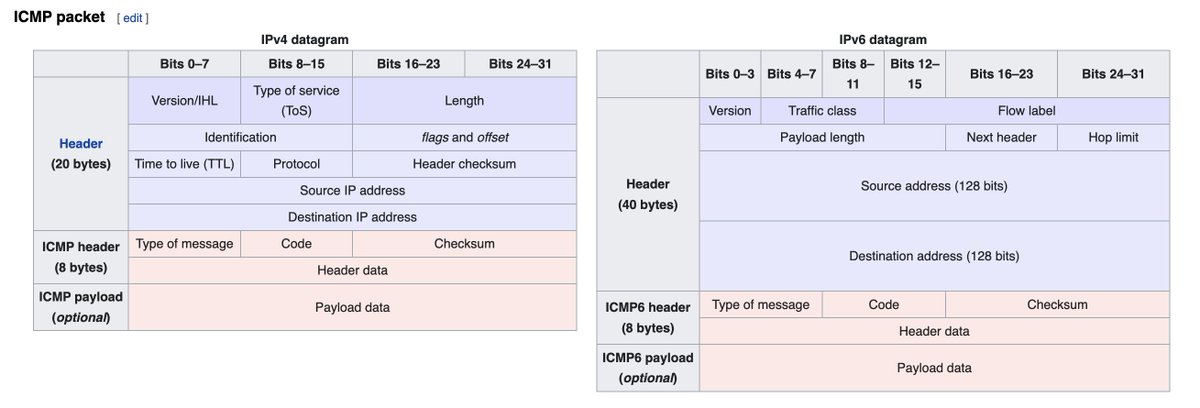

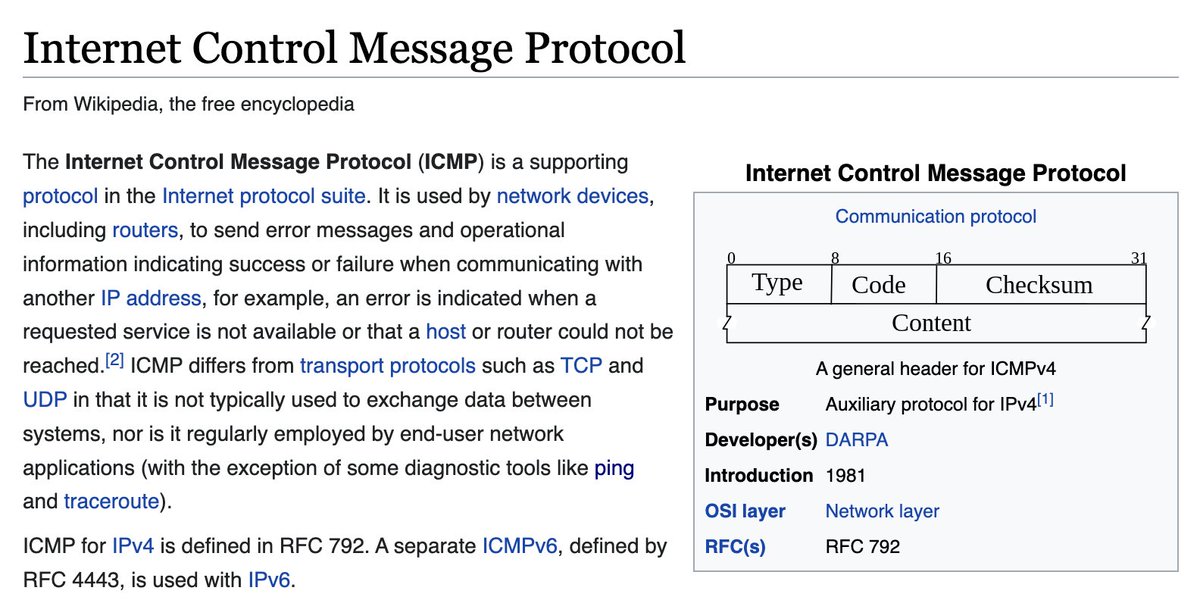

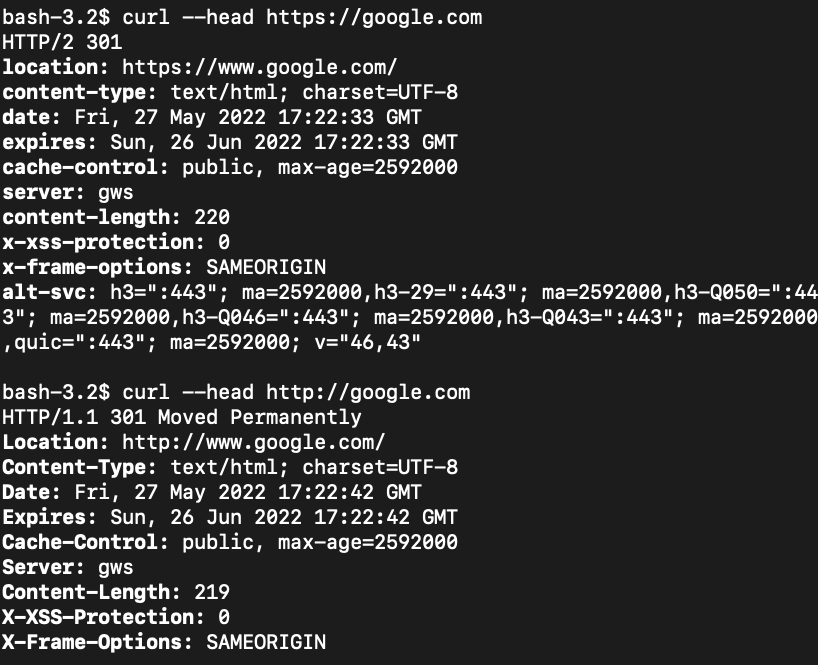

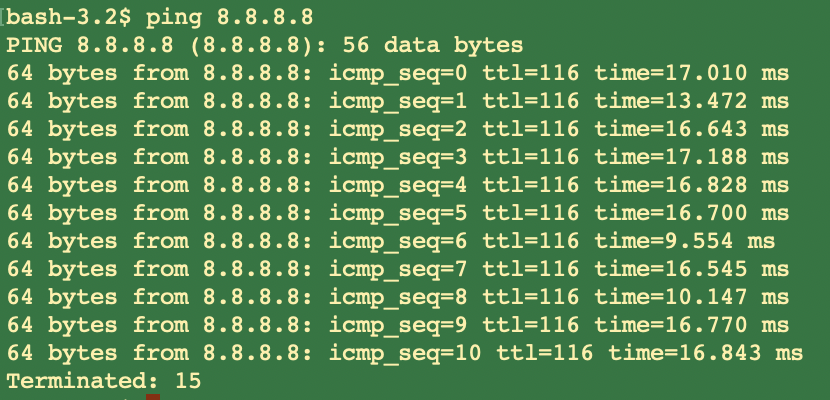
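A sketch of paste on two made-up files, including the redirect into a file and -s, which joins one file’s lines into a single row:

```shell
#!/usr/bin/env bash
cd "$(mktemp -d)"
printf 'alice\nbob\n' > names.txt
printf '90\n75\n'     > scores.txt

paste names.txt scores.txt                    # alice<TAB>90 / bob<TAB>75
paste -d, names.txt scores.txt > merged.csv   # comma instead of tab, saved to a file
cat merged.csv                                # alice,90 / bob,75
paste -s -d, names.txt                        # alice,bob
```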

ping … tests whether a host is reachable and measures statistics such as round trip times. What’s interesting about ping is that it doesn’t use a transport protocol like TCP: it sends ICMP echo request messages and waits for echo replies, and ICMP is a control-message protocol, so it doesn’t carry application data.

ping (continued) … so ping can’t return HTTP status codes; it just gives timing information. Many hosts and firewalls also block ICMP, so a failed ping doesn’t necessarily mean a host is down, and it’s not the most reliable network connectivity test.

ping (continued) … ping is more of a quick and easy test. More thorough would be “curl --head https://t.co/qJwLSLMUnU”, which shows the actual response status codes.

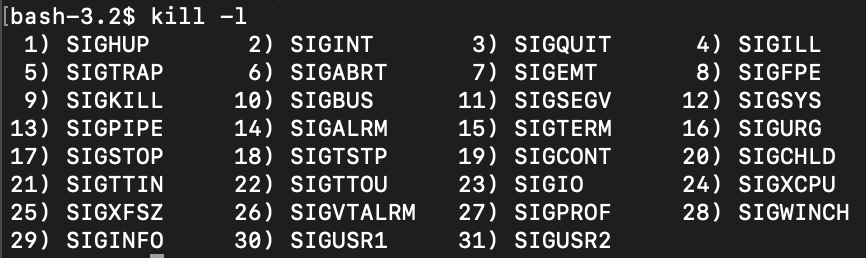

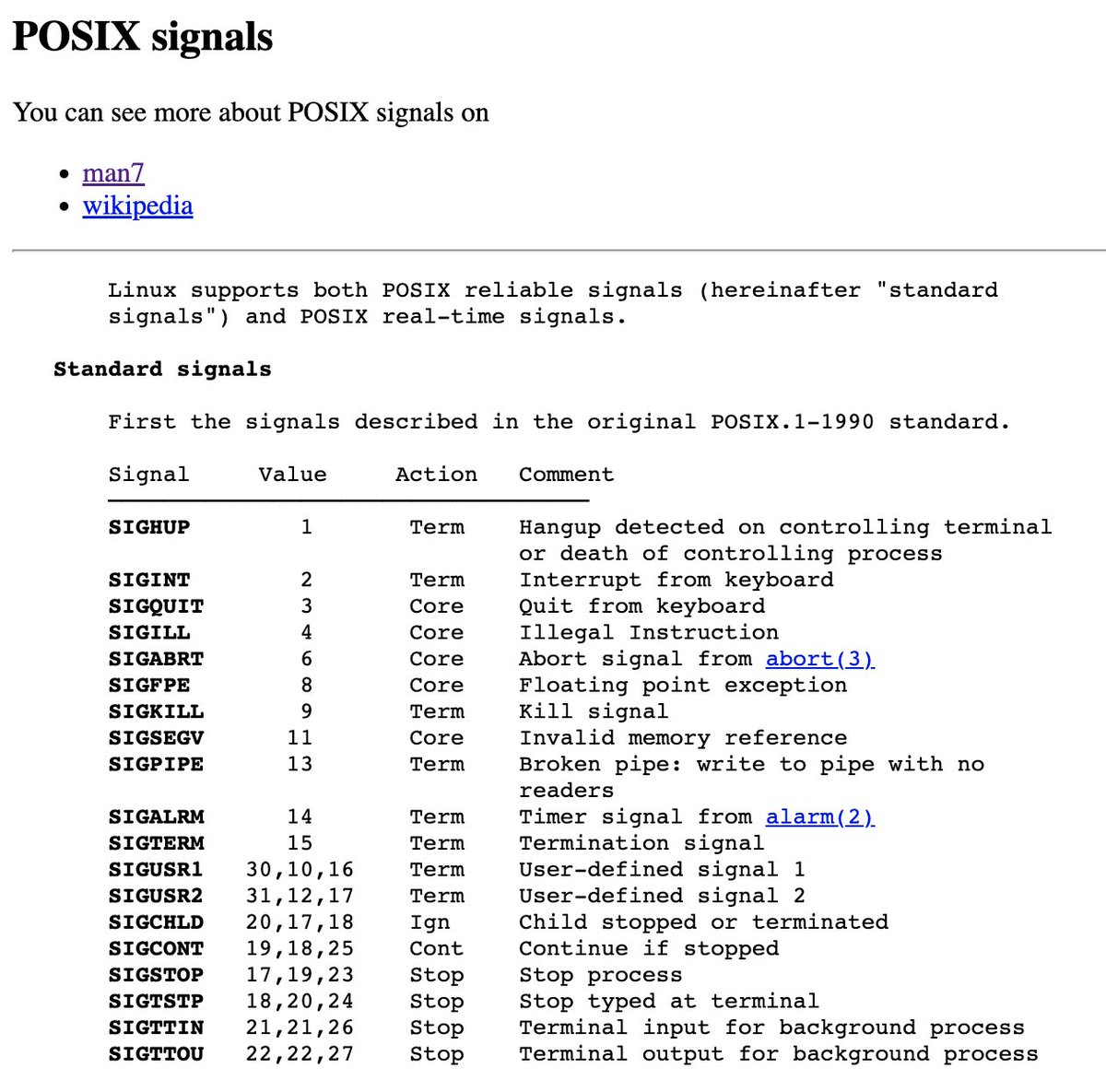

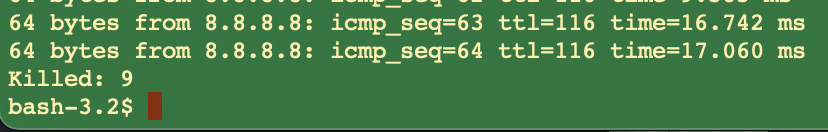

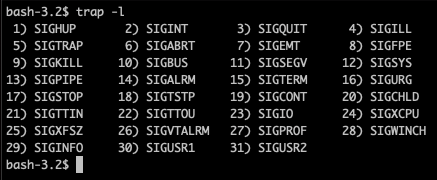

pkill … so first off, if you run “kill -l” it gives you a list of POSIX signals, https://www.man7.org/linux/man-pages/man7/signal.7.html, which are a type of signal https://t.co/cBEs21vCsC: basically an asynchronous notification sent to a program while it’s running, a “button press” so to speak.

pkill … sends a signal to processes by name. For example, if you run “ping 8.8.8.8” in another tab, you can see the process running. If you do “pkill -9 ping”, this will kill that ping process in the other tab with signal 9, SIGKILL, which ends the process immediately and can’t be caught or ignored.

pkill (continued) … if you use “pkill -15 ping” instead, that’s signal 15, SIGTERM, a “graceful shutdown” request that lets the program clean up before exiting. These are just different ways of shutting down applications; -15 is the default.

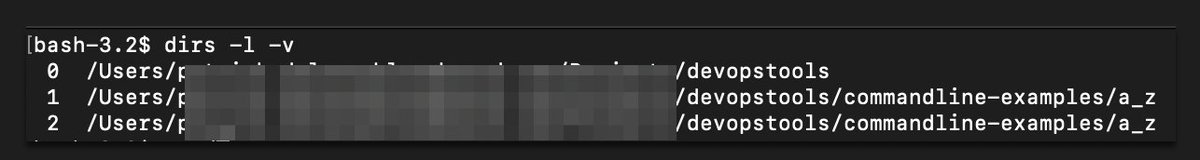

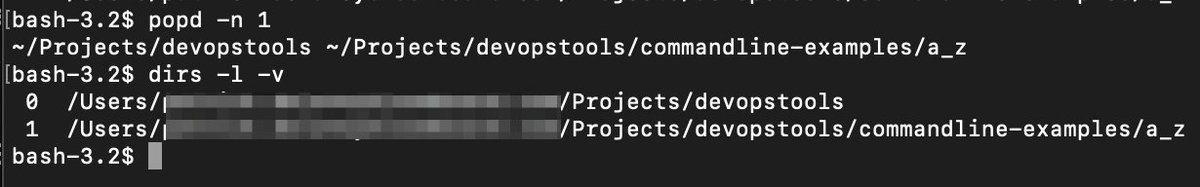
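pkill picks processes by name; to keep this sketch self-contained, it uses plain kill on background sleep processes the script starts itself, which sends the same signals. When a process is killed by signal N, bash reports its exit status as 128 + N.

```shell
#!/usr/bin/env bash
sleep 100 &                         # a throwaway background process
pid=$!                              # $! is the PID of the last background job
kill -15 "$pid"                     # SIGTERM: polite request to shut down
wait "$pid"; term_status=$?
echo "after SIGTERM: $term_status"  # 143 = 128 + 15

sleep 100 &
pid=$!
kill -9 "$pid"                      # SIGKILL: cannot be caught or ignored
wait "$pid"; kill_status=$?
echo "after SIGKILL: $kill_status"  # 137 = 128 + 9
```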

popd … to know popd, you have to know pushd, which changes to a directory and pushes it onto a stack (list) of directories. Push directories with “pushd directory_name”, then look at the stack with “dirs -l -v”. popd removes a directory from the stack (the top one by default, or one by number, like popd +1) and changes to the new top.
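A sketch of the directory stack using /tmp and /usr, which exist on most Unix-like systems; pushd and popd print the stack themselves, so that output is hidden here.

```shell
#!/usr/bin/env bash
cd /
pushd /tmp > /dev/null   # go to /tmp, remembering /
pushd /usr > /dev/null   # go to /usr, remembering /tmp
dirs -l -v               # 0 /usr, 1 /tmp, 2 /  (top of the stack first)
popd > /dev/null         # drop /usr from the stack and go back to /tmp
pwd                      # /tmp
```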

printenv … prints out all of your environment variables.

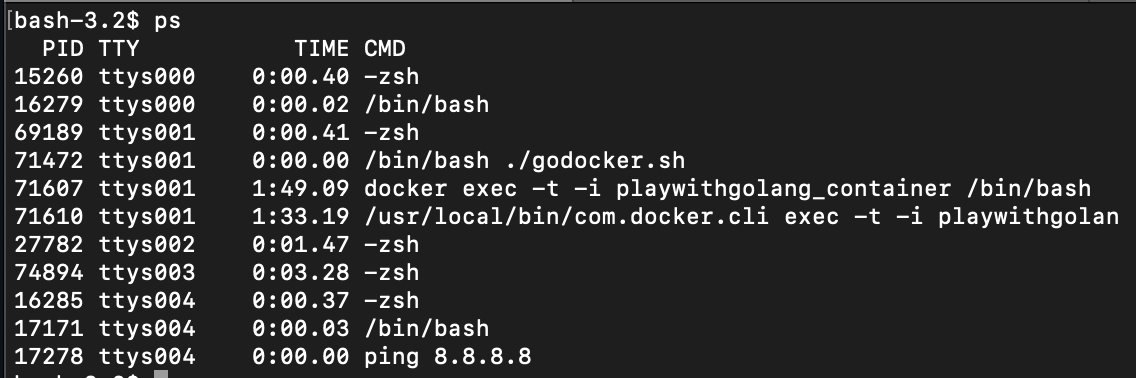

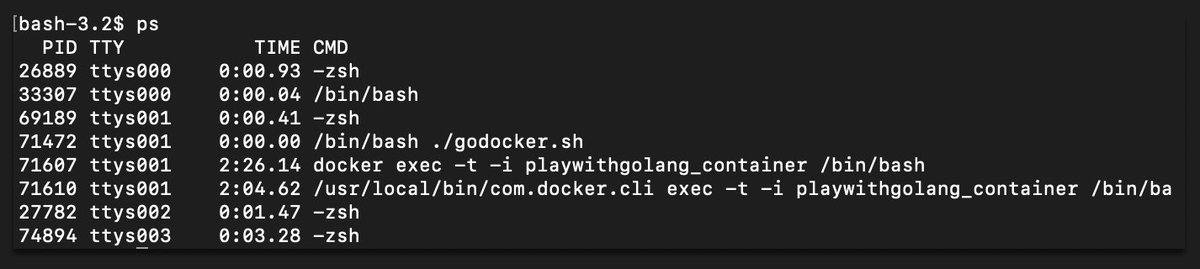

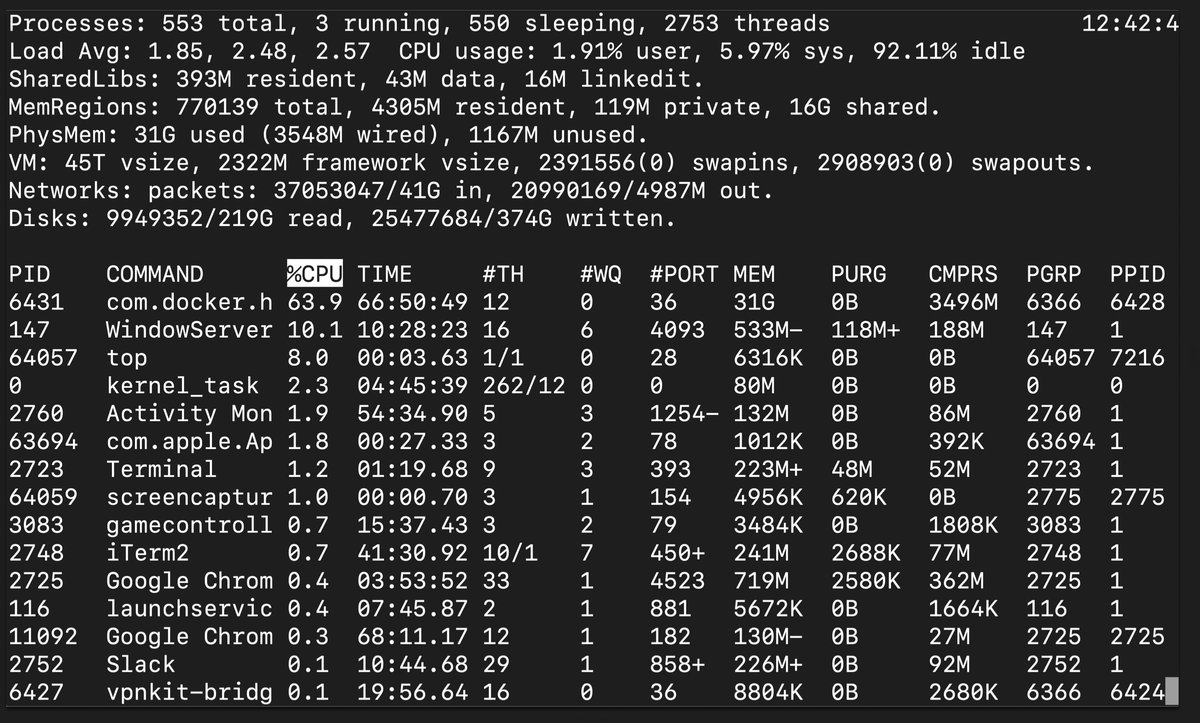

ps … shows all of your currently running processes, along with their PID numbers, etc. Similar to running, “docker ps” but for your actual machine.

pushd … see popd directly above.

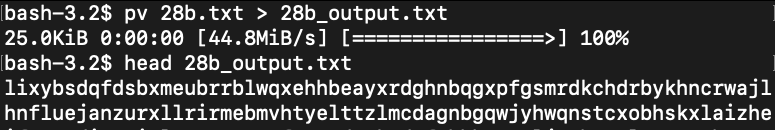

pv … monitors the progress of data through a pipe. If you are copying one file to another, or doing some kind of operation after a pipe, you can watch the progress as a percentage from 0-100% (when pv knows the total size).

pwd … print out the current directory.

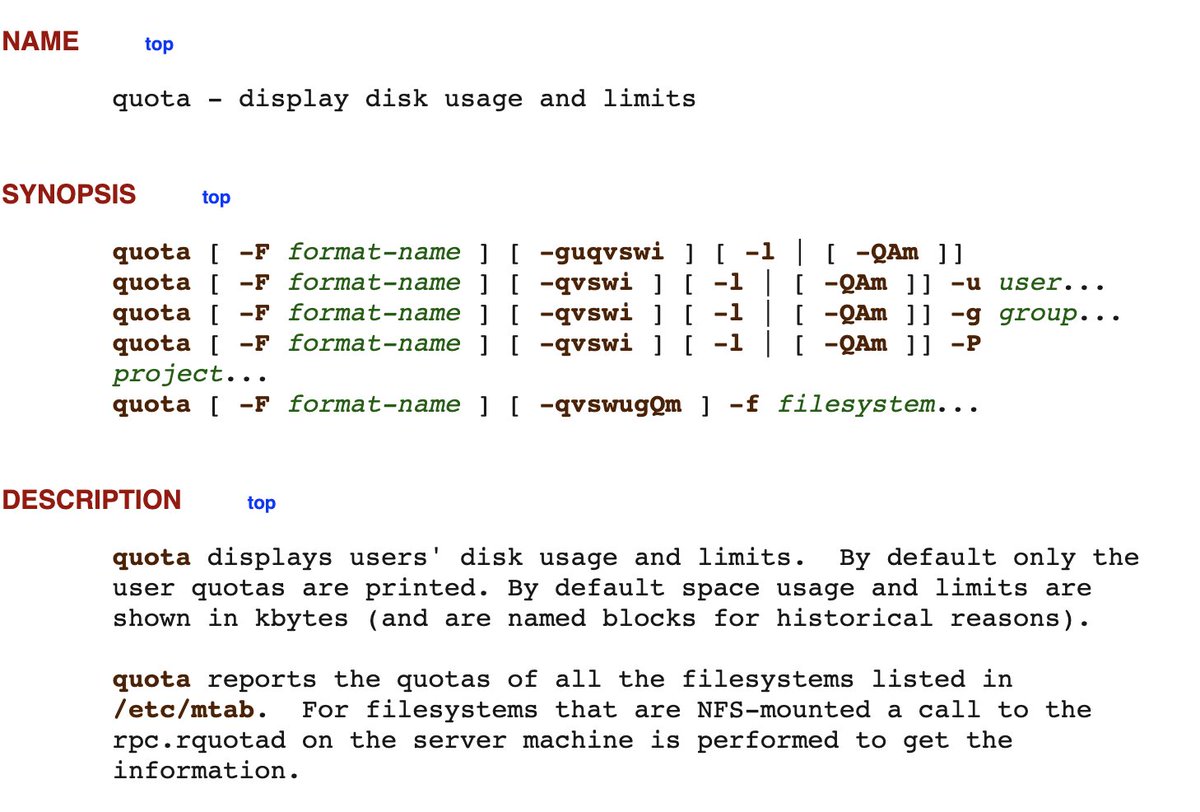

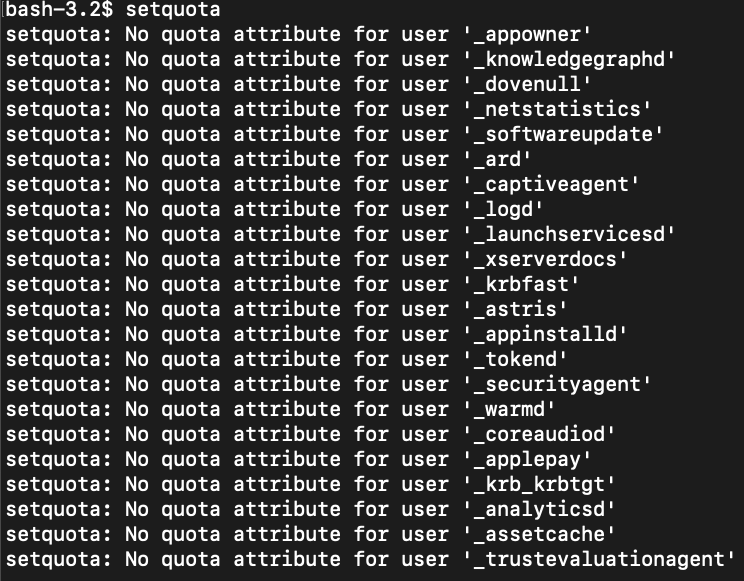

quota … displays a user’s disk usage and limits, if they are set. This would be used together with “setquota”, which sets those quota levels with multiple options: “setquota -u [username] [soft disk limit] [hard disk limit] [soft inode limit] [hard inode limit] [partition]”.

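quotacheck … scans a filesystem and builds or repairs the quota files that quota reads from.

quotactl … the system call that quota tools such as setquota use under the hood, rather than a command you’d normally run yourself.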

rcp … is the legacy remote copy protocol tool for copying files between machines. This has been replaced by scp.

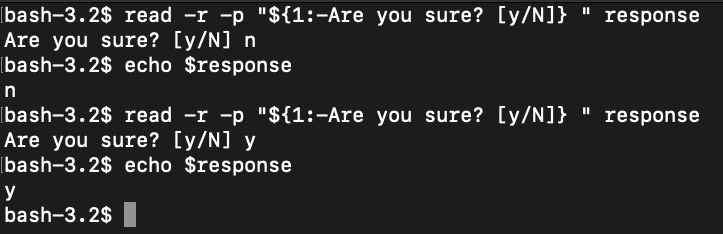

read … reads a line from stdin into one or more variables, which lets you build a simple interactive interface (read -p "prompt" var) or parse input.

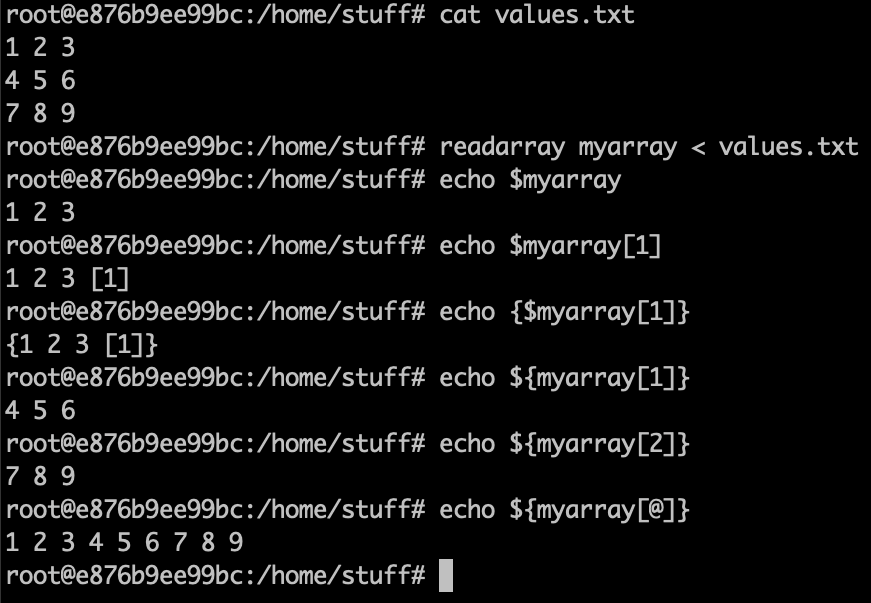

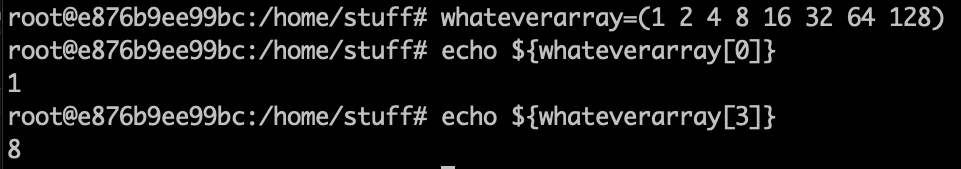

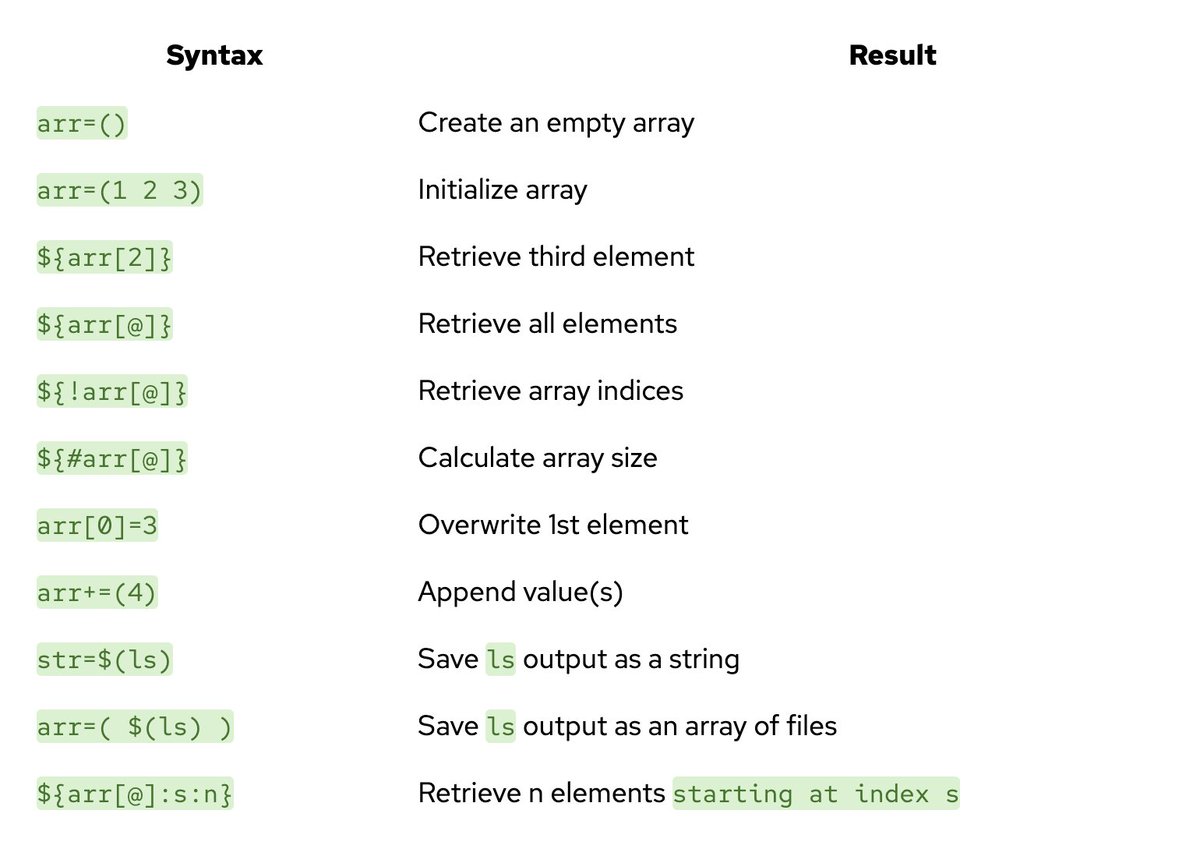
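A sketch of read splitting input into variables, fed from here-strings so it runs without typing; the names and data are made up.

```shell
#!/usr/bin/env bash
read -r first last <<< "Ada Lovelace"   # split on whitespace into two variables
echo "first=$first last=$last"          # first=Ada last=Lovelace

IFS=, read -r a b c <<< "x,y,z"         # split on commas, for this read only
echo "$b"                               # y

# Interactive version (waits for you to type):
# read -r -p "Your name: " name
```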

readarray … you can actually store items in an array within bash, and you can read items from a file into an array in bash with readarray as shown below.

readarray (continued) … bash has arrays just like scripting languages such as python. Here’s a good guide that involves a bunch of array scripting. https://opensource.com/article/18/5/you-dont-know-bash-intro-bash-arrays

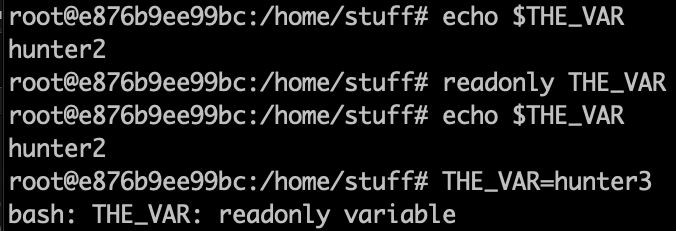
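A small sketch of readarray, assuming bash 4 or newer (the bash 3.2 that ships with macOS doesn't have it). The file contents and the fruits array are made up for the example.

```bash
#!/usr/bin/env bash
# Sketch: read each line of a file into an array element.
list_file=$(mktemp)
printf 'apple\nbanana\ncherry\n' > "$list_file"

readarray -t fruits < "$list_file"   # -t strips the trailing newline from each element

echo "${#fruits[@]}"    # 3 (number of elements)
echo "${fruits[1]}"     # banana (indexes start at 0)
for f in "${fruits[@]}"; do
  echo "fruit: $f"
done
rm -f "$list_file"
```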

readonly … you can make variables readonly.

readonly (continued) … you can’t unset or reassign a readonly variable. The purpose of a readonly variable is to keep its value fixed until the shell session ends!
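A quick sketch of what readonly refuses to do, assuming bash; the variable name site is arbitrary. The attempts run in subshells so the errors can't affect the rest of the script.

```bash
#!/usr/bin/env bash
# Sketch: a readonly variable cannot be reassigned or unset.
readonly site="example"

if ( site="changed" ) 2>/dev/null; then
  echo "reassigned"
else
  echo "reassignment refused"    # this branch runs
fi

if ( unset site ) 2>/dev/null; then
  echo "unset worked"
else
  echo "unset refused"           # this branch runs
fi

echo "$site"                     # example
```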

reboot … reboots the system.

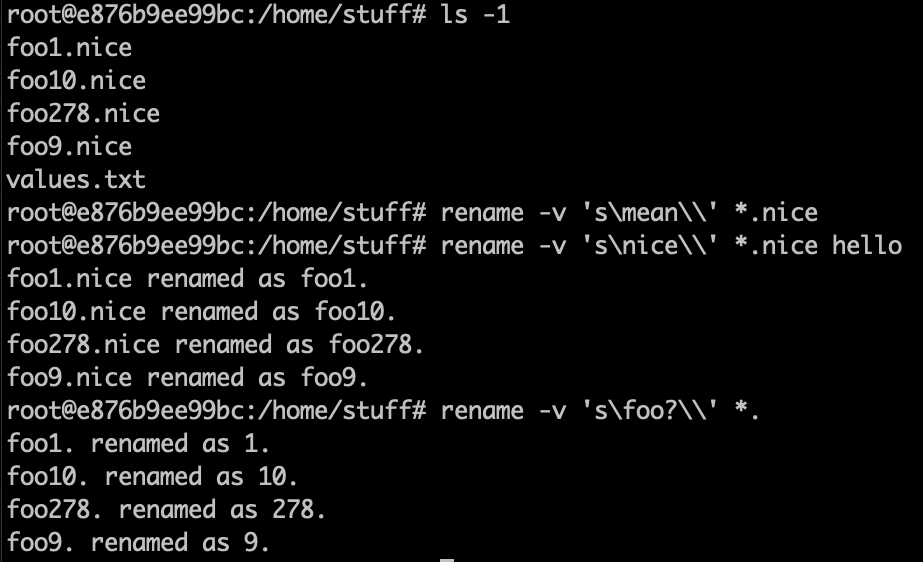

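rename … rename files in bulk according to a regex pattern and substitution command (that's the Perl-based rename; the util-linux rename shipped on some distributions uses a different, simpler syntax). Careful! There’s no undo, so once things are renamed, that’s it! Both versions accept -n for a dry run that only shows what would happen.

renice … like nice, but it changes the nice value (scheduling priority) of a process that is already running, e.g.: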

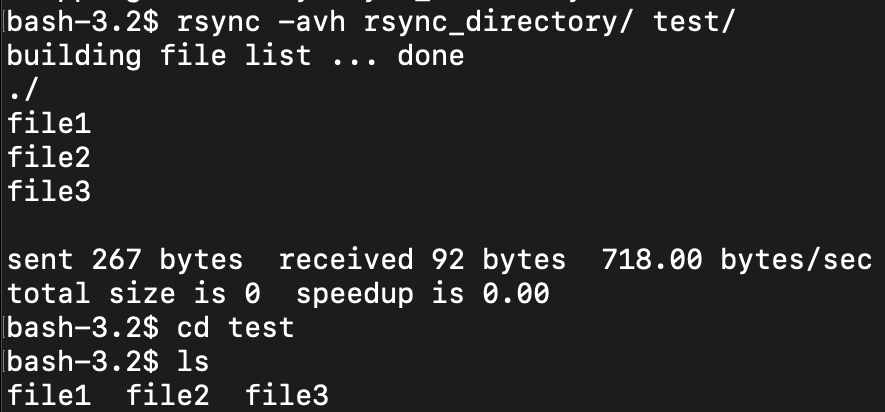

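rsync … kind of like Dropbox, allows you to sync folders between two machines, although it's a one-way copy that runs when you invoke it, not a continuous background sync. https://www.geeksforgeeks.org/rsync-command-in-linux-with-examples/

return … exits a function with the given exit status (a number from 0 to 255), which the caller sees in $?. To hand back other data, such as a string, the function prints it and the caller captures it with $(...).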

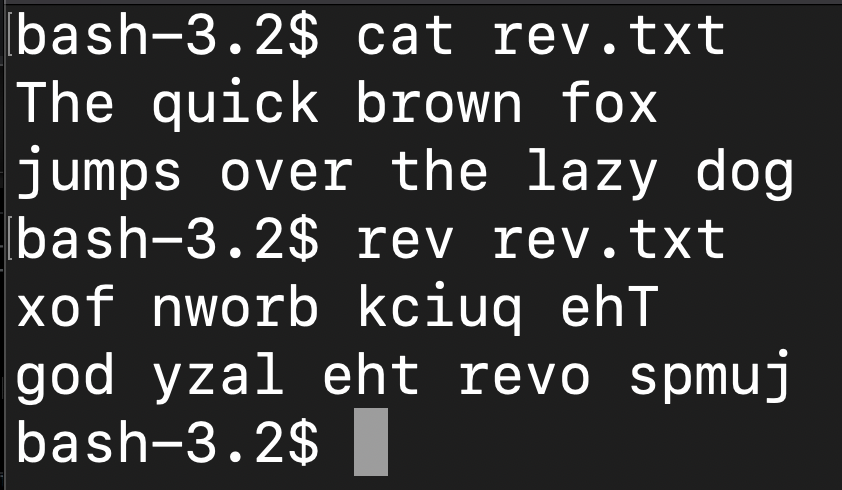
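A minimal sketch of the difference between a return status and a returned value, assuming bash; is_even and double are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Sketch: return sets a function's exit status; printing is how you hand back data.
is_even() {
  if (( $1 % 2 == 0 )); then
    return 0       # success, i.e. "true"
  fi
  return 1         # failure, i.e. "false"
}

double() {
  echo $(( $1 * 2 ))    # "return" a value by printing it
}

if is_even 4; then echo "4 is even"; fi
is_even 7 || echo "7 is odd (exit status $?)"    # exit status 1
result=$(double 21)                              # capture the printed value
echo "$result"                                   # 42
```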

rev … reverses the characters on each line of a file (the order of the lines stays the same), as shown:
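A tiny sketch, assuming the util-linux rev found on most Linux systems. Note that each line is mirrored, but the lines themselves don't change order.

```bash
#!/usr/bin/env bash
# Sketch: rev reverses the characters within each line.
printf 'hello world\nabc\n' | rev
# dlrow olleh
# cba
```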

rm … remove, or rather delete, the file in question…fairly common command.

rmdir … this is for removing directories (folders), but only empty ones. If it’s a folder with a bunch of stuff in it, you have to use, “rm -rf”.

rsync … makes one directory match another in terms of the files and directories in it, copying only what has changed. It can be used remotely, in which case it runs over ssh by default, so the transfer is encrypted.

scp … secure copy. This is a command parallel to ssh, which allows you to securely copy a file from one server/computer to another, remotely. So whereas you would login with ssh by doing, “ssh name@server” and then have some sort of passphrase or keyless entry…

scp (continued) … with scp, you do something similar, but you also include a filename, plus a colon after the server, e.g.: “scp filename.txt name@server:” or if there’s a port involved, “scp -P 1234 filename.txt name@server:” - you can also specify where the file goes on the receiving server, e.g. “name@server:/path/to/dir/”. Without the colon, scp just makes a local copy named name@server.

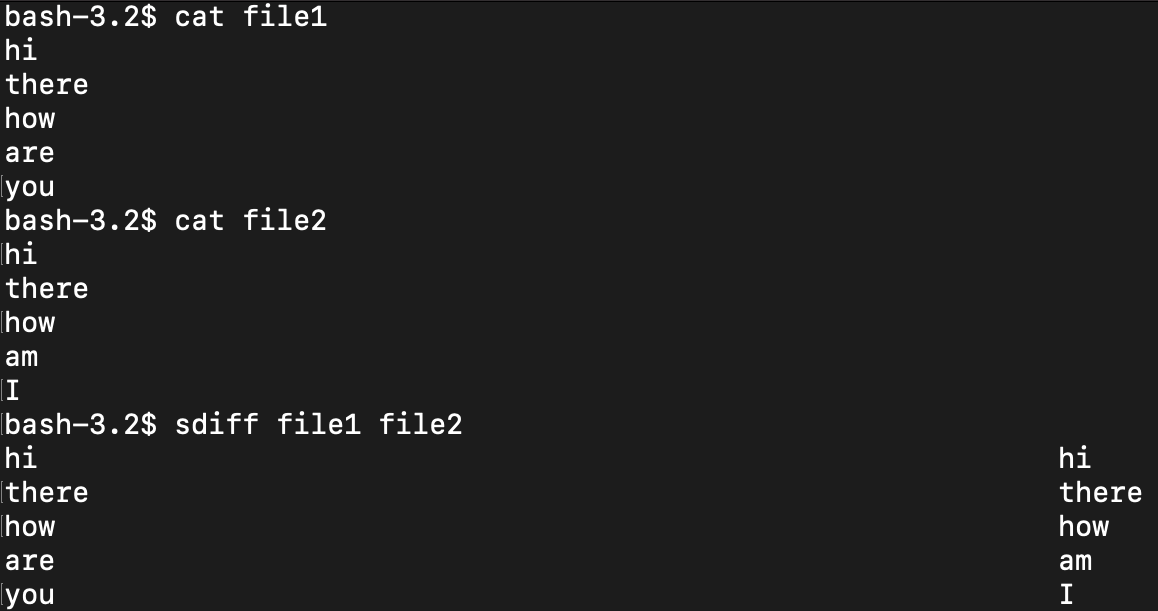

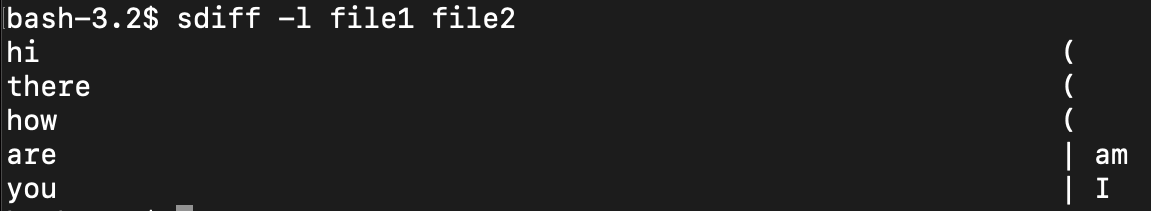

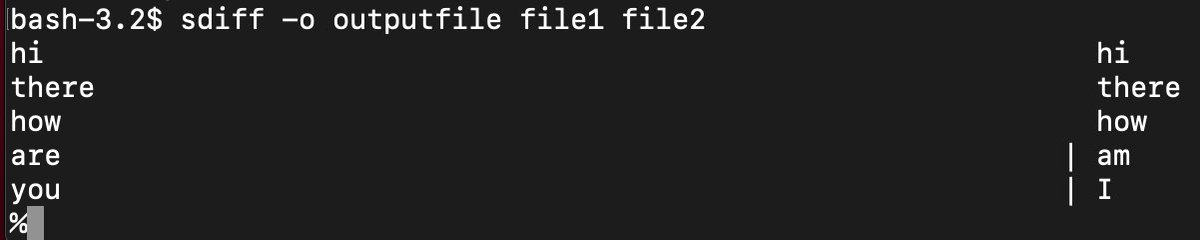

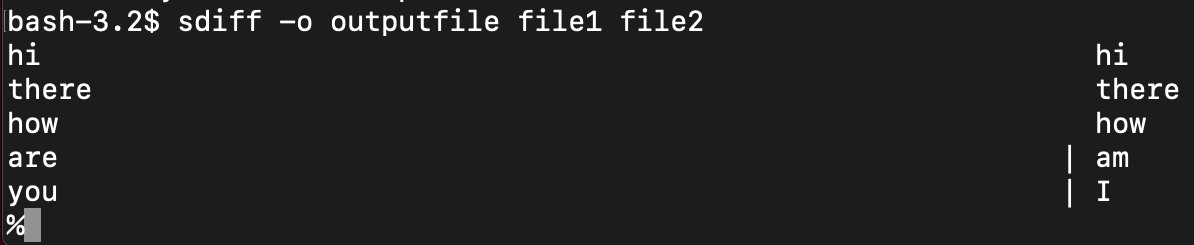

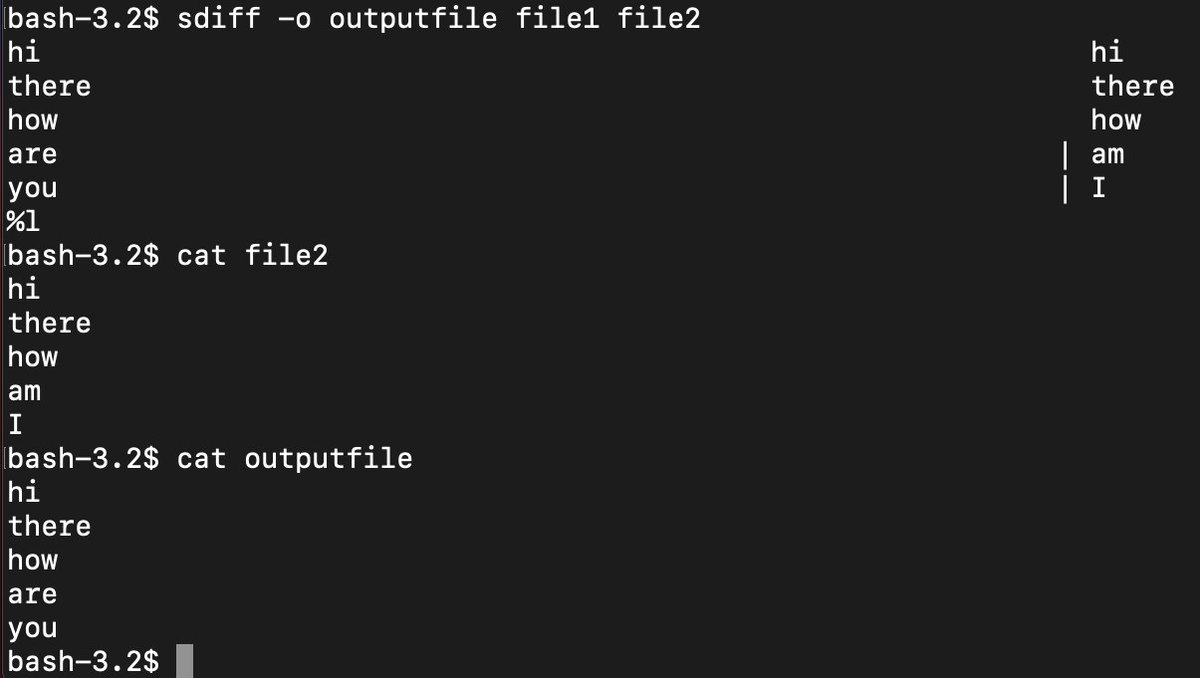

sdiff … compares two files side by side, and can merge them interactively. If you do sdiff -l, lines that are identical in both files are shown only in the left column, marked with (. If you do sdiff -o OutputFile file1 file2, it brings up a prompt which allows you to merge the two files.

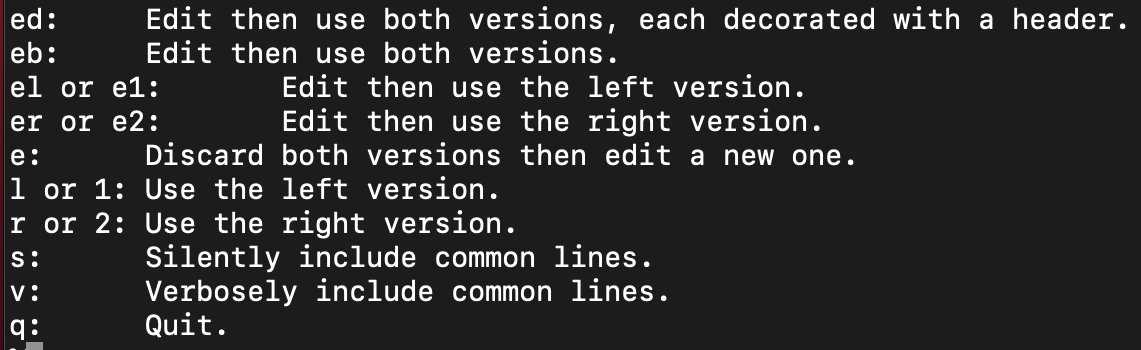

sdiff (continued) … the prompt looks like the following. So for example if you choose, “l” then the left side will go into the output file.

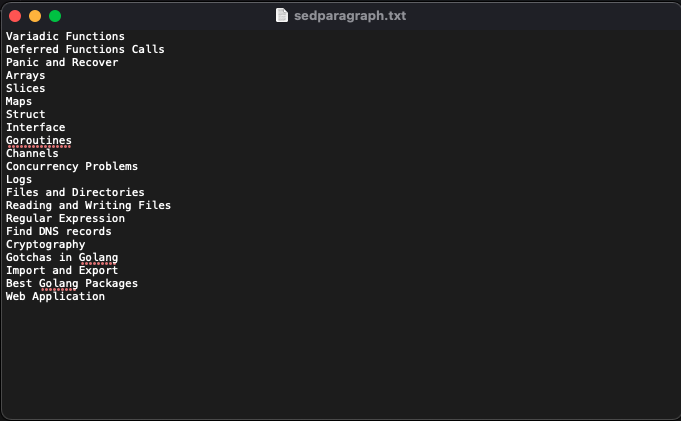

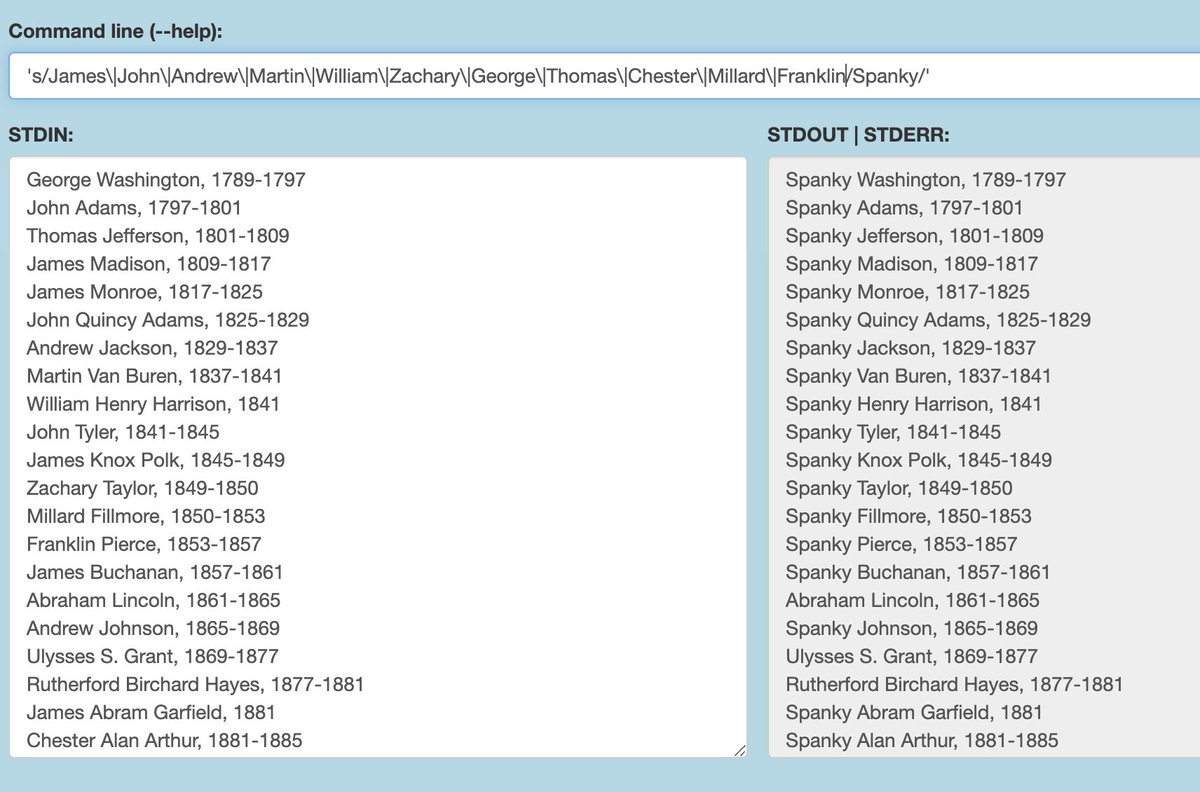

sed … stream editor, like awk but different options and more meant for streams of characters rather than tabular data. You can use a sed builder such as this: https://sed.js.org/

sed (continued) … here we’re showing a replacement command, which takes names and replaces them with, “Spanky.” But you can also do loops, flags, prints, appending, match patterns, and so on. Here’s a cheat sheet. https://quickref.me/sed
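A minimal sketch of the same kind of replacement, assuming a sed that supports -E (GNU and BSD sed both do); the names are made up.

```bash
#!/usr/bin/env bash
# Sketch: sed substitution, s/pattern/replacement/flags.
names=$'Alice went home\nBob and Alice went out'

# -E enables extended regex (so | means "or"); g replaces every match on a line
printf '%s\n' "$names" | sed -E 's/Alice|Bob/Spanky/g'
# Spanky went home
# Spanky and Spanky went out

# -n suppresses normal output; 2p prints only line 2
printf '%s\n' "$names" | sed -n '2p'
# Bob and Alice went out
```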

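screen … a terminal multiplexer. You can use it while logged in over ssh to run multiple shells in one session, and to detach and later reattach, so your work keeps running even if the connection drops.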

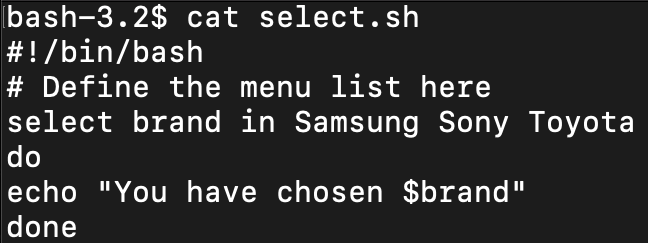

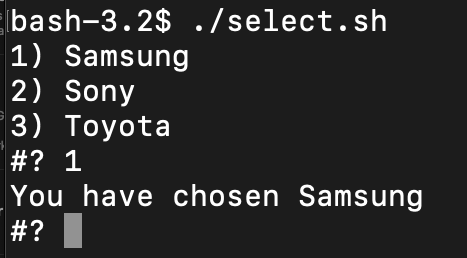

select … lets a user pick one item from a list of options. Coding select is quite simple because select generates the numbered menu for you from the list (e.g. an array).

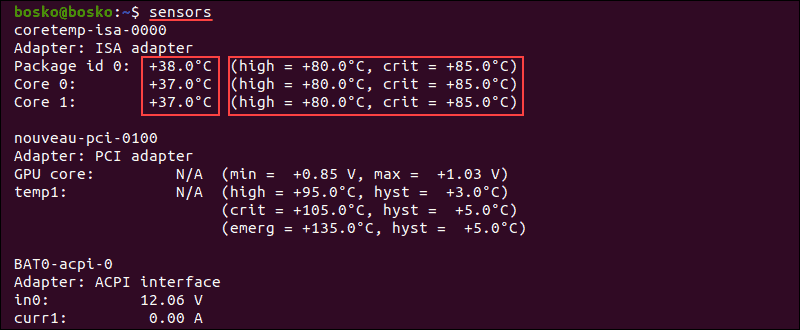
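A minimal sketch of a select menu, assuming bash; pick_fruit and the fruit list are hypothetical. select prints its menu and prompt to stderr and reads the choice from stdin, so for a non-interactive demo the choice is piped in.

```bash
#!/usr/bin/env bash
# Sketch: a numbered menu with select.
pick_fruit() {
  local fruits=("apple" "banana" "cherry")
  local PS3="Pick a fruit: "          # PS3 is the prompt select shows
  local fruit
  select fruit in "${fruits[@]}"; do
    if [[ -n $fruit ]]; then
      echo "You picked $fruit"
      break                           # select keeps looping unless you break
    fi
    echo "Not a valid number, try again"
  done
}

# Interactively you'd just run pick_fruit; here the choice "2" is piped in.
echo 2 | pick_fruit                   # prints: You picked banana
```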

sensors … used to read sensor chips showing temperature and other environmental stuff. It’s not available on the computer I’m on but here’s a guide and example. https://www.commandlinux.com/man-page/man1/sensors.1.html

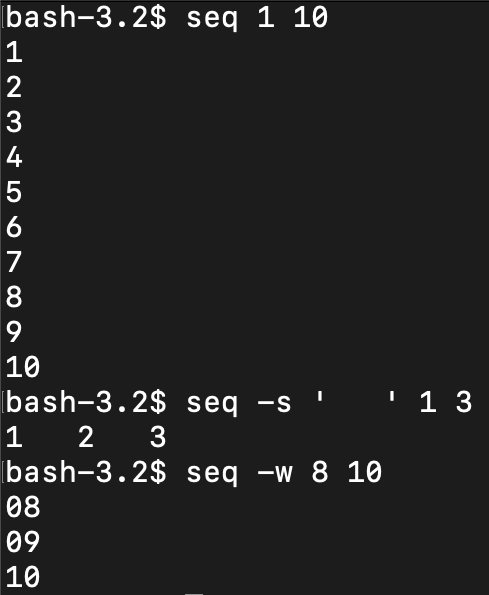

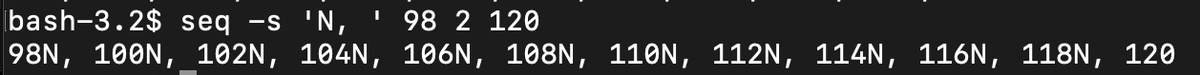

seq … print out numeric sequences according to different patterns and with some different options available.

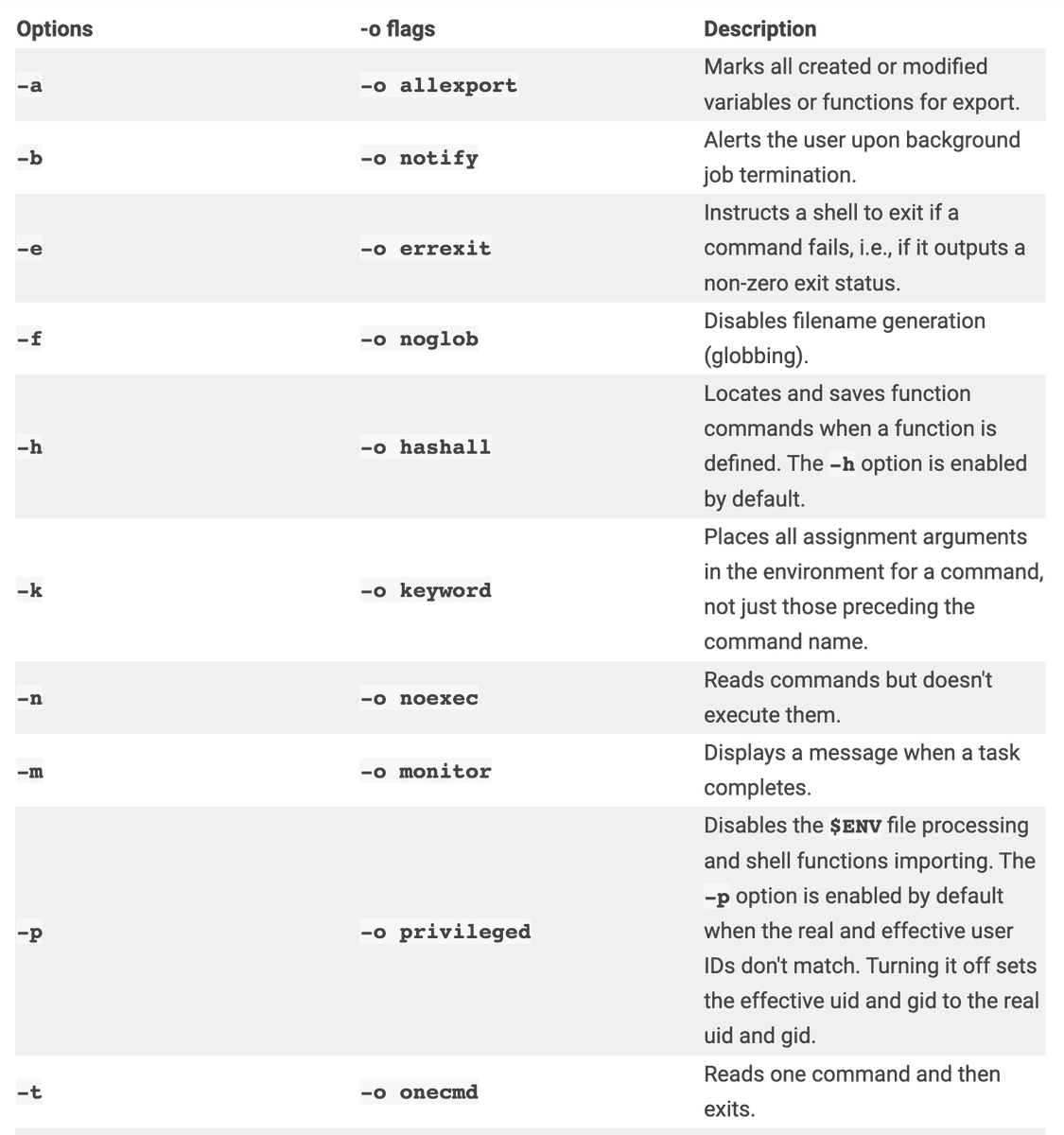

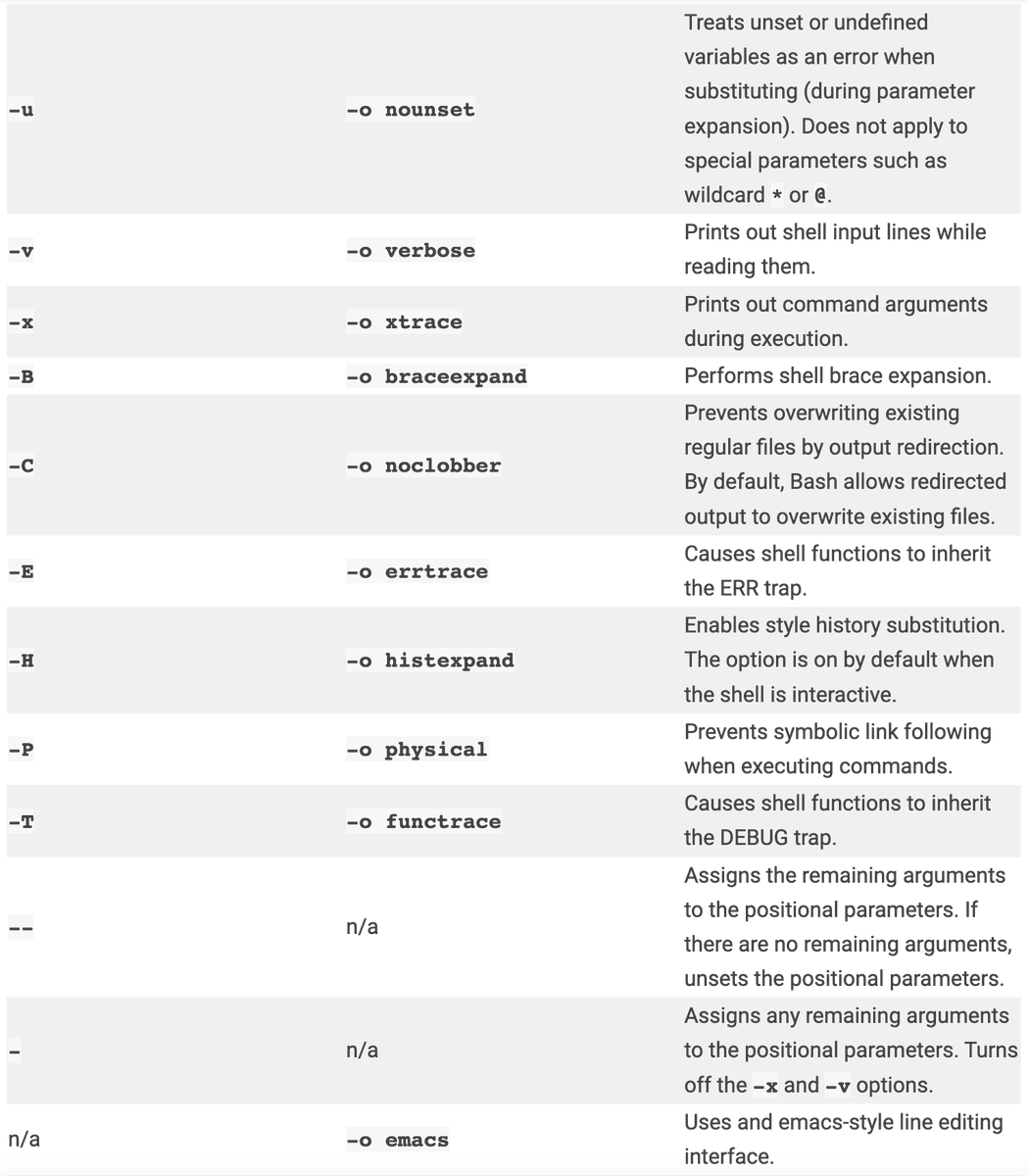

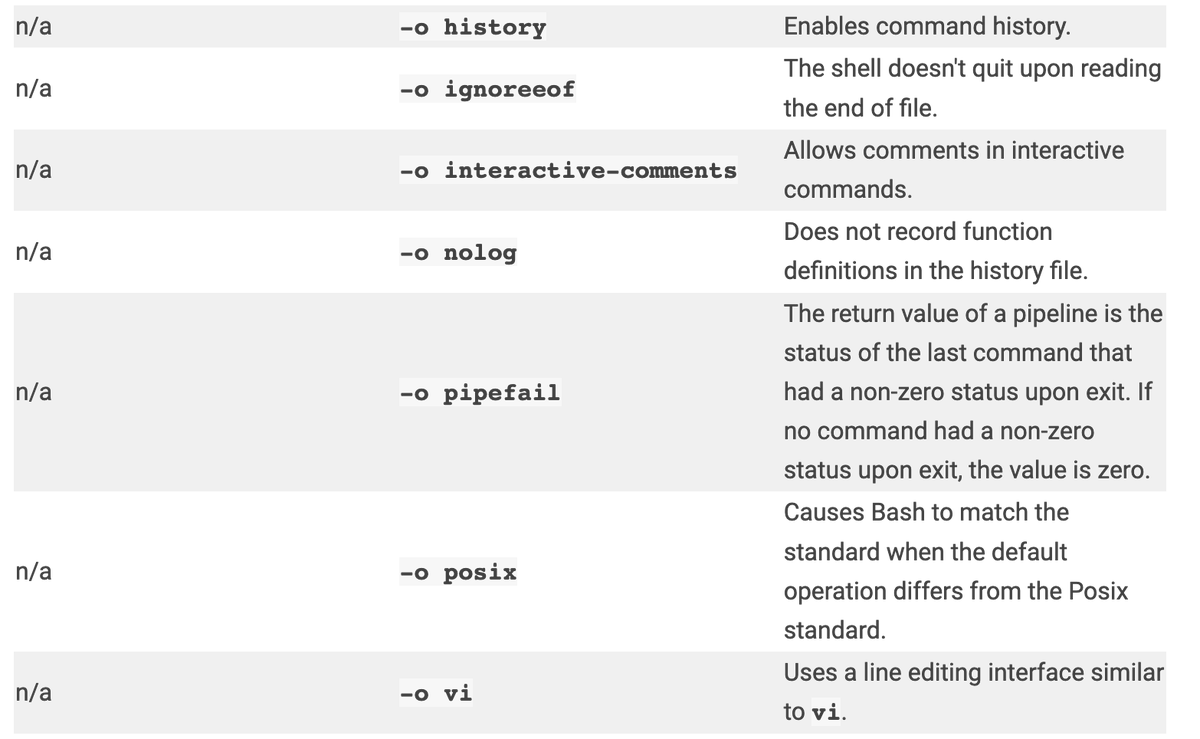
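A few seq patterns as a sketch, assuming GNU coreutils seq (other implementations may differ in details).

```bash
#!/usr/bin/env bash
# Sketch: common seq patterns.
seq 3          # 1 2 3, one per line
seq 2 2 10     # start 2, step 2, stop at 10: 2 4 6 8 10
seq -w 8 10    # -w pads to equal width: 08 09 10
seq -s, 1 5    # -s sets the separator: 1,2,3,4,5
```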

set … lets you change all sorts of settings of bash itself; there are lots of options. For example, with set -v (verbose), bash prints each command line back to you as it reads it.

sftp … like ftp, but runs over an ssh connection (the SSH File Transfer Protocol). You can do: sftp -oPort=customport user@servername, then you will get a prompt, sftp>, at which point you can use get/put, e.g. sftp> get remote-file local-file or sftp> put -r local-directory to upload a directory along with its files and subdirectories.

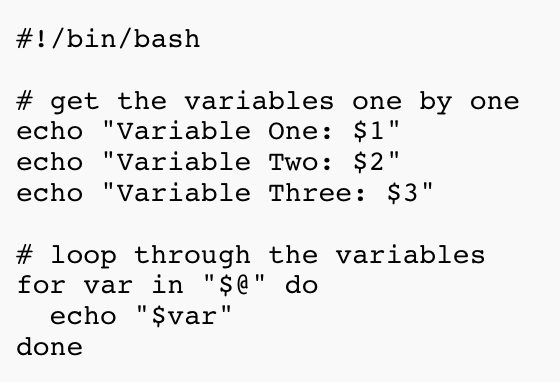

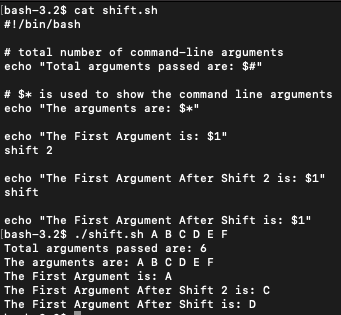

shift … shifts the positional parameters left by one ($2 becomes $1, and so on), which is a way to cycle through the arguments fed in to a script or function, as shown in the attached image.

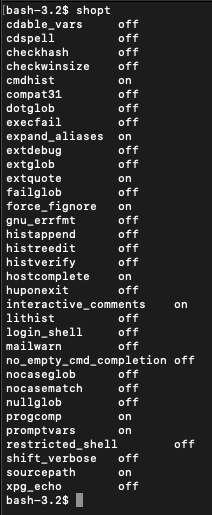
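A minimal sketch of consuming arguments with shift, assuming bash; print_args is a hypothetical function name.

```bash
#!/usr/bin/env bash
# Sketch: process arguments one at a time with shift.
print_args() {
  local i=1
  while (( $# > 0 )); do
    echo "arg $i: $1"    # $1 is always the "current" argument
    shift                # drop $1; $2 becomes $1 and $# goes down by one
    i=$((i + 1))
  done
}

print_args alpha beta "gamma delta"
# arg 1: alpha
# arg 2: beta
# arg 3: gamma delta
```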

shopt … shell options. You can use -s to set (enable) an option and -u to unset it. Here are a bunch of explanations on what the options are. https://www.gnu.org/software/bash/manual/html_node/The-Shopt-Builtin.html

shutdown … shut down or restart linux.

sleep … suspends the calling process for a specified amount of time. Basically, the next command can’t be called until the sleep process is done. You can call by seconds, minutes, hours, etc. with “sleep 5s” or “sleep 5m”

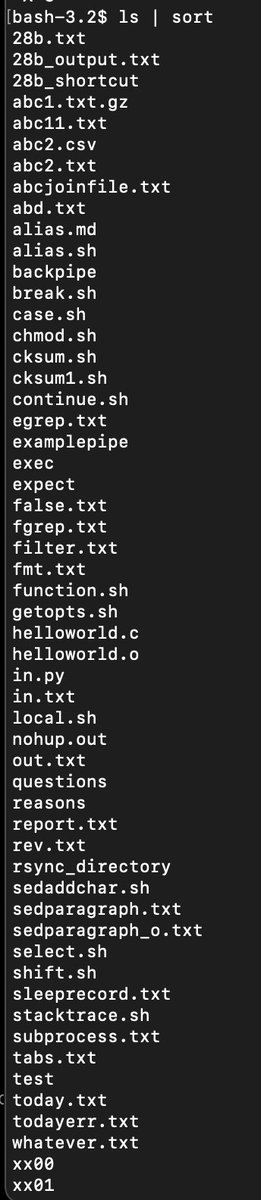

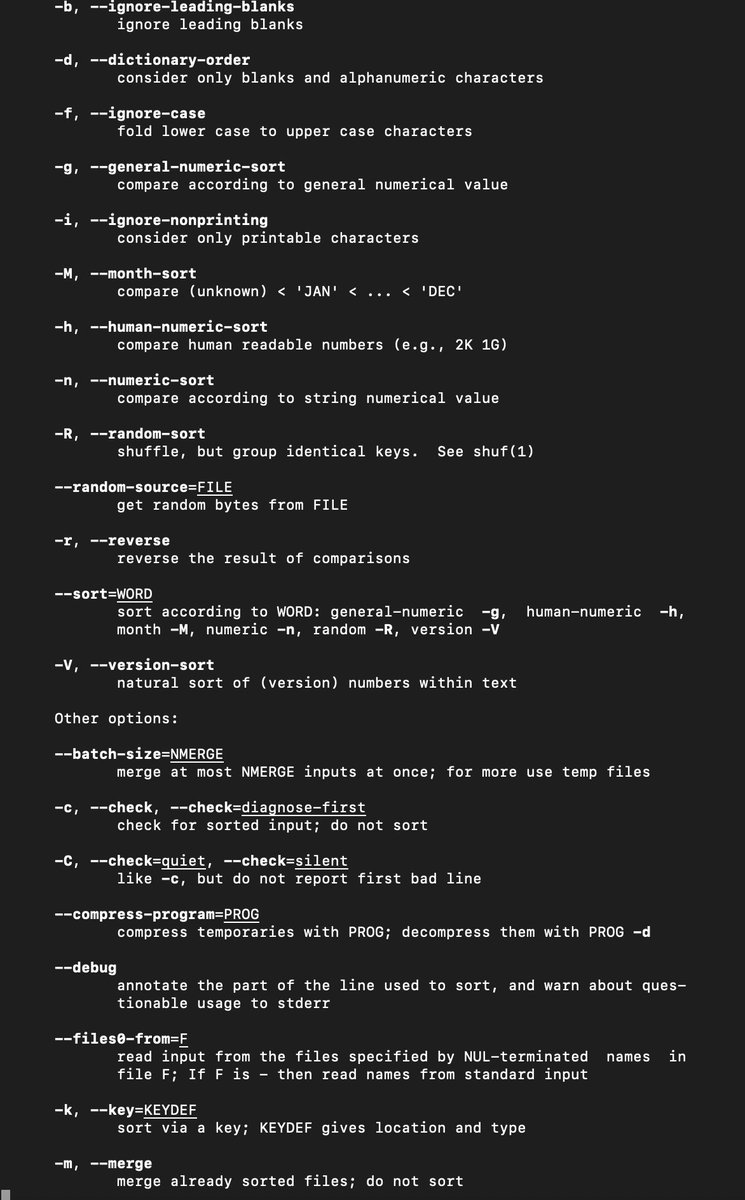

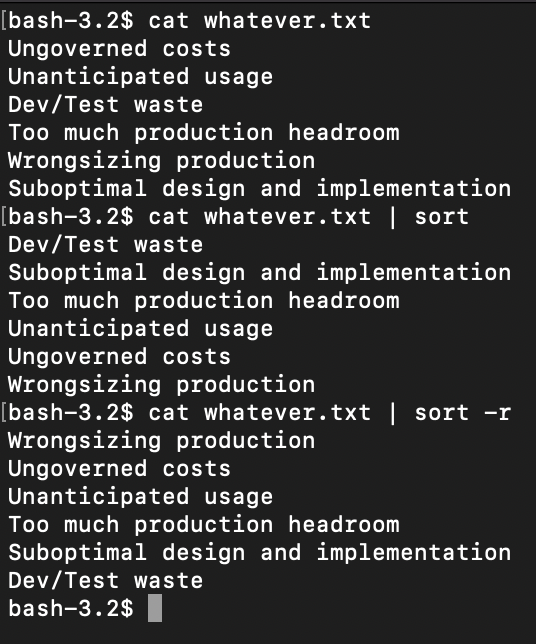

sort … sort lines, for example ls | sort sorts the files listed by ls alphabetically. Lots of sorting options. You can also sort the contents of a file directly with, “sort file.txt”, or pipe it in with, “cat file.txt | sort”.

source … executes the contents of a file in the current shell, so any variables and functions it defines stick around afterwards. In bash, source and . are the same builtin; . is the original POSIX name, and some /bin/sh shells (e.g. dash) only provide . and not source.
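A short sketch of default versus numeric sorting, assuming GNU or BSD sort; the sample values are made up. The second line shows why -n matters: without it, numbers are compared as text.

```bash
#!/usr/bin/env bash
# Sketch: text sort vs numeric and reverse sorts.
printf 'banana\napple\ncherry\n' | sort    # apple banana cherry
printf '10\n9\n100\n' | sort               # 10 100 9   (compared as text)
printf '10\n9\n100\n' | sort -n            # 9 10 100   (-n compares as numbers)
printf '10\n9\n100\n' | sort -nr           # 100 10 9   (-r reverses)
```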

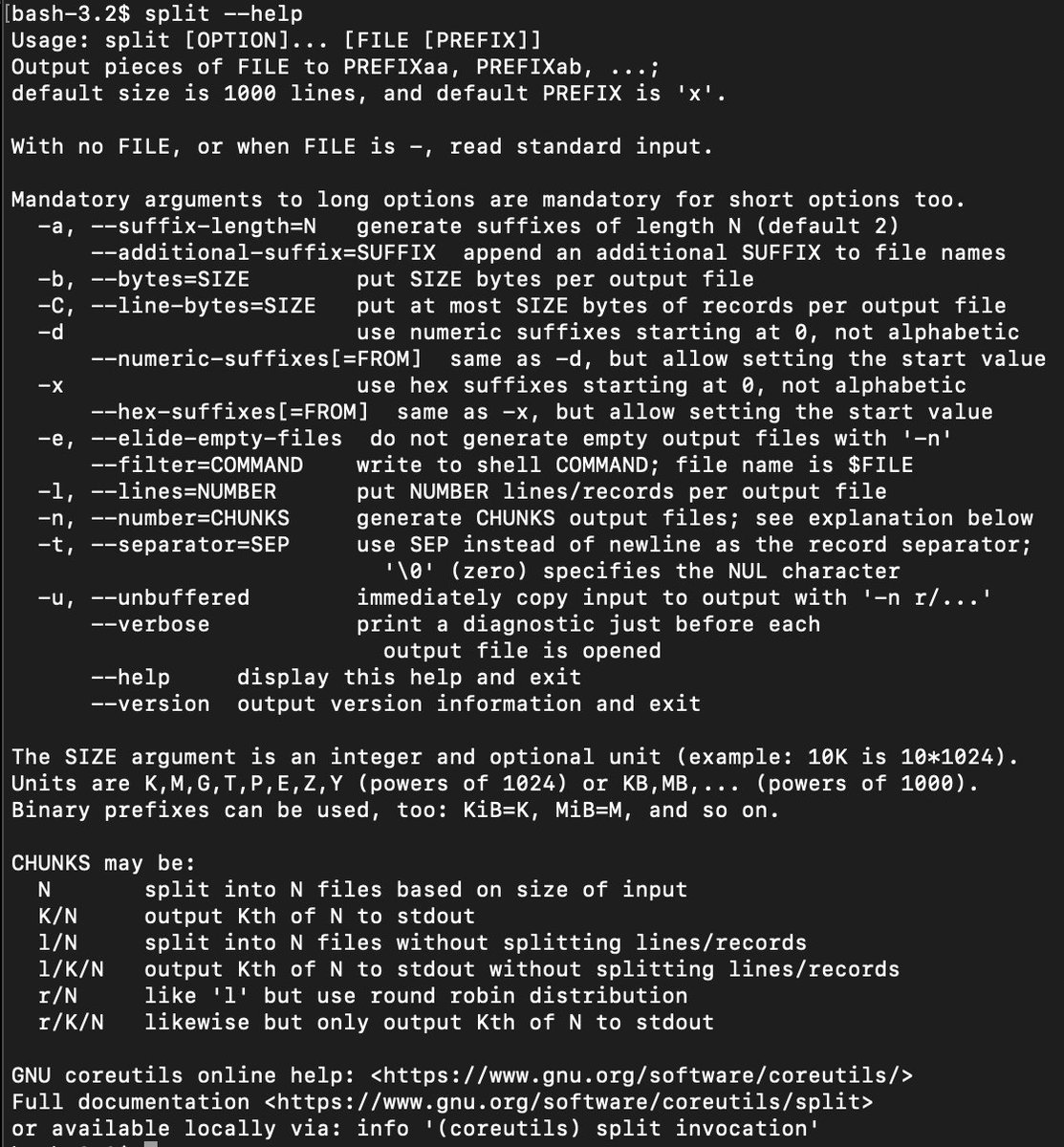

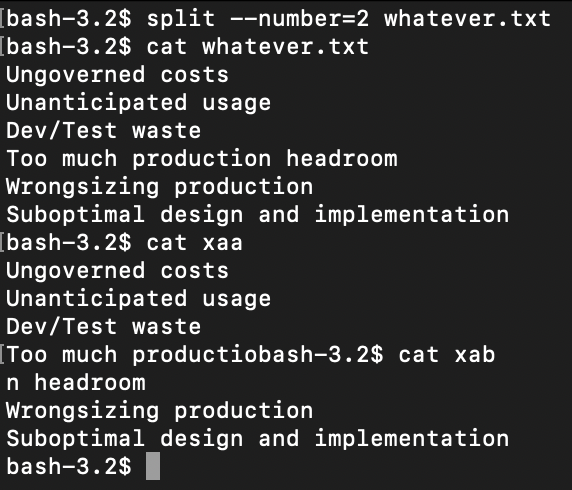
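A minimal sketch of why source differs from running a script, assuming bash; the temporary file and the greeting variable are made up for the demo.

```bash
#!/usr/bin/env bash
# Sketch: running a file vs sourcing it.
script=$(mktemp)
echo 'greeting="hi from the file"' > "$script"

bash "$script"                               # runs in a child shell; nothing leaks back
echo "after bash:   ${greeting:-<unset>}"    # after bash:   <unset>

source "$script"                             # runs in THIS shell (same as: . "$script")
echo "after source: $greeting"               # after source: hi from the file
rm -f "$script"
```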

split … split a file by byte size, number of lines, or number of chunks; other options control the suffixes of the output file names. In this example I split the whatever.txt file into two chunks. By default the new files are named xaa, xab, xac, etc.

ssh … encrypted communication between machines. Several key types can be used, such as rsa, dsa and ed25519. The basic format is ssh user@server. The setup involves either a password or a key pair: you generate the key pair on your own machine (ssh-keygen) and copy the public key to the remote server (e.g. with ssh-copy-id). The key pair is more secure.
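A minimal sketch of split by line count, assuming GNU coreutils; whatever.txt here is a stand-in file created for the demo inside a temporary directory.

```bash
#!/usr/bin/env bash
# Sketch: split a 5-line file into 3-line chunks.
demo_dir=$(mktemp -d)
seq 1 5 > "$demo_dir/whatever.txt"
(
  cd "$demo_dir" || exit 1
  split -l 3 whatever.txt    # default prefix "x": xaa (lines 1-3), xab (lines 4-5)
  ls x*                      # xaa xab
)
cat "$demo_dir/xab"          # 4 and 5
```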

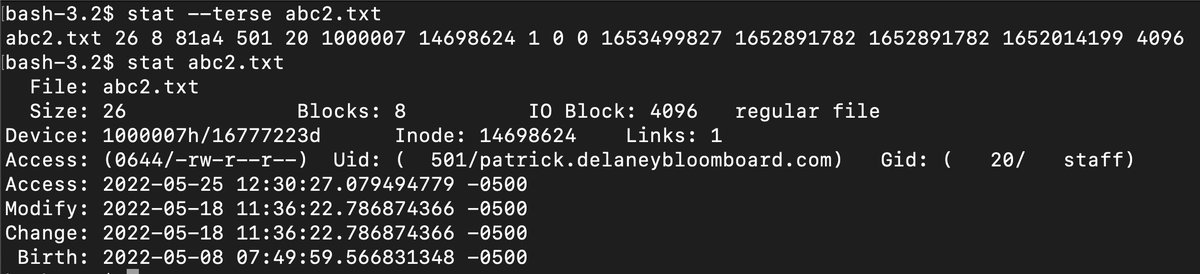

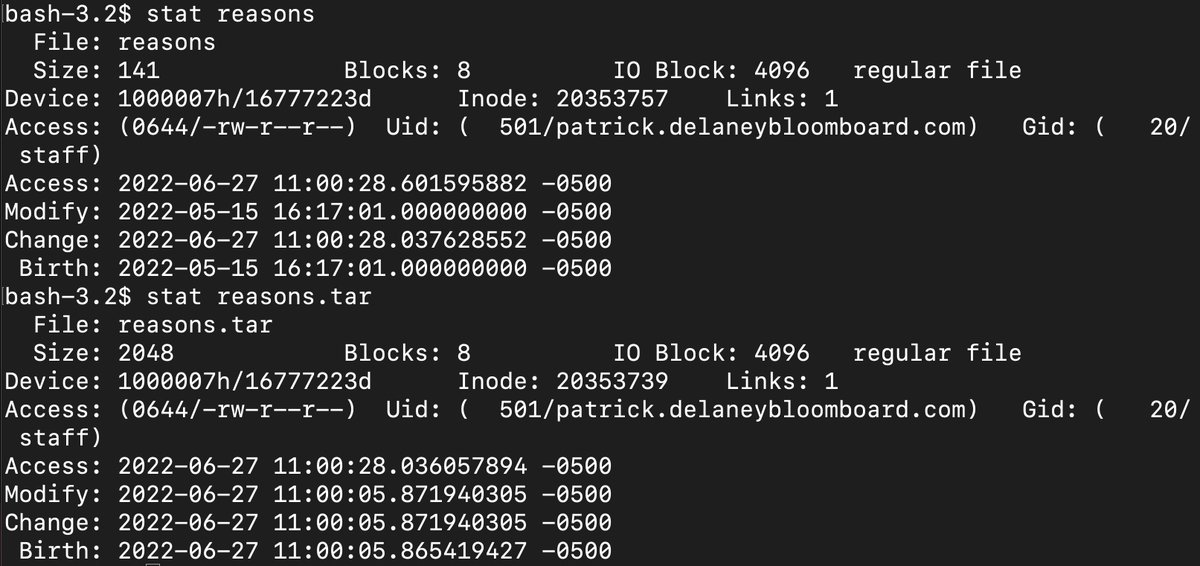

stat … gives info on a file in terms of when it was created, accessed, modified, the size, etc. You can also have the output given in terse format as shown.

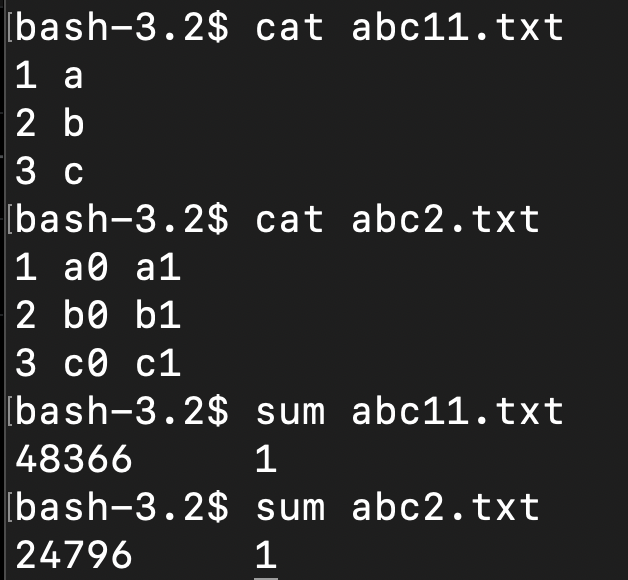

sum … can be used to do a checksum on a file, used to help check to see if the files are the same.

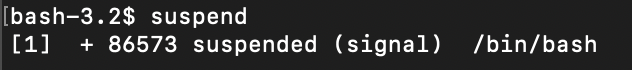

suspend … suspends the execution of the shell until it receives a SIGCONT signal. Login shells cannot be suspended (unless forced with -f). It’s the shell-level equivalent of pressing CTRL+Z on a program.

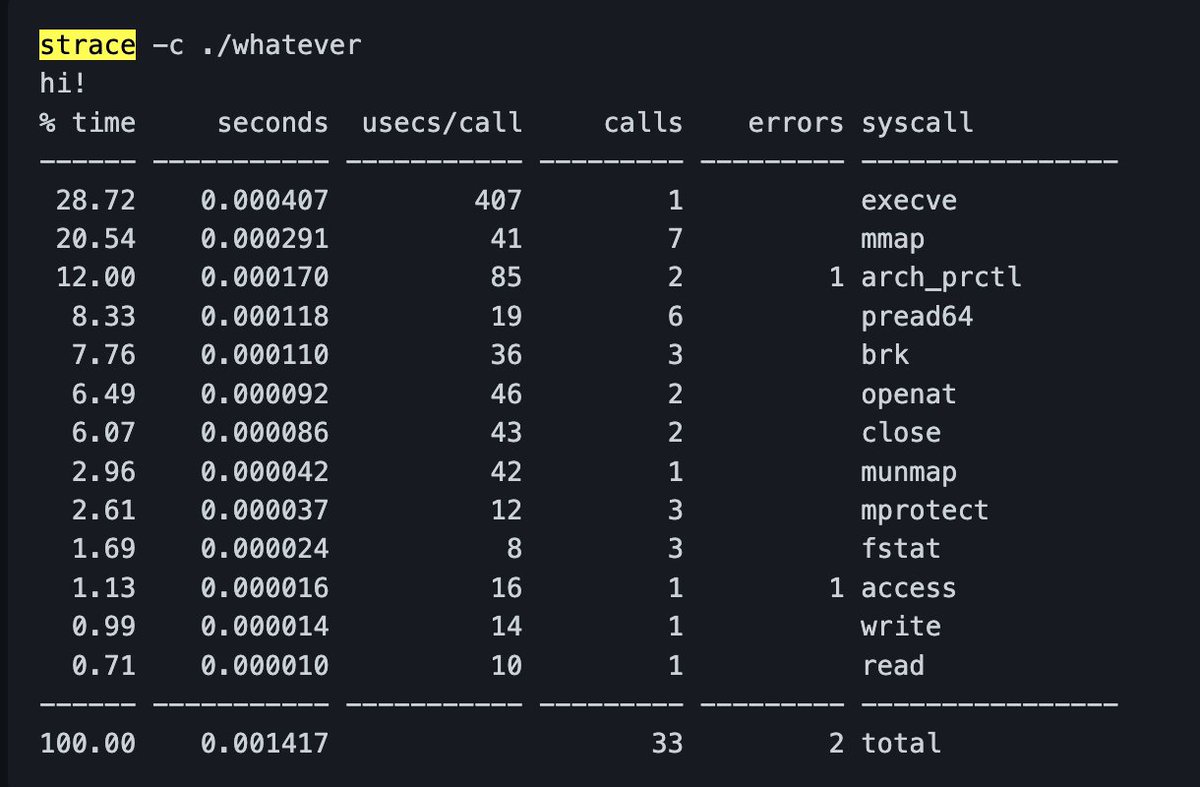

strace … traces the system calls and signals a program makes, one per line, optionally with timing information. Note that strace's timings don't reflect how long the program normally takes, in machine or human-perceived time, because strace itself slows down the running of the program.

strace (continued) … I go into great detail about how strace works here in these notes: https://github.com/pwdel/codepractice/blob/main/MLOps/Codeperform/codeperform.md#testing-out-strace-on-code

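su … substitute user: switches to another user (root by default) and runs a shell or commands as that user, after you enter that user's password.

sudo … execute commands as root (or as another user with -u), authenticating with your own password, if the sudoers policy allows it. Note that the default sudo configuration differs between Linux distributions.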

tail … show the last part of an output - you can specify the number of lines.

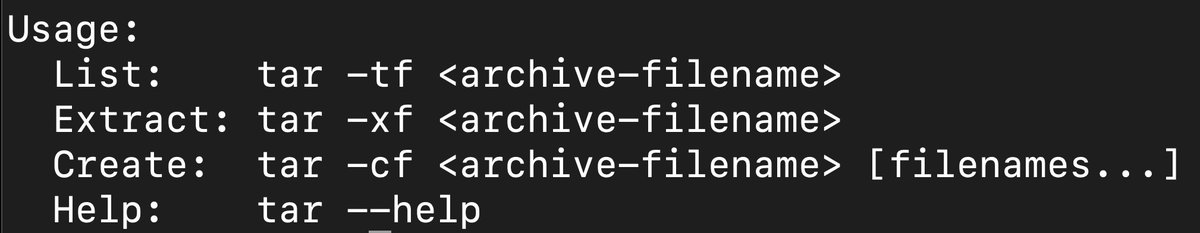

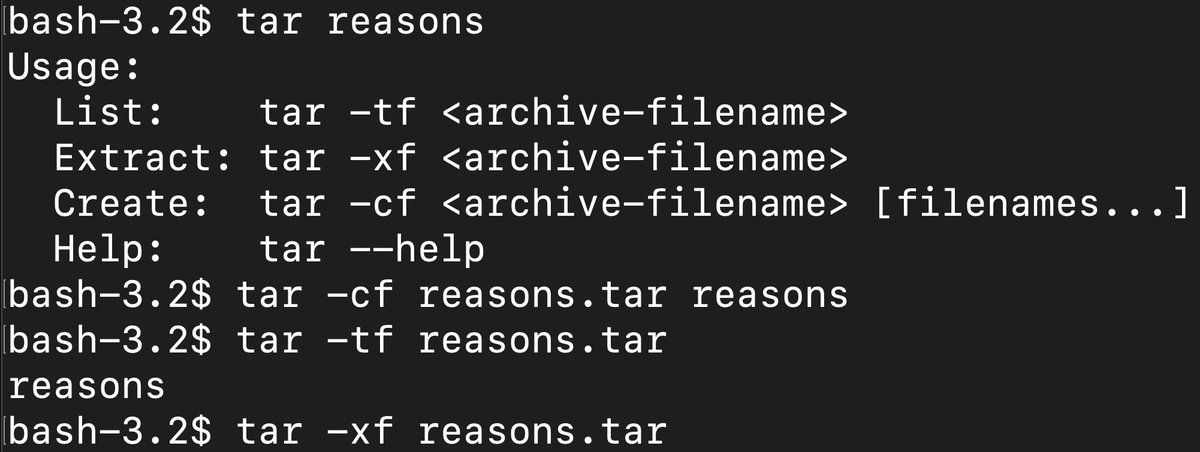

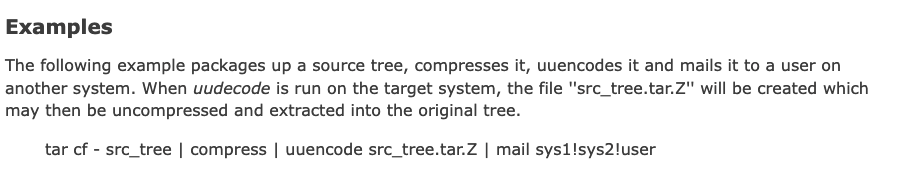
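Two common tail forms as a sketch, assuming GNU or BSD tail; seq just generates sample lines.

```bash
#!/usr/bin/env bash
# Sketch: last N lines vs "from line N onward".
seq 10 | tail -n 3     # 8 9 10 (the last 3 lines)
seq 10 | tail -n +8    # 8 9 10 (everything starting AT line 8)
```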

tar … bundles files into a single archive; add -z (gzip) or -j (bzip2) to compress it as well. The main modes are create (-c), list (-t) and extract (-x).

tar (continued) … remember, a tar file has overhead: each file gets a 512-byte header describing how to extract it, and the archive is padded out to a fixed block size. So your tar file may be larger than the original, if the original is very small.

tee … usually if you redirect the output of a command into a file, nothing shows up on stdout. tee lets you send the output in two or more directions at once: to stdout and to one or more files. Redirecting output to a file with > is equivalent to tee’ing to that file and sending tee’s own output to >/dev/null.
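A minimal sketch of the create / list / extract cycle, assuming GNU tar (or bsdtar) with gzip available; the project directory is invented for the demo. The last two lines show the size overhead on a tiny file; the exact archive size depends on the tar implementation, so it isn't asserted.

```bash
#!/usr/bin/env bash
# Sketch: create, list and extract a gzip-compressed tar archive.
work=$(mktemp -d)
mkdir -p "$work/project"
echo "hello" > "$work/project/notes.txt"

# c = create, z = gzip, f = archive file name; -C changes into $work first
tar -czf "$work/project.tar.gz" -C "$work" project
tar -tzf "$work/project.tar.gz"                      # t = list: project/ project/notes.txt

mkdir "$work/restore"
tar -xzf "$work/project.tar.gz" -C "$work/restore"   # x = extract
cat "$work/restore/project/notes.txt"                # hello

# Overhead: an uncompressed tar of a 6-byte file is far bigger than 6 bytes
tar -cf "$work/tiny.tar" -C "$work/project" notes.txt
wc -c < "$work/tiny.tar"
```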

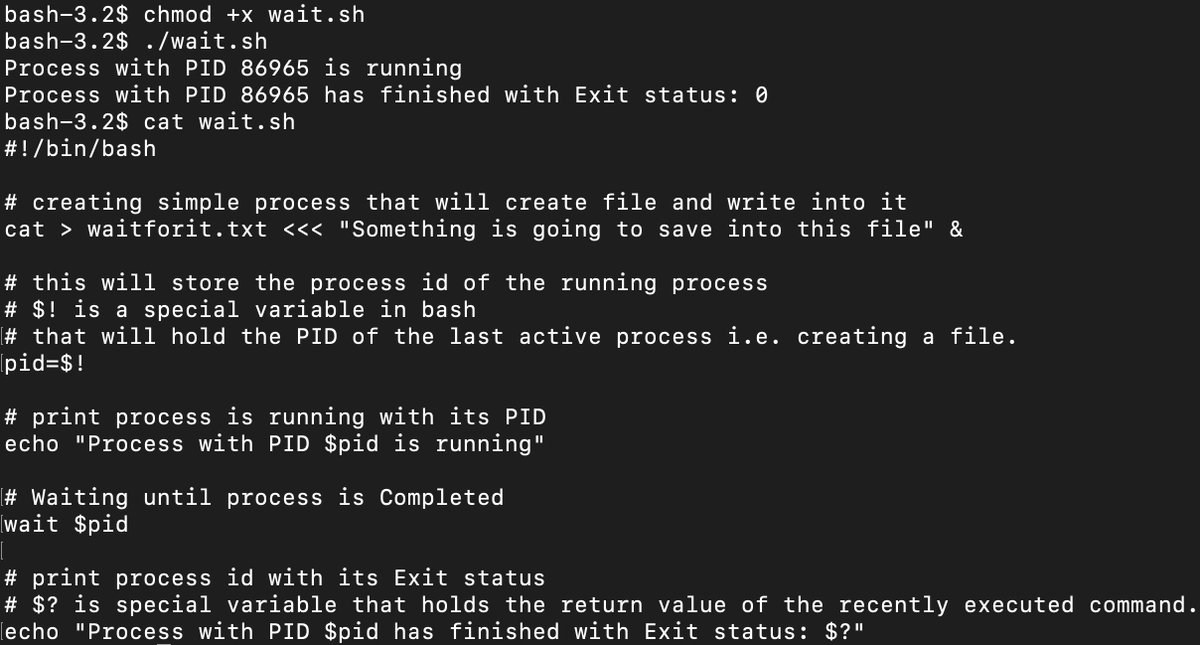
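A minimal sketch of tee writing to the terminal and a file at the same time, assuming any POSIX tee; the log file is a temporary one.

```bash
#!/usr/bin/env bash
# Sketch: tee copies its input to stdout AND to files.
out=$(mktemp)
echo "build finished" | tee "$out"              # shown on the terminal and written to $out
echo "second line" | tee -a "$out" >/dev/null   # -a appends; >/dev/null hides tee's own copy
cat "$out"
# build finished
# second line
```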

systemctl … you can use this to stop, start and control various applications and services. https://www.digitalocean.com/community/tutorials/how-to-use-systemctl-to-manage-systemd-services-and-units

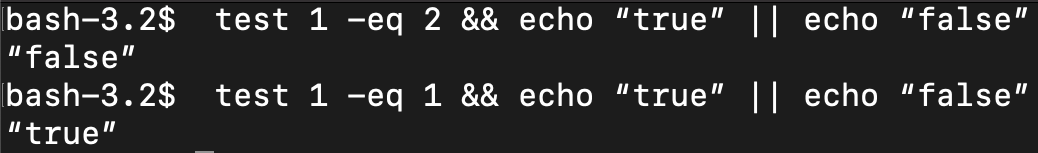

test … tests expressions. The -eq option tests whether two integers are equal; there are other options, such as -lt and -gt for numbers, = for strings, and -f to check that a file exists.

time … measure the time to run a routine. You can use it to measure whatever program in any language. I wrote a simple shell script to measure the time to execute various routines against each other: https://github.com/pwdel/codepractice/blob/main/MLOps/Codeperform/app/codeperform.sh

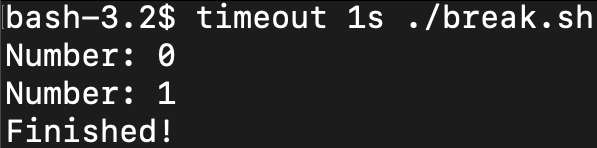

timeout … run the command, but kill it if it's still running after the given duration. You can use --signal=SIGNAL to specify the signal to send on timeout. The duration is a floating point number with an optional suffix: s for seconds, m for minutes, h for hours, d for days.

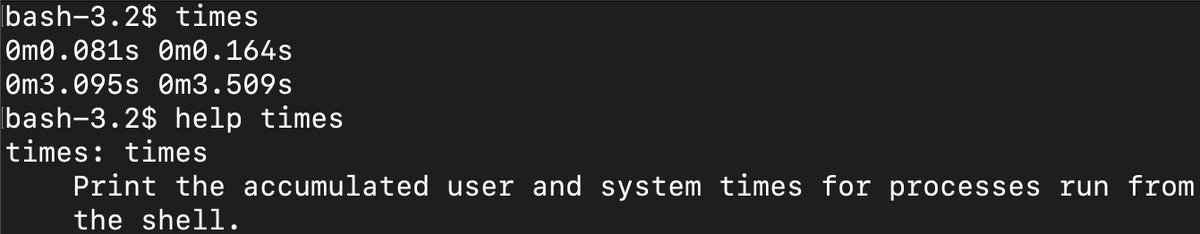
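A sketch of timeout's exit statuses, assuming GNU coreutils timeout (macOS doesn't ship it by default). Exit status 124 means the time limit was hit; when SIGKILL is used instead, the status is 128 + 9 = 137.

```bash
#!/usr/bin/env bash
# Sketch: what timeout reports when it has to stop a command.
status=0
timeout 0.5 sleep 5 || status=$?
echo "timed out, exit status $status"              # 124

status=0
timeout --signal=KILL 0.5 sleep 5 || status=$?
echo "killed with SIGKILL, exit status $status"    # 137

status=0
timeout 5 true || status=$?
echo "finished in time, exit status $status"       # 0, the command's own status
```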

times … fairly self-explanatory help file and output. Print the accumulated user and system times for processes run from the shell. Note it’s the process time, not the logged-in time, so it’s a sum of the time all processes ran since that shell started.

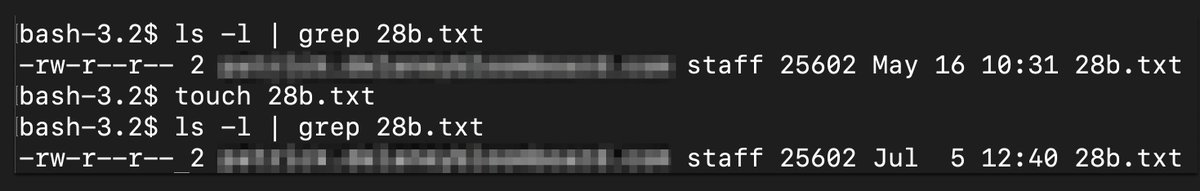

touch … is an interesting one. I thought it just created a new, blank file, which it does if the file doesn't exist, but its real job is to update a file's access and modification timestamps without changing its contents. So here’s a file I, “touched” on July 5th which was last modified May 16th.

top … is really more of a linux thing analogous to Windows Task Manager or macOS Activity Monitor: it gives a live view of the top processes (by CPU usage, by default) running on that particular machine.

traceroute … the internet is a series of routers and servers, and to get from your machine to a remote server, a signal has to pass through a variety of routers. Traceroute shows the route it takes, hop by hop, listing the IP address of each router along the way, as shown in the example below.
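A sketch of touch changing timestamps without touching the data, assuming GNU coreutils (touch -d and date -r are GNU options; BSD/macOS use different flags).

```bash
#!/usr/bin/env bash
# Sketch: touch updates timestamps, never the contents.
f=$(mktemp)
echo "contents" > "$f"
touch -d "2022-05-16 12:00" "$f"    # set access + modification time to a given date
date -r "$f" +%F                    # 2022-05-16
touch "$f"                          # no date given: timestamps become "now"
date -r "$f" +%F                    # today's date
cat "$f"                            # contents (unchanged)
```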

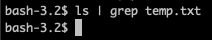

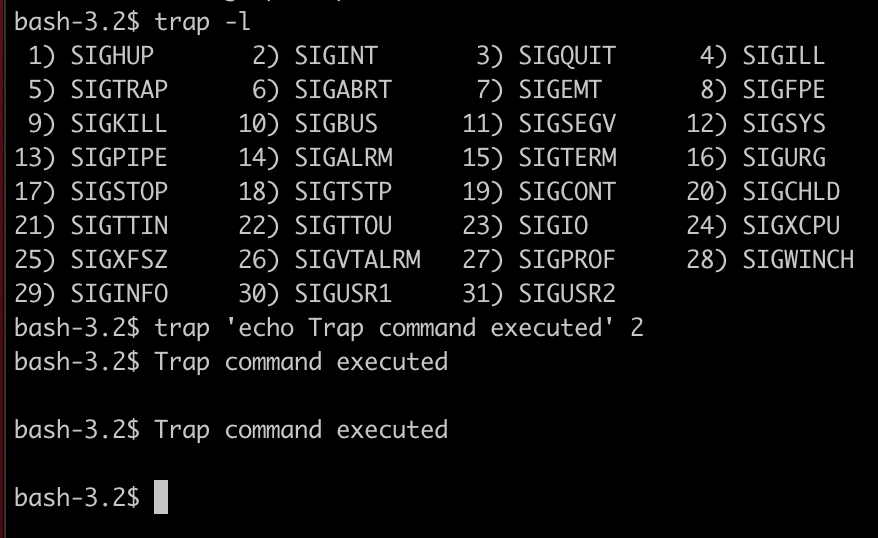

trap … Signals are software interrupts that are sent to a program to notify it of an important event, such as a memory access violation or a manual interrupt (CTRL+C). trap sets the commands the shell should run when it receives such a signal. We can list signals with trap -l

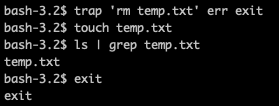

trap (continued) … so we can set a command to delete a file, “temp.txt”, upon exit. Here we set the trap to remove (rm) the file when the shell exits. Upon returning back to the original shell, we can see that the temp.txt file is gone.
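A minimal sketch of the same cleanup idea, assuming bash. The trap is set inside a subshell so the EXIT trap fires when that subshell ends and we can check the result afterwards.

```bash
#!/usr/bin/env bash
# Sketch: an EXIT trap that deletes a temp file however the (sub)shell exits.
tmp=$(mktemp)
(
  trap 'rm -f "$tmp"' EXIT     # runs when this subshell exits
  echo "scratch data" > "$tmp"
  echo "working..."
)
if [[ -e $tmp ]]; then echo "temp file still there"; else echo "temp file removed"; fi
```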

trap (continued) … So how to use these signals? Well, for example, “SIGINT” - interrupt, is 2, so if you feed in trap

trap (continued) … so in short, trap can be used to accomplish any automated task based upon a generated signal.

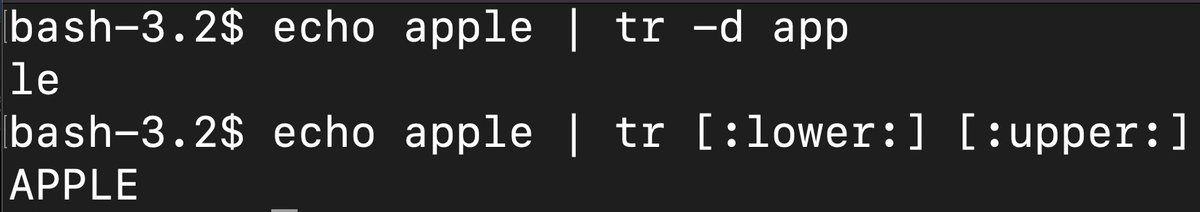

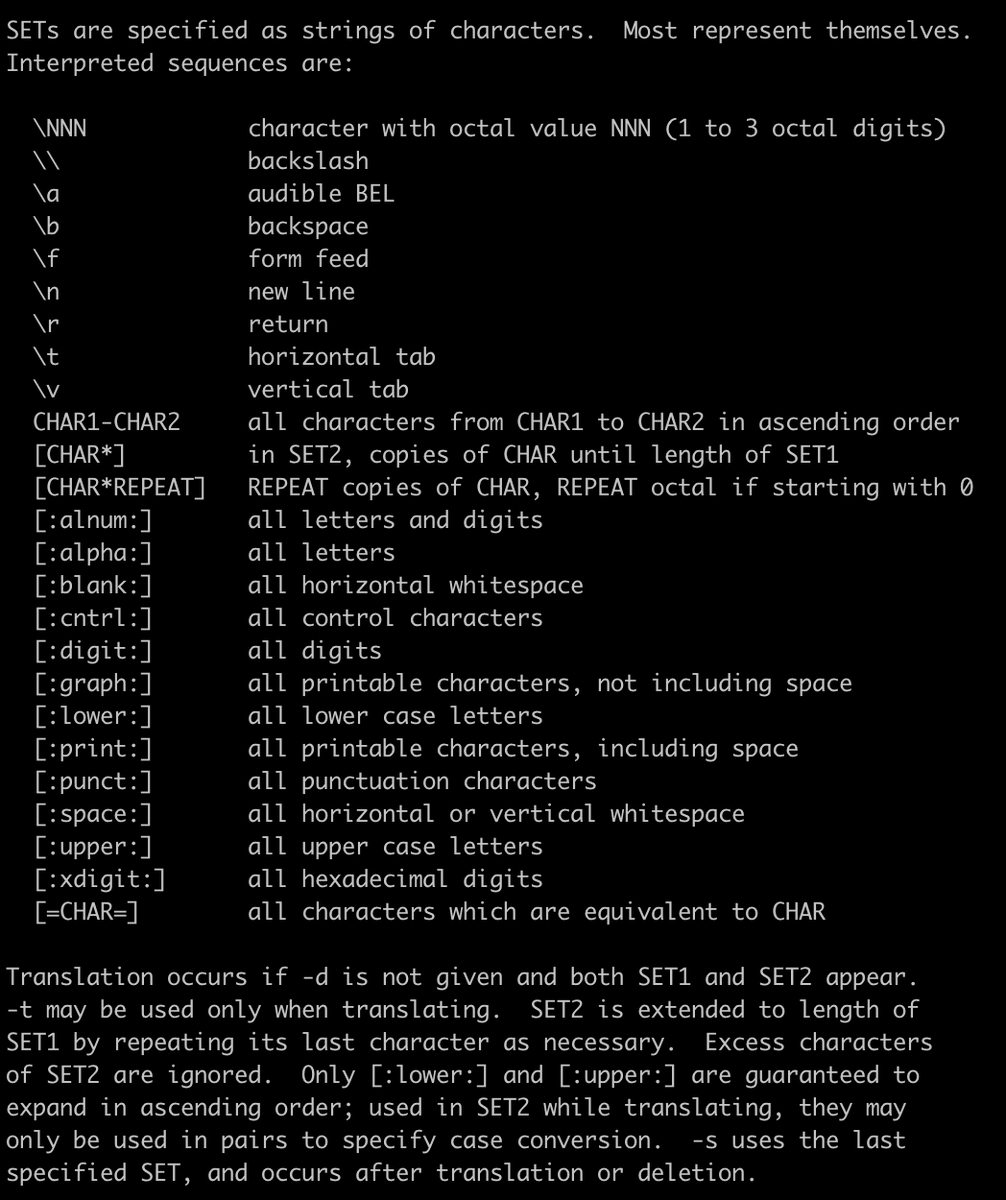

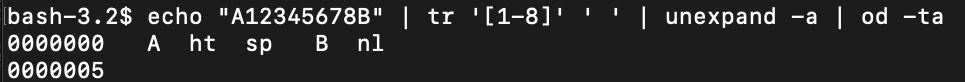
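A sketch of a trap firing on a signal, assuming bash. SIGUSR1 is used instead of SIGINT only so the demo can safely signal itself (non-interactive shells may start with SIGINT ignored); the trap syntax is the same for INT. The work happens in a child bash so the signal never reaches anything else.

```bash
#!/usr/bin/env bash
# Sketch: run a handler when a signal arrives.
signal_demo() {
  bash -c '
    trap "echo caught USR1" USR1    # install the handler
    kill -USR1 $$                   # send USR1 to this same shell ($$ = its PID)
    echo "still running afterwards"
  '
}

signal_demo
kill -l INT    # 2: kill -l turns a signal name into its number
```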

tr … translate, squeeze, or delete characters. It reads stdin and can swap one set of characters for another (e.g. turn text upper or lower case), squeeze runs of a repeated character down to one (-s), or delete characters (-d).

true … do nothing, successfully: it just exits with code 0. See, “false”.
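The three main tr modes as a sketch, assuming GNU or BSD tr; the sample strings are made up.

```bash
#!/usr/bin/env bash
# Sketch: translate, squeeze and delete with tr.
echo "hello world" | tr 'a-z' 'A-Z'        # HELLO WORLD   (translate)
echo "too    many   spaces" | tr -s ' '    # too many spaces (squeeze repeats)
echo "hello world" | tr -d 'lo'            # he wrd        (delete listed chars)
echo "phone: 555-1234" | tr -cd '0-9'      # 5551234       (-c: delete everything NOT a digit)
```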

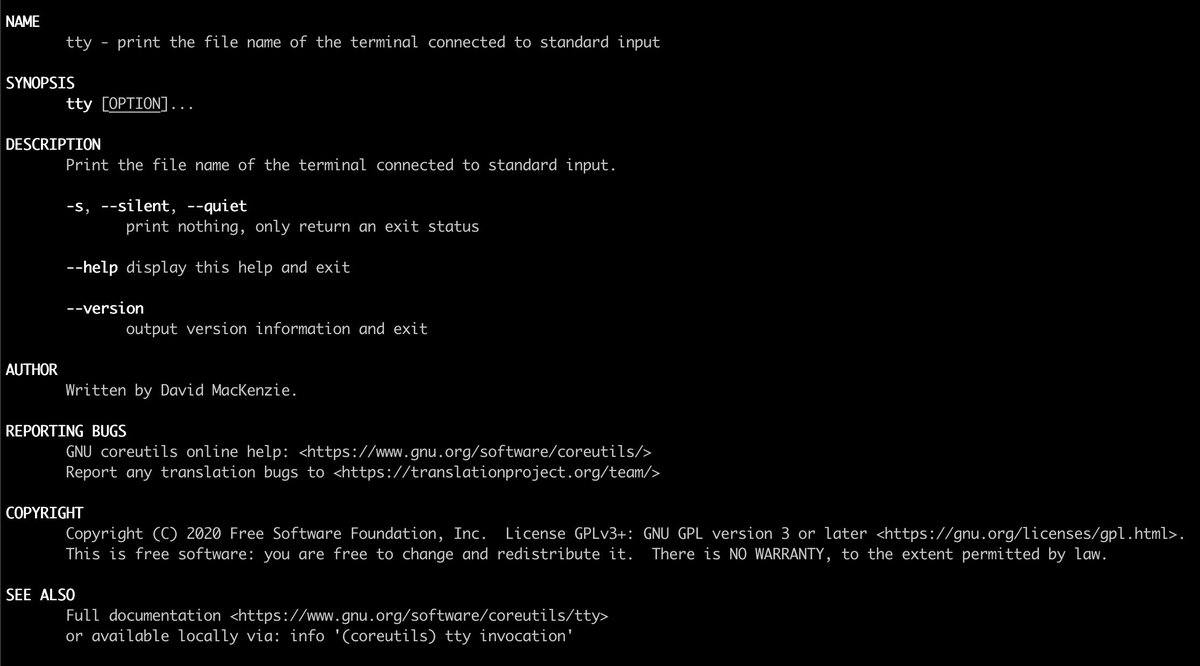

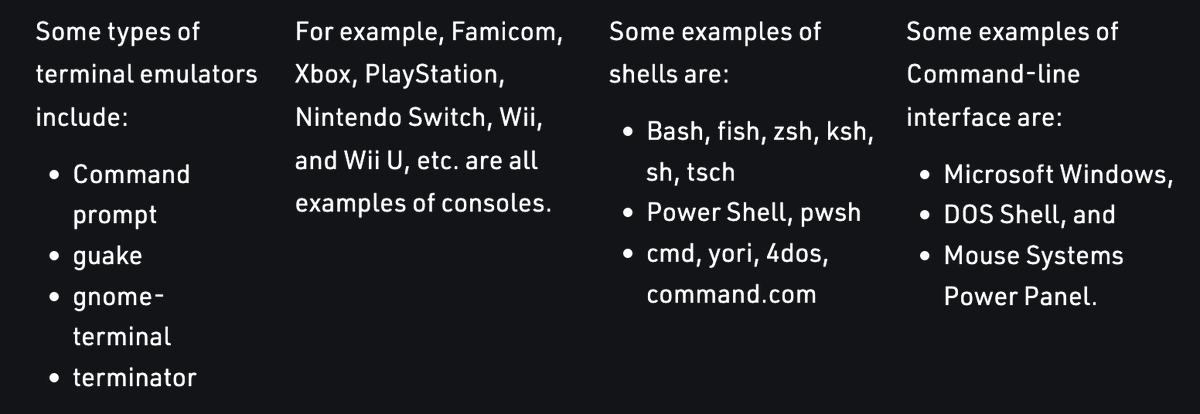

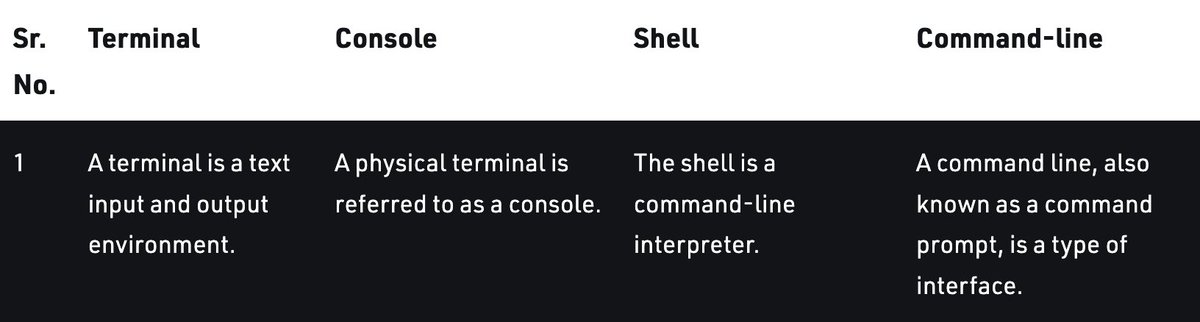

tty … print the file name of the terminal connected to standard input. Here are some of the differences between terminal, console, shell and command-line. Whereas bash is the shell at /bin/bash, the terminal is a layer down, and here it is the device file /dev/ttys005 (macOS naming; on Linux you'll typically see /dev/pts/N).

type … tells you how bash would interpret a name: as an alias, keyword, function, builtin, or a file found in $PATH (and if so, where).
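A minimal sketch of type, assuming bash; my_func is a hypothetical function defined just for the demo.

```bash
#!/usr/bin/env bash
# Sketch: how bash classifies different names.
my_func() { echo "I am a function"; }
type -t cd         # builtin
type -t if         # keyword
type -t my_func    # function
type cd            # cd is a shell builtin
type -a echo       # -a lists every match: the builtin plus any echo binaries on $PATH
```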

umount … unmount a device. In linux/unix, everything is a file, including devices: if you plug in a USB drive, it shows up as a device file (under /dev). https://www.debian.org/releases/wheezy/amd64/apds01.html.en

mount attaches the filesystem on such a device to a directory (the mount point), so its files become part of the directory tree; umount detaches it again.

unalias … the un-doing of alias. If you no longer find the inside joke command name funny, you can always take off the alias with, “unalias.”

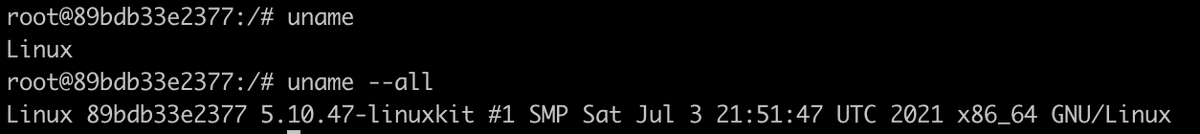

uname … prints system information. On MacOS the kernel name is Darwin; on Linux it’s Linux. You can show everything with -a (--all), or individual pieces of that with the other options uname provides.

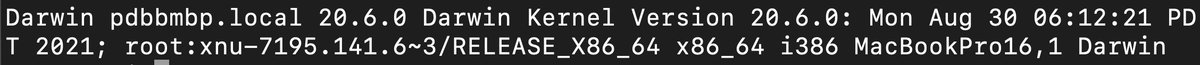

unexpand … converts runs of spaces into tabs, assuming that a tab moves the cursor to the next tabstop. The tabstop is the number of columns per tab character (8 by default). By default only leading blanks are converted.

unexpand (continued) … with unexpand itself you set the tab size with -t, e.g. unexpand -t 4 for 4 columns per tab. (Lines like “set tabsize 4; set tabstospaces” are settings for the nano editor, not unexpand options.)

unexpand (continued) … The first 8 characters, A-7 in the result are one tab interval. That leaves a space (where the 8 was) at the first tabstop, (sp in octal). The unexpand program does not add a space; that is left over after unexpand replaces the spaces in 1-7 with a tab.

unexpand (continued) … A file with many lines beginning with spaces can be much larger than one using tabs. Files with different tabstop sizes are also messy, so unexpand can be used to shrink a file or clean it up.

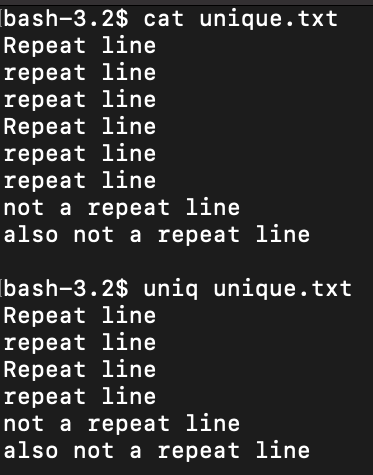

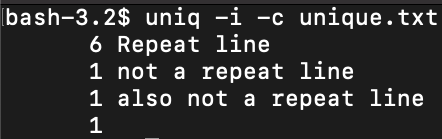
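A sketch of unexpand on a line with 8 leading spaces, assuming GNU coreutils; od -c makes the tab characters visible as \t.

```bash
#!/usr/bin/env bash
# Sketch: leading spaces become tabs.
line='        indented'                             # 8 leading spaces
printf '%s\n' "$line" | unexpand | od -c          # one \t (tab stops every 8 columns)
printf '%s\n' "$line" | unexpand -t 4 | od -c     # two \t (tab stops every 4 columns)
```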

uniq … filters out repeated lines, but only adjacent ones, so you usually sort first. With -c it prints counts of repeats, with -i it ignores differences in character case.

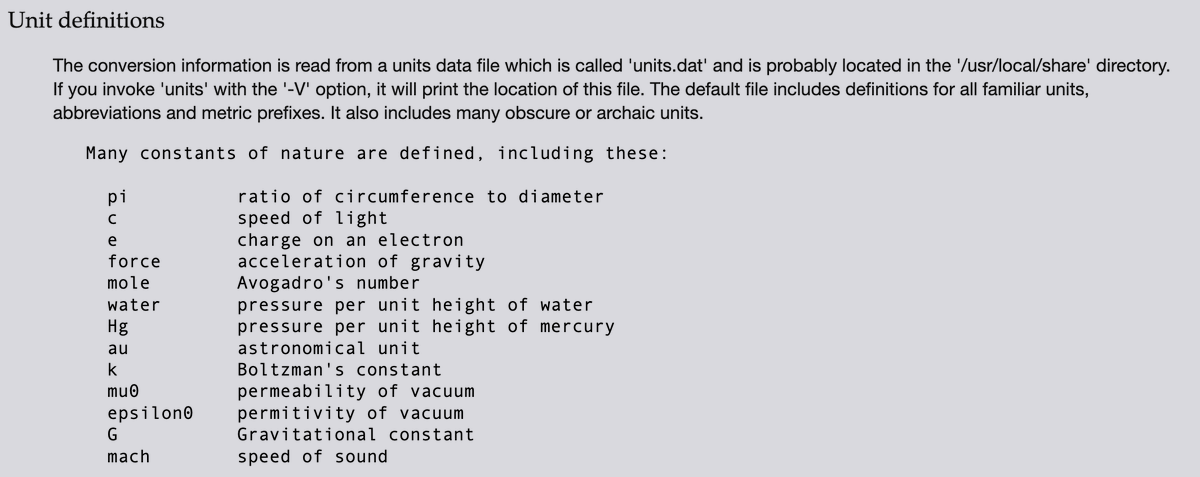

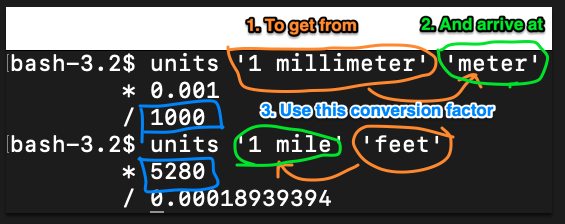
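A sketch of the “adjacent only” rule, assuming GNU or BSD uniq and sort; the word list is made up.

```bash
#!/usr/bin/env bash
# Sketch: uniq collapses only ADJACENT duplicates, so sort first.
words=$'pear\napple\npear\npear'
printf '%s\n' "$words" | uniq              # pear apple pear (the first "pear" isn't adjacent to the others)
printf '%s\n' "$words" | sort | uniq       # apple pear
printf '%s\n' "$words" | sort | uniq -c    # counts: 1 apple, 3 pear
```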

units … converts all sorts of unit measurements (yes, actual scientific unit measurements) into other units. You can use your own custom conversion file with -f or just use the database built into the units command.

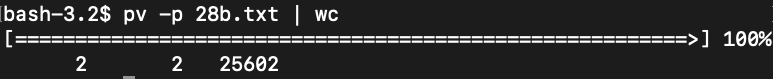

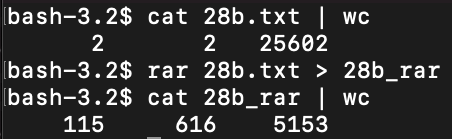

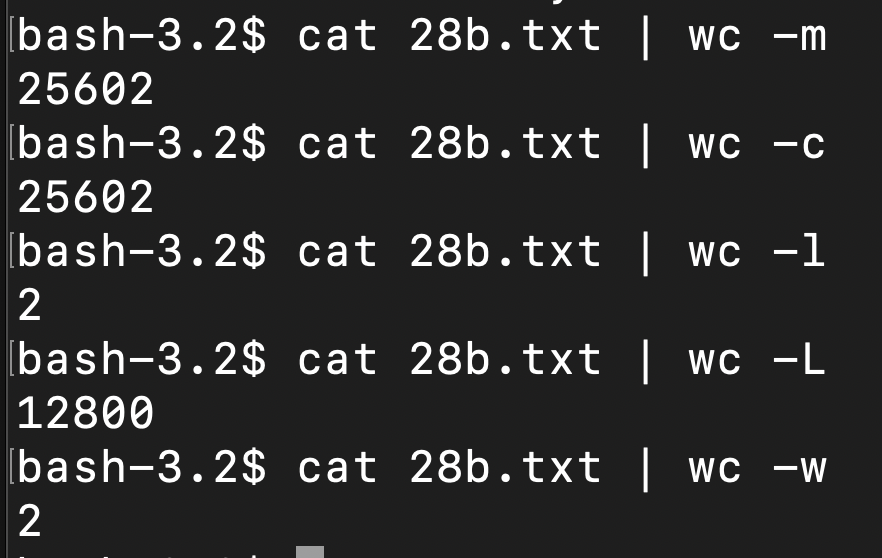

rar … another archive format, like tar, but with compression built in. Here we compressed a file from 25602 bytes (25.6 KB) down to 5.1 KB.

unrar … un-compress a rar file.

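unset … the opposite of setting a variable: it removes a variable (or, with -f, a function). To turn off a shell option that was turned on with set, you use set +o instead.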

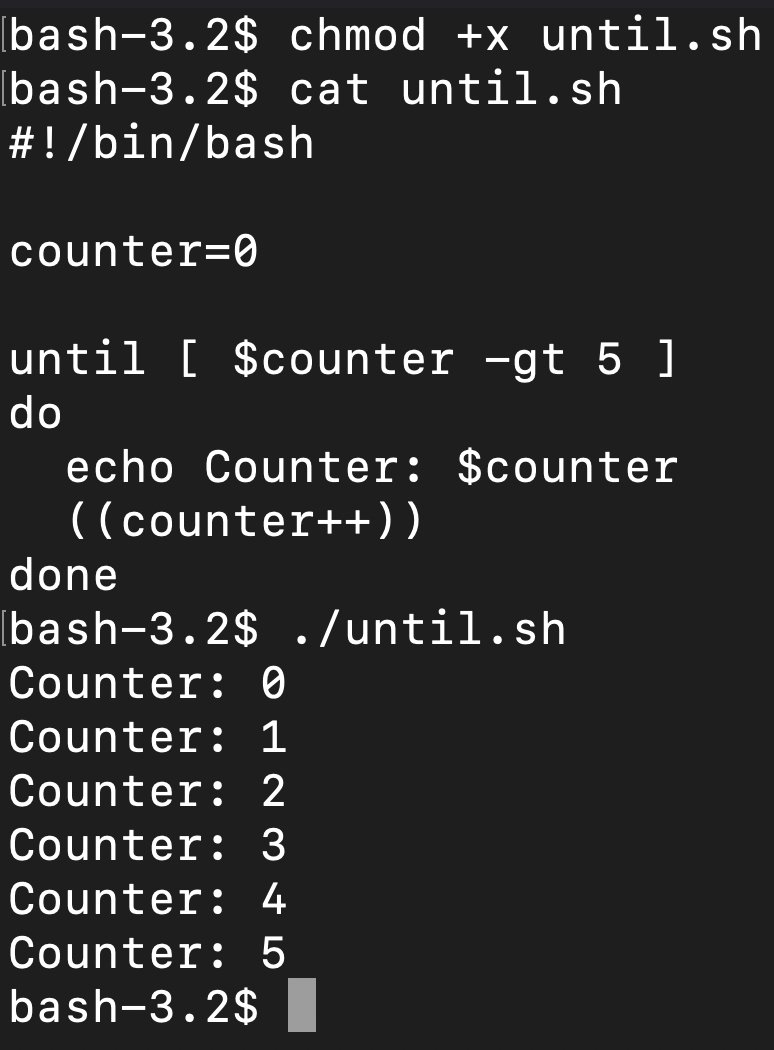

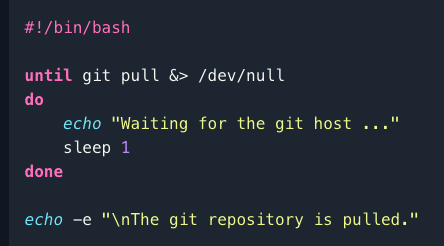
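A minimal sketch of unset, assuming bash; color and say_hi are made-up names. The last two lines show the separate mechanism for shell options.

```bash
#!/usr/bin/env bash
# Sketch: removing variables and functions with unset.
color="blue"
unset color
echo "${color:-<unset>}"           # <unset>

say_hi() { echo "hi"; }
unset -f say_hi                    # -f removes a function
type -t say_hi || echo "say_hi is gone"

set -o noclobber                   # options: turn on with set -o ...
set +o noclobber                   # ... and off with set +o
```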

until … a loop that keeps running its body until a condition becomes true, e.g., “do this until X happens.”

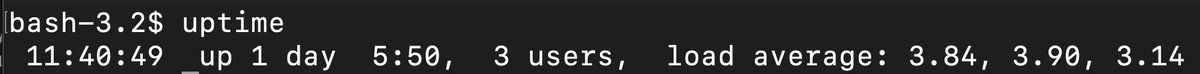
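A minimal until loop as a sketch, assuming bash; the counter is arbitrary.

```bash
#!/usr/bin/env bash
# Sketch: until runs its body while the condition is FALSE.
count=0
until (( count >= 3 )); do
  echo "count is $count"
  count=$((count + 1))
done
echo "stopped at $count"    # stopped at 3
```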

uptime … show the machine uptime

useradd / userdel … create and delete linux users.

users … show the users currently logged in.

uuencode / uudecode … are encoding tools: they turn binary data into plain ASCII text (and back), so it can be sent over channels that only handle text, such as old email systems.

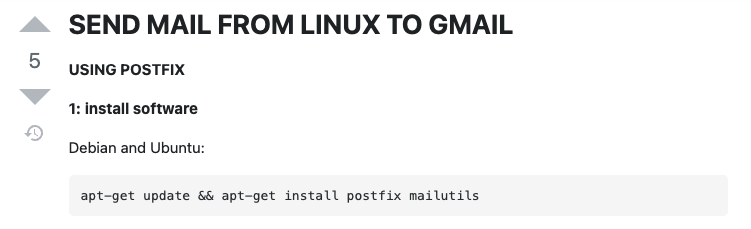

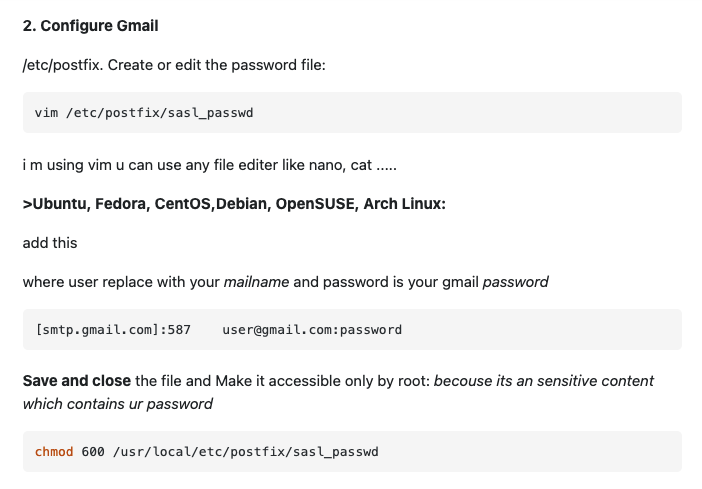

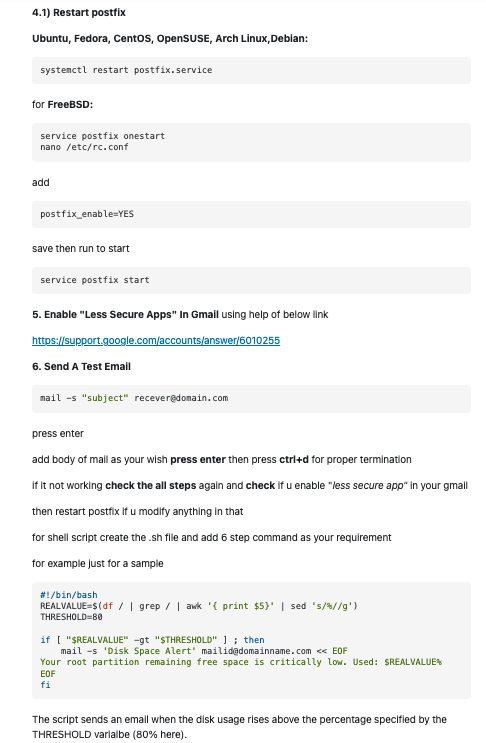

mail … I just became aware of this command now. You can send email from a bash shell. https://stackoverflow.com/questions/5155923/sending-a-mail-from-a-linux-shell-script

vdir … verbosely list directory contents

vi / vim … text editors. I’m not going to go into these because I don’t want to start a fight between these and emacs.

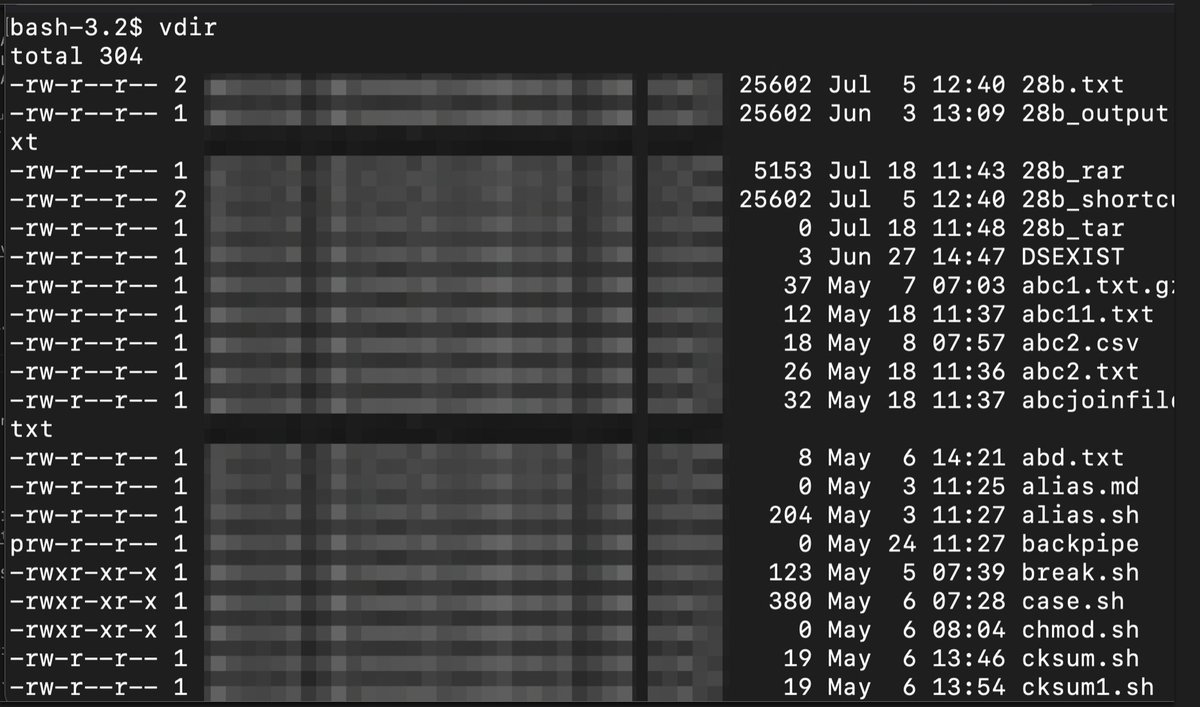

wait … waits for background jobs (processes started with &) to finish, and returns their exit status. Useful in shell scripts that start several things in parallel.

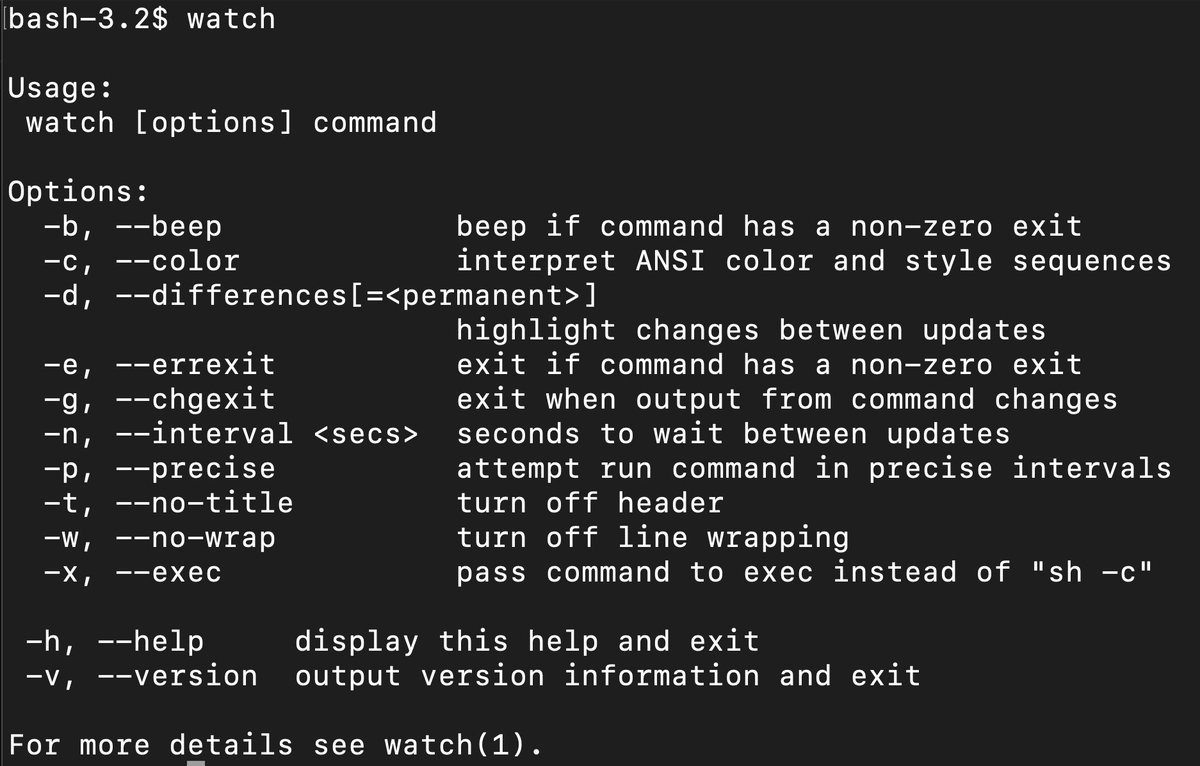
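A minimal sketch of wait collecting background jobs, assuming bash; the second job is a subshell that simply exits with status 3 to show how failures come back.

```bash
#!/usr/bin/env bash
# Sketch: start jobs with &, then wait for them and read their exit statuses.
sleep 1 &                  # job 1
pid_sleep=$!               # $! is the PID of the most recent background job
( exit 3 ) &               # job 2: fails with status 3
pid_fail=$!

wait "$pid_sleep"
echo "sleep finished with status $?"                  # 0

fail_status=0
wait "$pid_fail" || fail_status=$?
echo "second job finished with status $fail_status"   # 3
```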

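watch … runs a program periodically (every two seconds by default) and displays its output. So for example if you do, watch -b false it will beep every two seconds, because -b beeps whenever the command exits with a non-zero status, and false always does. Super annoying.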

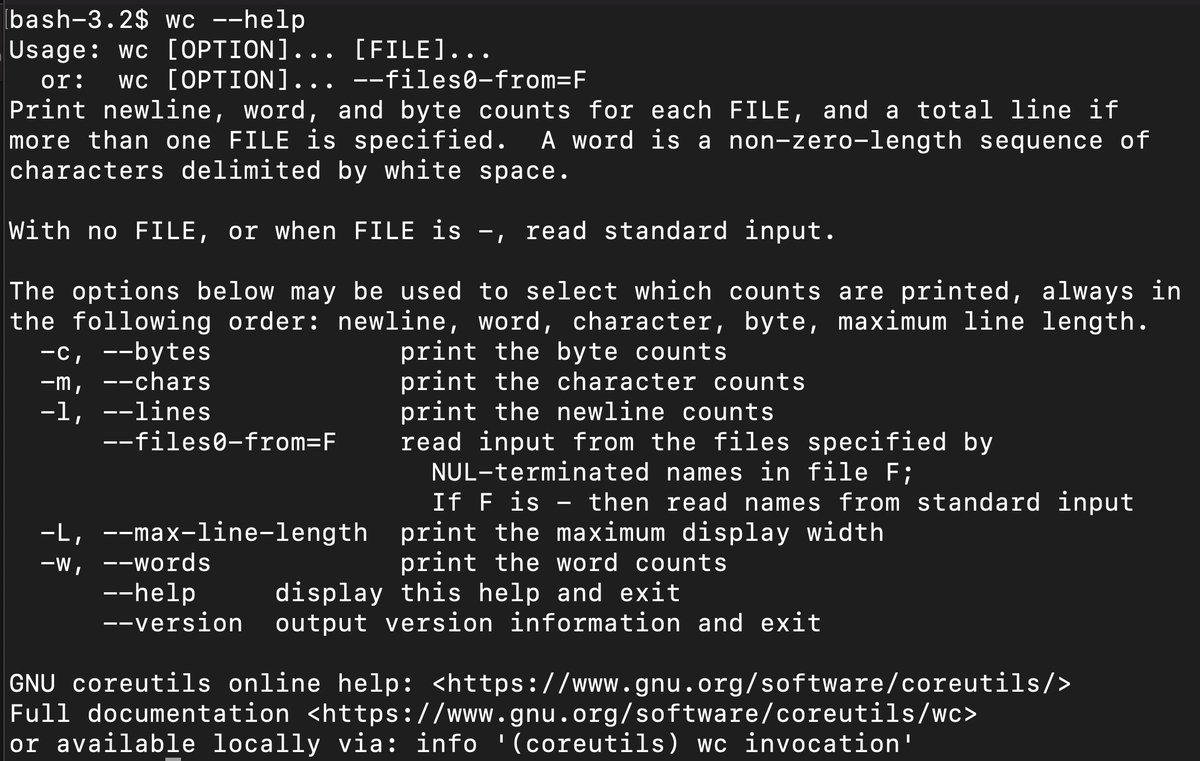

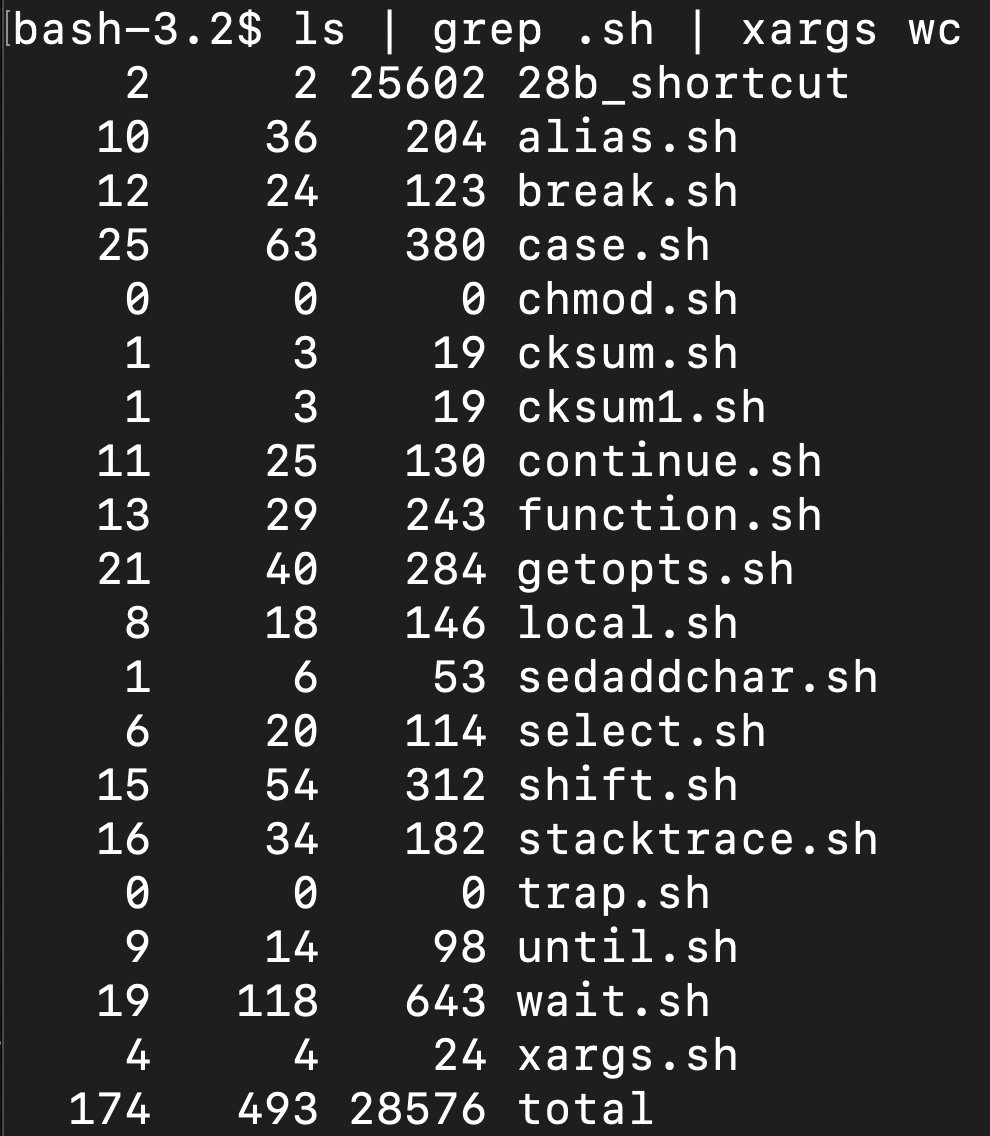

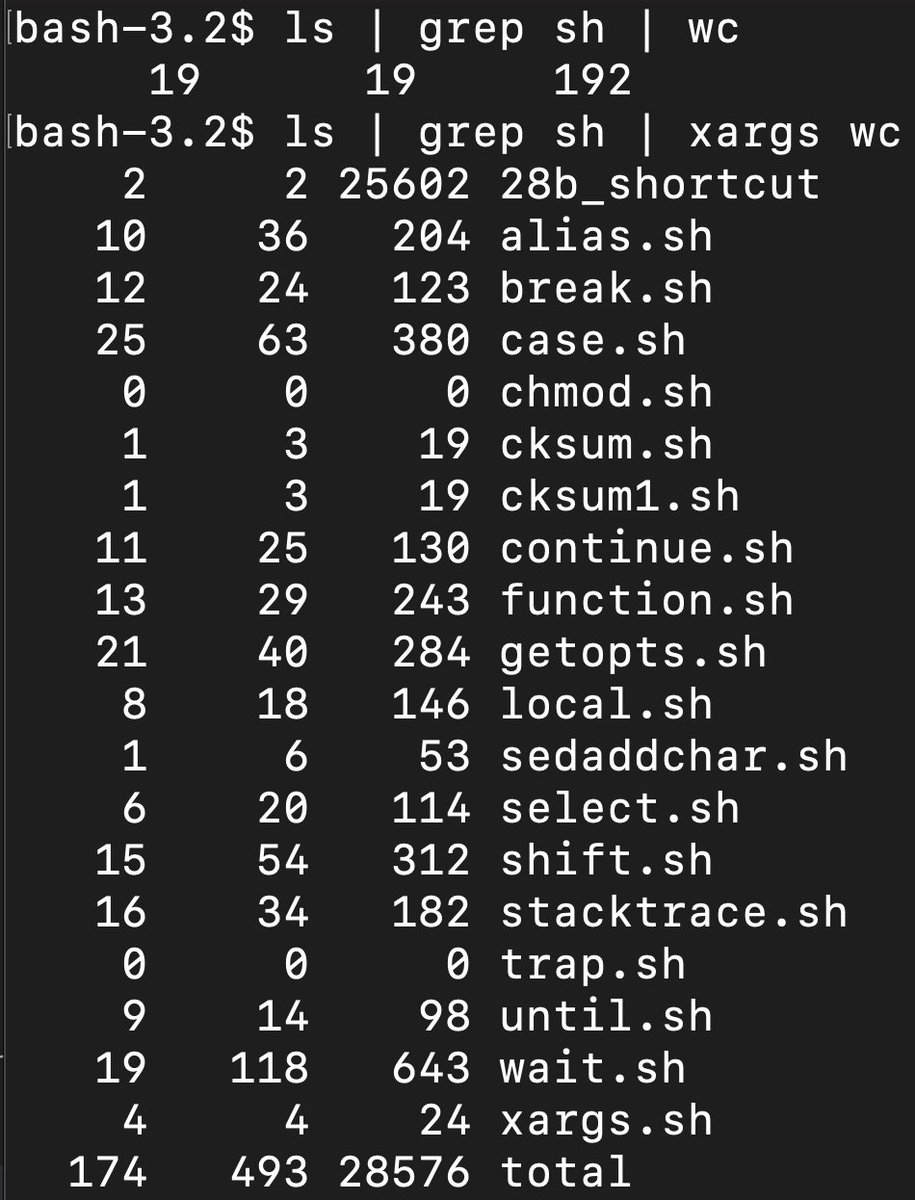

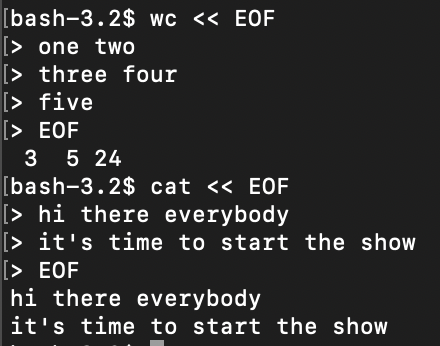

wc … very useful command, already used quite a bit in this whole thread, outputs counts of lines (-l), words (-w), characters (-m) or bytes (-c) depending upon the option used.

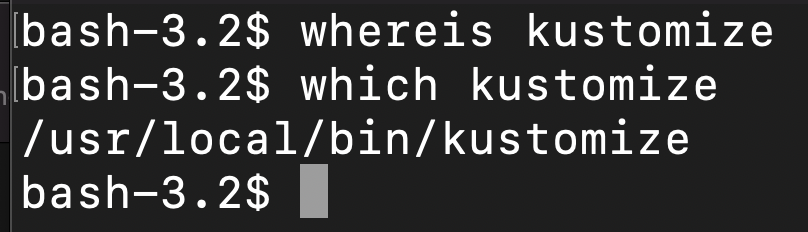
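A sketch of the main wc counts on a known string, assuming GNU or BSD wc ("one two\n" is 8 bytes and "three\n" is 6, so 14 in total).

```bash
#!/usr/bin/env bash
# Sketch: lines, words and bytes.
text=$'one two\nthree\n'
printf '%s' "$text" | wc -l    # 2  (counts newline characters)
printf '%s' "$text" | wc -w    # 3  words
printf '%s' "$text" | wc -c    # 14 bytes
```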

which / whereis … which searches the user $PATH for a program; on Linux, whereis also reports where its source and man pages live. whereis does not appear to work the same way on Unix/MacOS. Here I searched kustomize as an example.

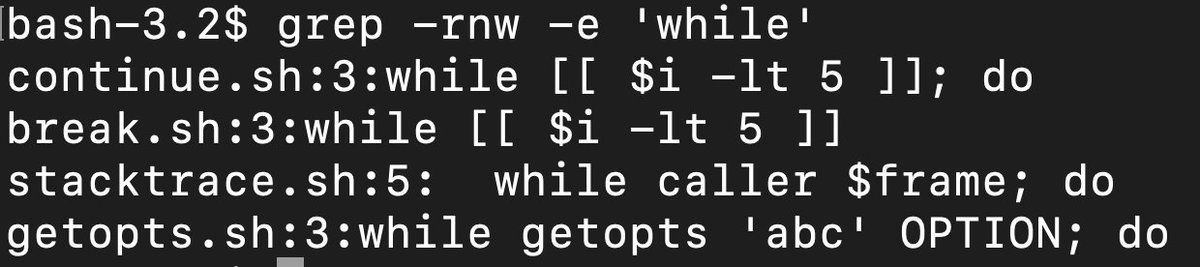

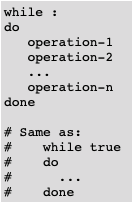

while … used in a while loop within a script. Doing a quick search to see where I have used while in this thread already, looks like I first used it on May 6th, 2022 in the, “continue” example.
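The most common while loop in scripts is probably reading input line by line; here is a sketch assuming bash. Feeding the loop with a here-string (instead of a pipe) keeps it in the current shell, so the counter n survives after the loop.

```bash
#!/usr/bin/env bash
# Sketch: process input one line at a time.
n=0
while IFS= read -r line; do    # IFS= and -r keep each line exactly as written
  n=$((n + 1))
  echo "$n: $line"
done <<< $'alpha\nbeta\ngamma'
echo "read $n lines"           # read 3 lines
```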

who … print out all of the users logged in. Different than, “users” which just prints out the names; “who” also shows each user's terminal and login time.

whoami … prints out your user name.

wget … retrieve web pages and other files from URLs via HTTP, HTTPS or FTP. This is great for downloading binaries and packages that may not be available with apt-get.

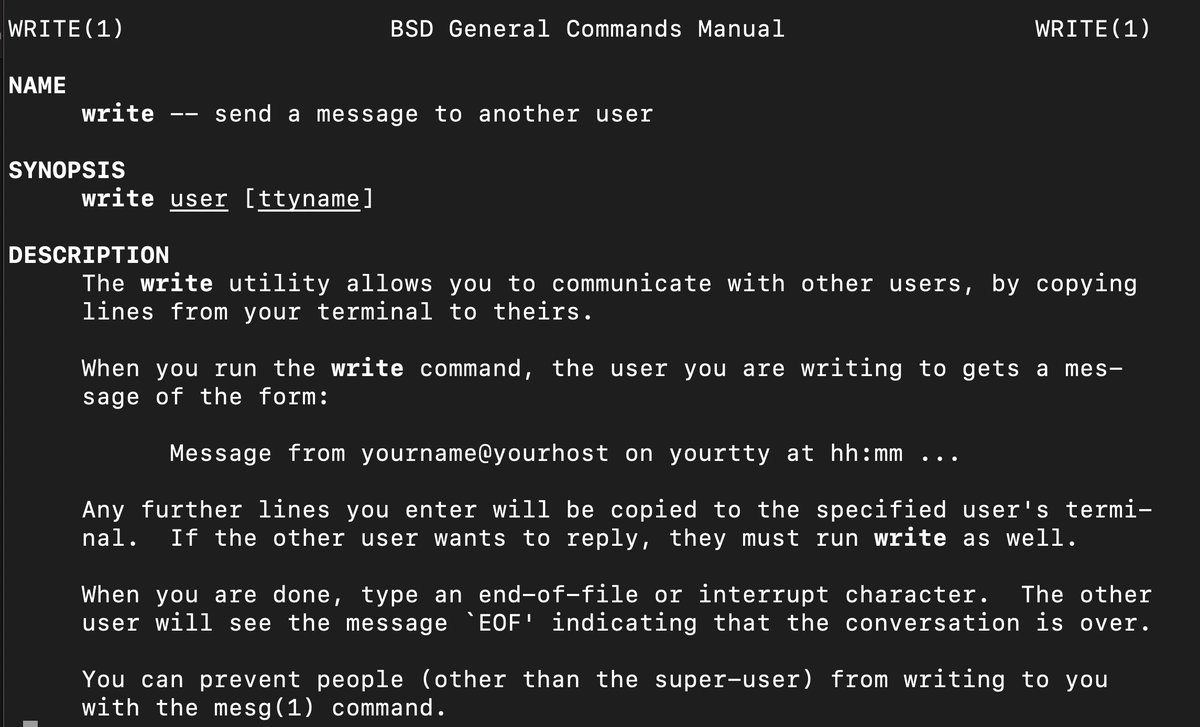

write … another old-school messaging command. Rather than going through email, it writes a message directly onto the terminal of another user logged in to the same machine. It dates back to the earliest versions of Unix (its man page traces it to Version 1 AT&T UNIX).

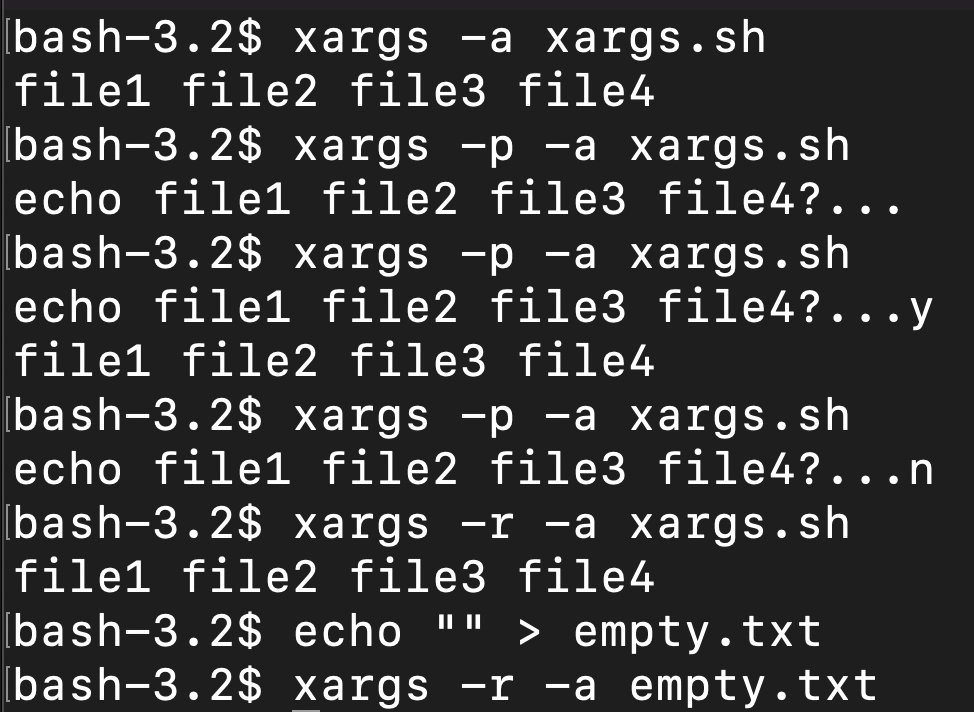

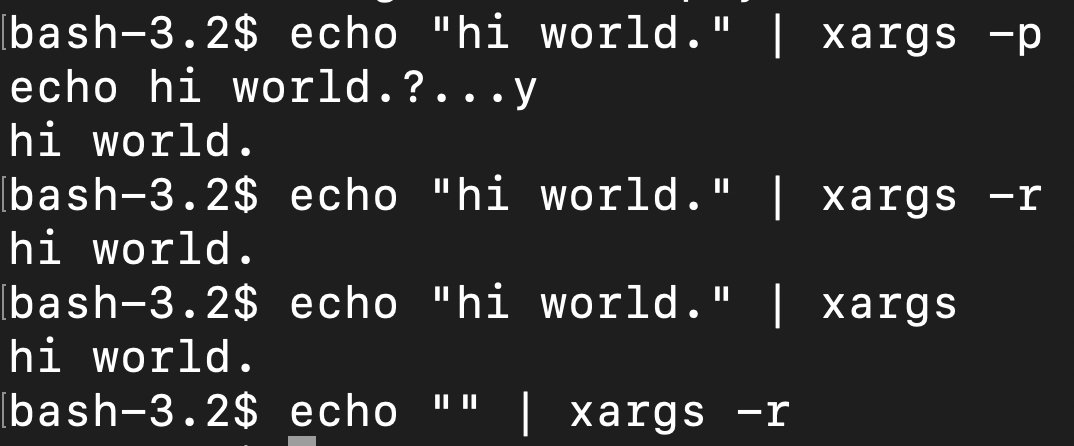

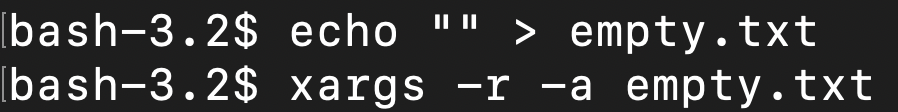

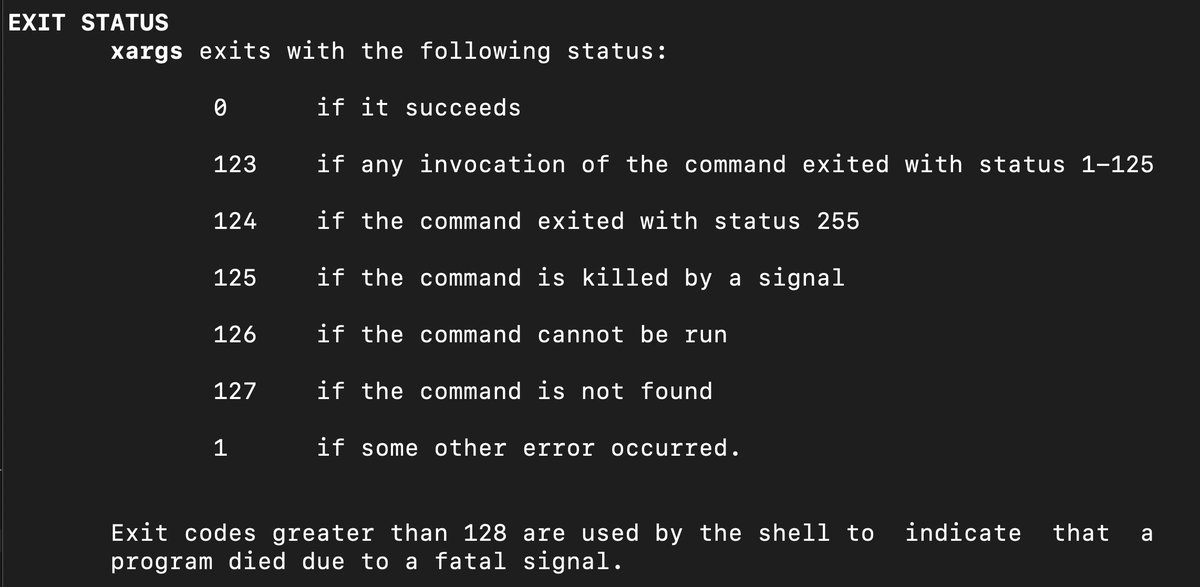

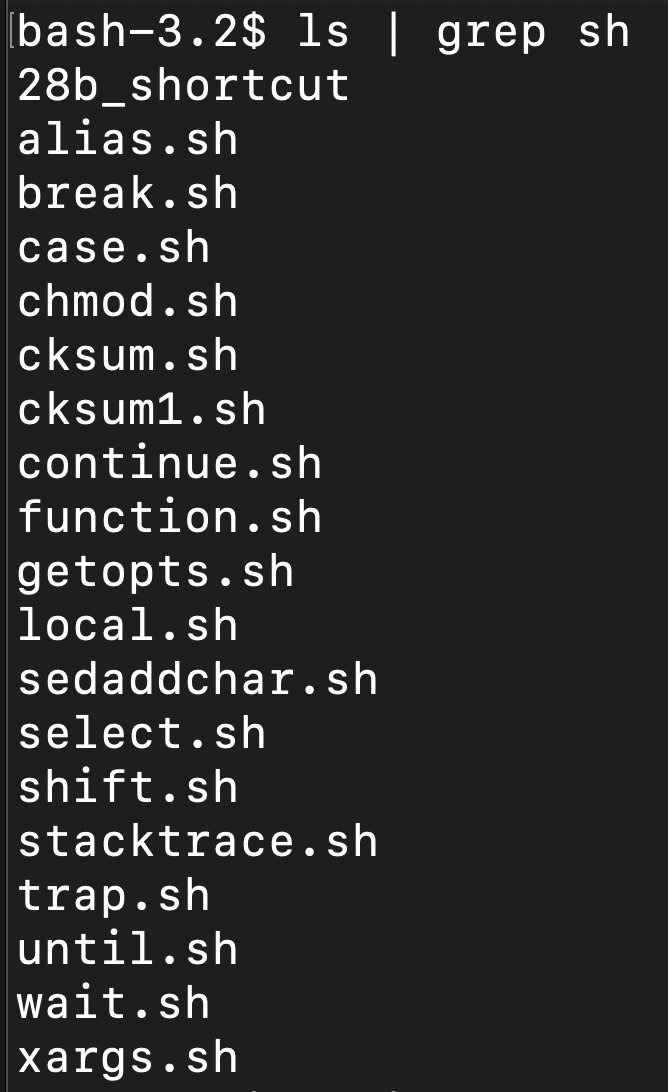

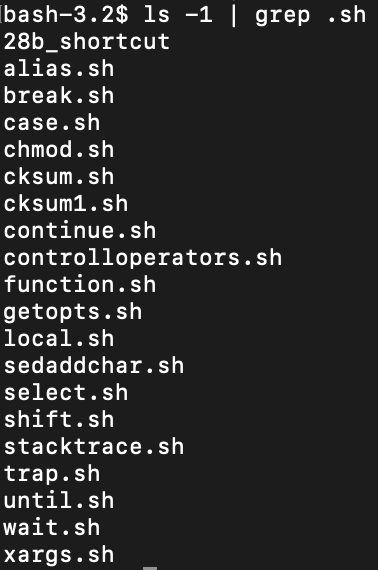

xargs … build and execute commands from standard input. The -a option can be used to read from a file instead. -p prompts the user yes/no, and -r skips running the command if the input is empty.

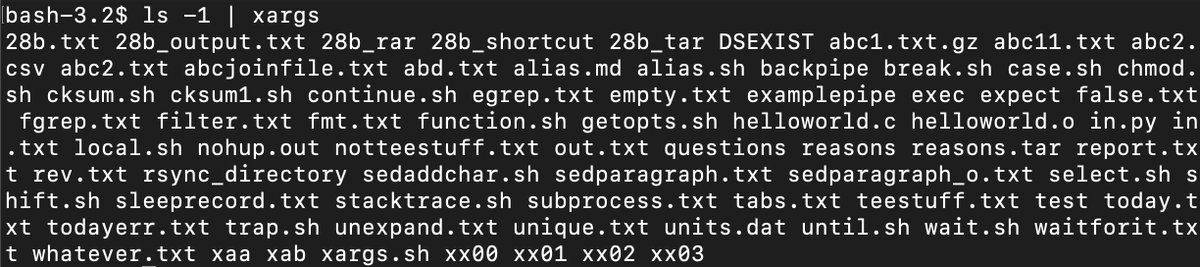

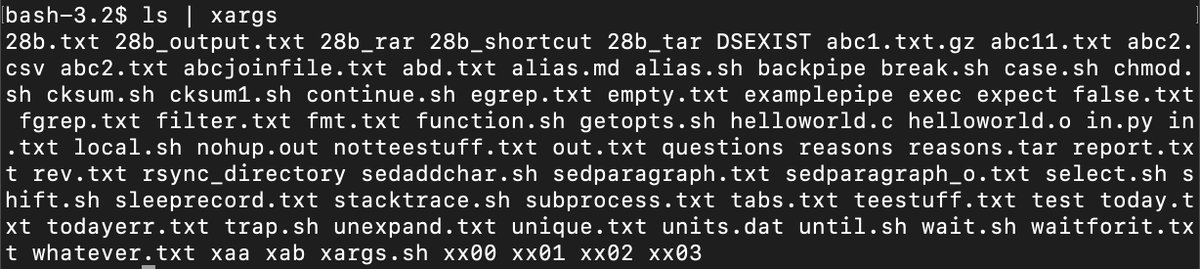

xargs (continued) … you can take the results of ls -l for example, and join that output into a single line by piping it into xargs. The output of xargs can also be used as an input directly to other commands.

xargs (continued) … the name is usually read as “extended arguments,” and it's basically a way to build arguments which are then passed to another command that actually accepts input arguments. That being said, xargs has its own exit codes to say whether it was successful.

xargs (continued) … So you have to make sure the command xargs runs is one that accepts input arguments, whether an existing command or your own bash program that uses $1, $2, etc.

xargs (continued) … to further demonstrate this, compare how grep outputs its results with how xargs does. grep prints one match per line (a column), whereas xargs (running echo by default) prints them all on one line, as a stream of arguments. So piping xargs into wc gives a different, and arguably more informative, count than piping grep into wc.

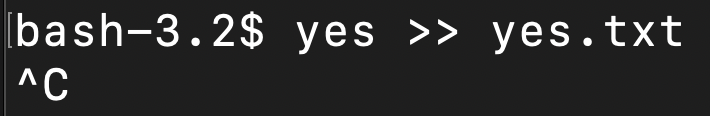

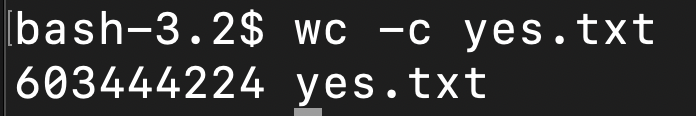

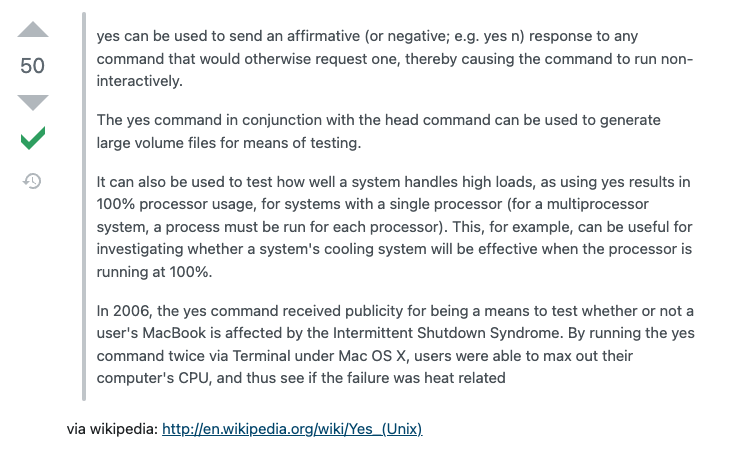
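A sketch of the main xargs patterns, assuming GNU findutils xargs (-r is a GNU option); the file names are just strings here, nothing is read from disk.

```bash
#!/usr/bin/env bash
# Sketch: turning lines of stdin into command arguments.
files=$'a.txt\nb.txt\nc.txt'
printf '%s\n' "$files" | xargs echo                  # a.txt b.txt c.txt (one echo, all args)
printf '%s\n' "$files" | xargs -n 1 echo             # one echo per argument
printf '%s\n' "$files" | xargs -I {} echo "got {}"   # {} is replaced by each input line
printf '' | xargs -r echo "never printed"            # -r: don't run at all on empty input
```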

yes … prints y over and over until interrupted. Be careful with yes: redirected into a file, it can fill up your disk space fast. I interrupted this command after 5 seconds and it had created a file of more than 603MB of just the letter y, roughly 120MB per second, so a 500GB hard drive would fill up in about 70 minutes.

yes (continued) … it can also be used for testing, e.g. maxing out a CPU core to see if there is a heat issue.
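A safe way to play with yes is to cap it with head, which closes the pipe after a few lines and makes yes stop; a sketch assuming GNU or BSD yes.

```bash
#!/usr/bin/env bash
# Sketch: bounded output from yes.
yes | head -n 3          # y, y, y, then yes stops when head closes the pipe
yes "ok" | head -n 2     # ok, ok: yes repeats whatever string you give it
```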

zip … another compression method, like tar and rar.

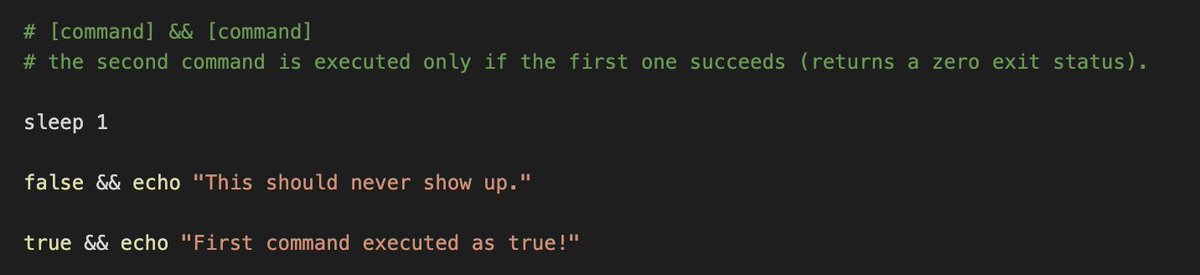

I’ve reached the end of the alphabet, so I’m going to move on to symbols, which account for the control and redirection operators … https://pubs.opengroup.org/onlinepubs/9699919799/basedefs/V1_chap03.html#tag_03_113

and https://www.gnu.org/savannah-checkouts/gnu/bash/manual/bash.html#Redirections

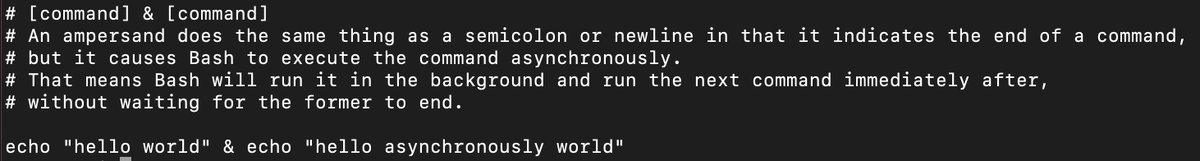

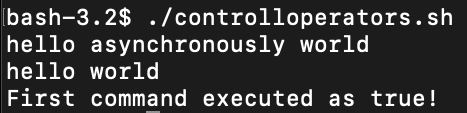

Control Operators … & && ( ) ; ;; newline | || … so starting out with &, a single ampersand causes commands to execute asynchronously (in the background).

Redirection Operators … < > >| << >> <& >& <<- <> … these perform redirection of inputs and outputs. More info: https://www.gnu.org/software/bash/manual/html_node/Redirections.html

Among the various symbols there are also: Compound Commands … { [command list]; } ( [command list] ) … https://mywiki.wooledge.org/BashSheet#Compound_Commands

Expressions … (( [arithmetic expression] )) $(( [arithmetic expression] )) [[ [test expression] ]] … https://mywiki.wooledge.org/BashSheet#Expressions

Special Parameters … 1,2 * @ # ? - $ ! _ … https://mywiki.wooledge.org/BashSheet#Special_Parameters

Parameter Operations … “$var”, “${var}” there are a lot of these … https://mywiki.wooledge.org/BashSheet#Parameter_Operations

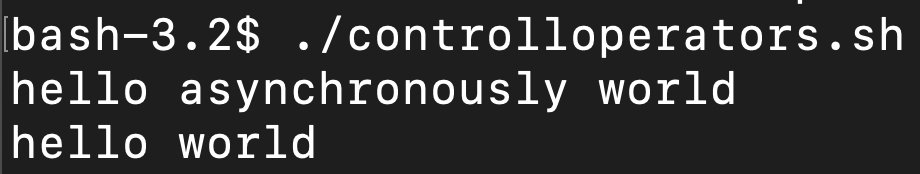

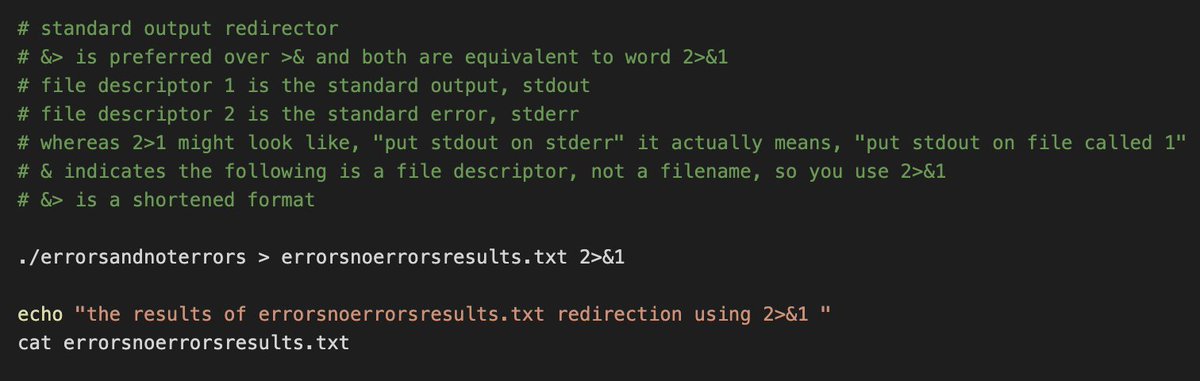

To understand Control Operators, we can go through and review how they each behave, but basically they control the sequence of command execution. Redirection Operators deal with the inputs and outputs to the terminals, as well as to and from files. About inputs/outputs:

So we covered &, which runs a command asynchronously, in the background, so the shell doesn't wait for it. Moving on, && … means only execute the next command if the previous command was successful (exit status 0).

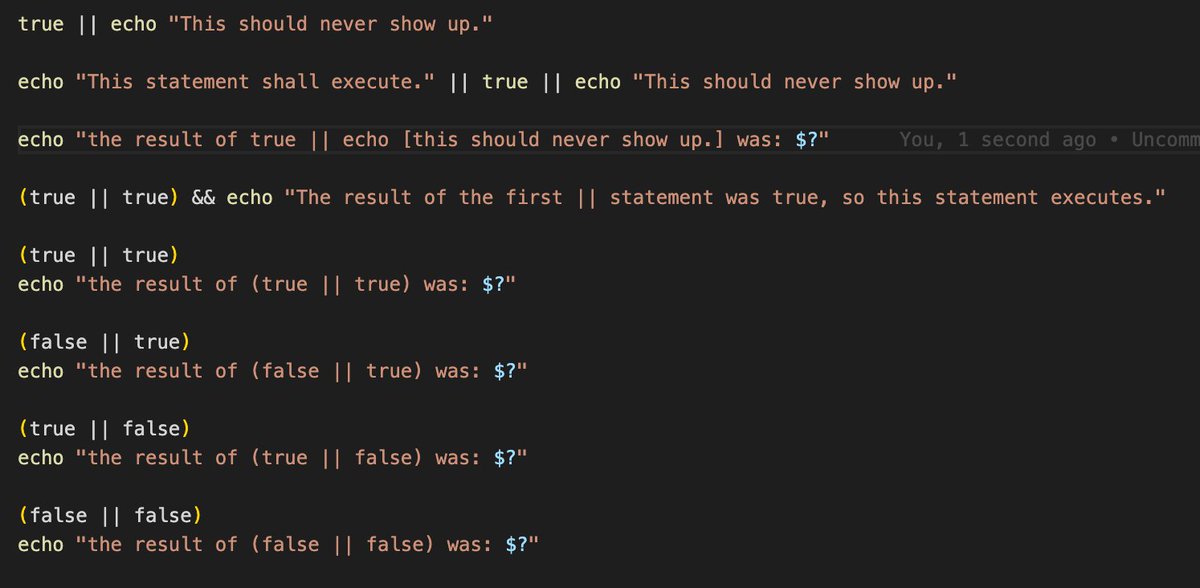

`||` … is a logical OR operator, but it’s a short-circuit OR evaluated left to right: per the bash spec, if the first command succeeds (exit status 0), the next one will not execute. It’s important not to look at it purely as a mathematical expression, but as an operator on exit statuses. https://unix.stackexchange.com/questions/632670/the-paradox-of-logical-and-and-or-in-a-bash-script-to-check-the-succes

`|` … also known as the pipe, takes the standard output (stdout, or 1) of one command and feeds it into the next command as its input (stdin, or 0). This has already been used a lot in this guide.

; … semicolon is the bulldozer of control operators: the next command executes no matter the exit status of the previous one, in order, one after the other (not asynchronously).

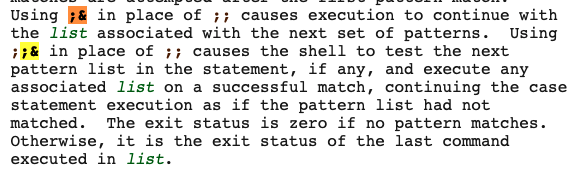
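A sketch of how &&, || and ; decide whether the next command runs, assuming bash; operators_demo is a hypothetical wrapper so the output can be checked.

```bash
#!/usr/bin/env bash
# Sketch: control operators and exit statuses.
operators_demo() {
  true  && echo "&& ran because the previous command succeeded"
  false && echo "never printed"
  false || echo "|| ran because the previous command failed"
  true  || echo "never printed"
  false ; echo "; runs no matter what came before"
}

operators_demo
```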

;; … double semicolon, from the man pages, is used at the end of each clause in a, “case” statement to create a hard stop: once a pattern matches and its commands run, no further patterns are tested. https://www.man7.org/linux/man-pages/man1/bash.1.html

;& and ;;& … note from the above tweet, you can build complex logic in case statements with these tools.

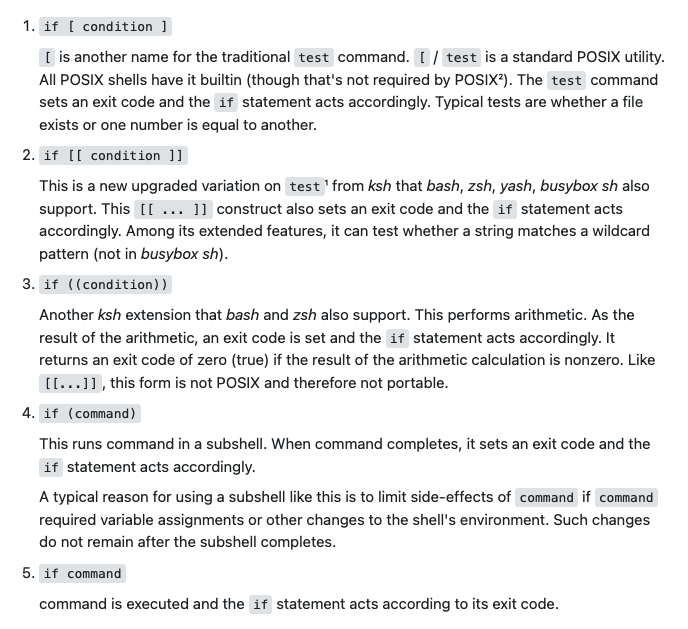
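A sketch of the three case terminators, assuming bash 4 or newer (;& and ;;& don't exist in older bash or in POSIX sh); describe and fallthrough are hypothetical function names.

```bash
#!/usr/bin/env bash
# Sketch: ;; stops, ;;& keeps testing patterns, ;& falls through.
describe() {
  case "$1" in
    a*) echo "starts with a" ;;&       # ;;& keep testing the remaining patterns
    *e) echo "ends with e" ;;          # ;;  stop here
    *)  echo "matched the catch-all *" ;;
  esac
}
describe apple      # starts with a / ends with e
describe apricot    # starts with a / matched the catch-all *
describe kiwi       # matched the catch-all *

fallthrough() {
  case "$1" in
    start)  echo "starting" ;&         # ;& run the next clause WITHOUT testing its pattern
    middle) echo "in the middle" ;;
  esac
}
fallthrough start   # starting / in the middle
```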

[ ] ( ) [[ ]] (( )) … Basically if you see a double, it’s an extended bash feature, whereas the single is the original /bin/sh feature. [ ] is the test command and ( ) runs a list of commands in a subshell; [[ ]] is bash's extended test expression and (( )) evaluates arithmetic, and both set an exit code you can use in logic. https://unix.stackexchange.com/questions/306111/what-is-the-difference-between-the-bash-operators-vs-vs-vs

… as mentioned in the above tweet, the doubles are for expressions, either test expressions or arithmetic expressions.

… whereas the singles are for commands.
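A side-by-side sketch of the single and double forms, assuming bash; the variable names are made up.

```bash
#!/usr/bin/env bash
# Sketch: [ ], [[ ]], (( )), $(( )) and ( ).
fruit="apple"
[ "$fruit" = "apple" ] && echo "[ ] is the test command (POSIX sh)"
[[ $fruit == a* ]] && echo "[[ ]] adds pattern matching (bash)"
(( 2 + 3 == 5 )) && echo "(( )) does integer arithmetic (bash)"
echo "$(( 6 * 7 ))"       # $(( )) substitutes the arithmetic result: 42

start=$PWD
( cd / )                  # ( ) runs its commands in a subshell...
[ "$PWD" = "$start" ] && echo "...so the cd did not change this shell's directory"
```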

So that covers the control statements, next are redirection operators.

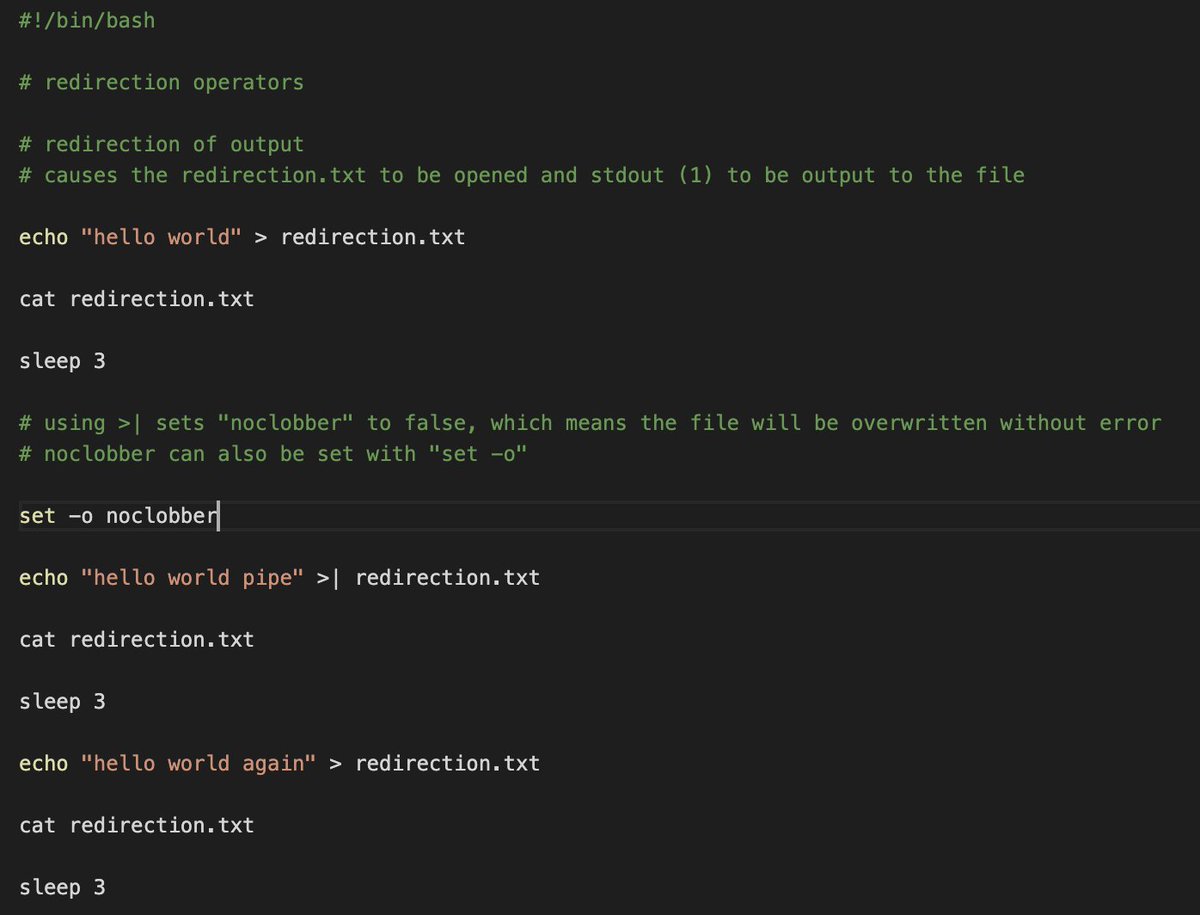

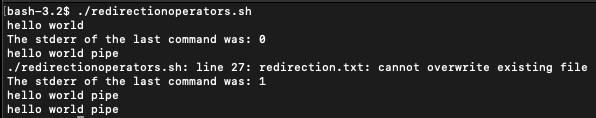

`>|` vs `>` … these redirect stdout (1) of a command to a file. You can do set -o noclobber to prevent files from being overwritten by >. If you use >| it overrides that setting, while > will follow whatever the setting is.

`>|` vs `>` (continued) … attempting to overwrite an existing file with > while noclobber is on results in an error and an exit status of 1, whereas using >| succeeds with an exit status of 0 (no error).

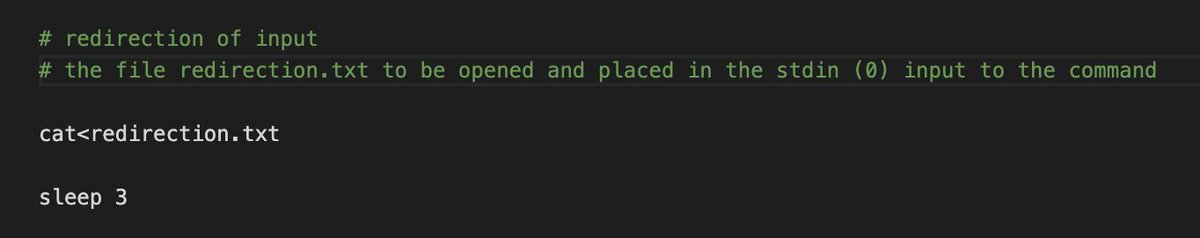

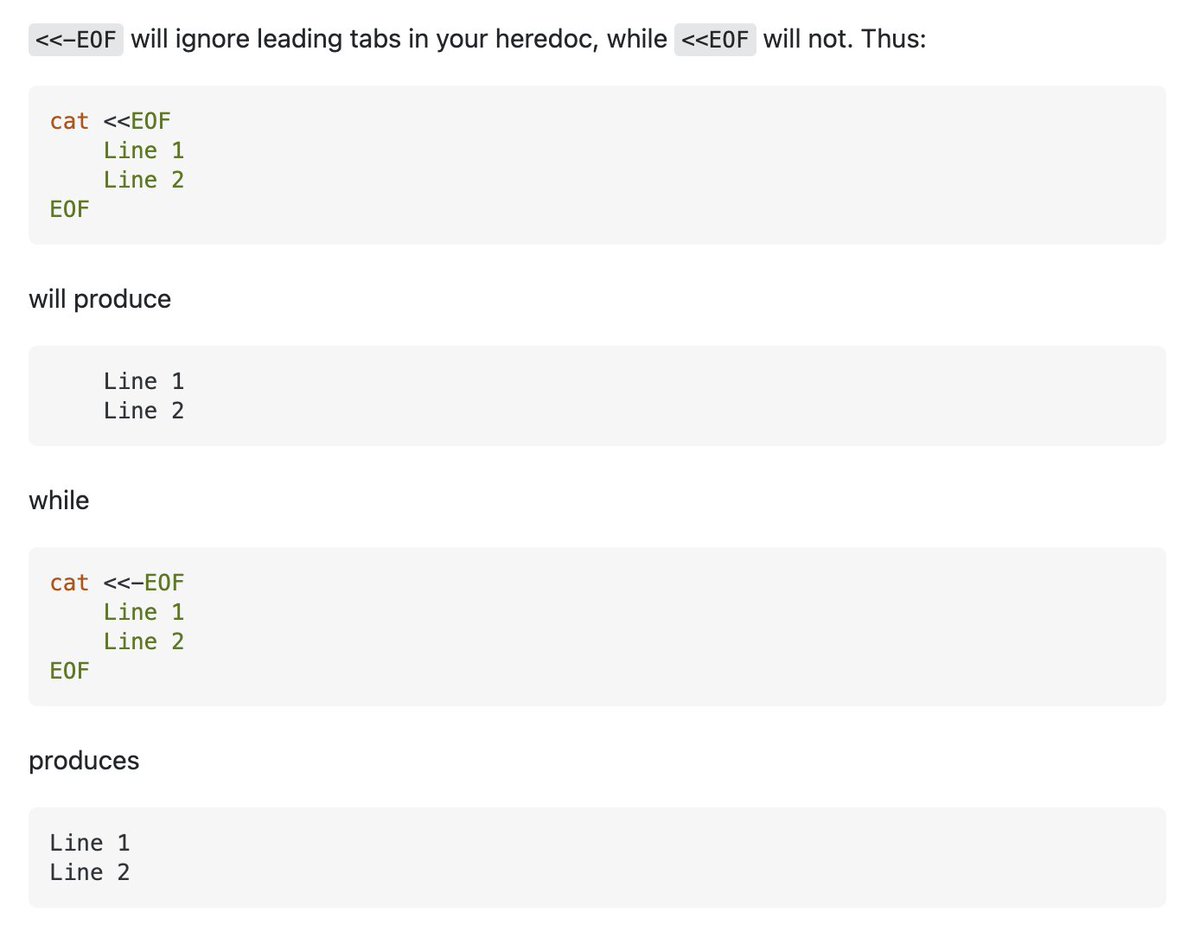
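A sketch of noclobber in action, assuming bash; the option is set inside a subshell so it doesn't stick around afterwards, and noclobber_demo is a hypothetical name.

```bash
#!/usr/bin/env bash
# Sketch: noclobber makes > refuse to overwrite; >| overrides it.
noclobber_demo() {
  local f
  f=$(mktemp)                          # an existing (empty) file
  (
    set -o noclobber                   # only affects this subshell
    if { echo "second" > "$f"; } 2>/dev/null; then
      echo "> overwrote the file"
    else
      echo "> refused (exit status 1)"
    fi
    echo "third" >| "$f" && echo ">| overwrote it anyway"
  )
  cat "$f"                             # third
  rm -f "$f"
}

noclobber_demo
```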

< … the contents of a file get pushed into the input (stdin, 0) of a command.

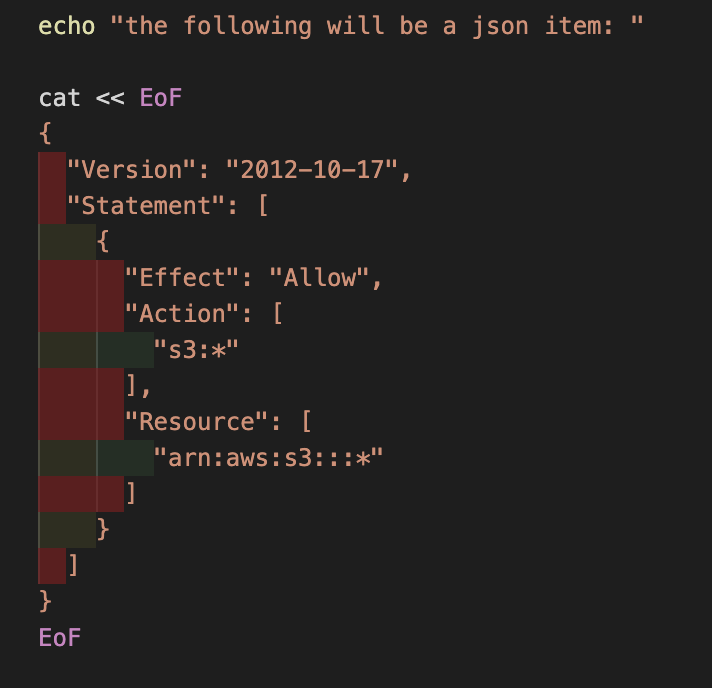

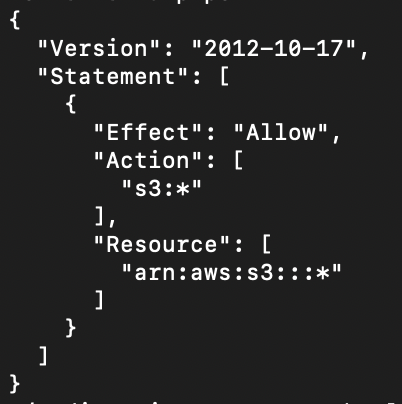

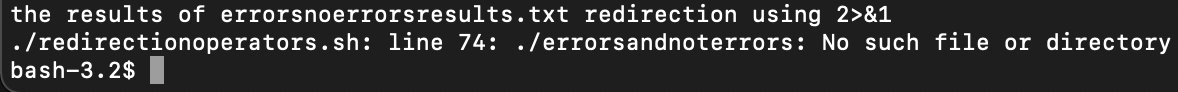

`<<` … known as a here-document: the lines that follow are fed to the command's input until a line containing only the delimiter, such as “EOF”, is seen. This can be done in a script as well as in interactive mode.

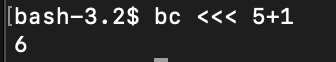

`<<<` … here-string pushes a string into a program's input. Instead of typing in text, you give a pre-made string of text to the program, so bc <<< '5+1' is an alternative to doing echo '5+1' | bc
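A sketch of here-documents and here-strings, assuming bash (<<< is not POSIX). tr and read stand in for bc, since bc isn't installed everywhere.

```bash
#!/usr/bin/env bash
# Sketch: << (here-document) and <<< (here-string).
name="world"

# Everything up to the line containing only EOF becomes cat's stdin.
cat <<EOF
Hello, $name
Variables are expanded in an unquoted here-document.
EOF

# Quoting the delimiter turns expansion off.
cat <<'EOF'
Hello, $name (printed literally)
EOF

# Here-string: one string becomes the command's stdin.
tr 'a-z' 'A-Z' <<< "hello"           # HELLO
read -r first rest <<< "alpha beta gamma"
echo "$first"                        # alpha
```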

&> … long explanation, but &> file is essentially the same as > file 2>&1: it sends both stdout and stderr (errors) to the file, so errors can be stored and reviewed later. &> is the preferred form of >&

`>>` … this one is pretty simple: append. It does the same thing as > but rather than overwriting the file completely, it adds whatever you send into it to the end of the file.

`<<-` … this is similar to a here-document, but it strips leading tab characters from the input lines (and from the delimiter line), so here-documents can be indented in scripts.
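A sketch of &> and >> together, assuming bash (&> is a bash extension); the log file is temporary.

```bash
#!/usr/bin/env bash
# Sketch: &> captures stdout and stderr together; >> appends.
log=$(mktemp)
{ echo "to stdout"; echo "to stderr" >&2; } &> "$log"   # same as: > "$log" 2>&1
echo "appended line" >> "$log"                          # >> adds to the end; > would overwrite
cat "$log"
# to stdout
# to stderr
# appended line
```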

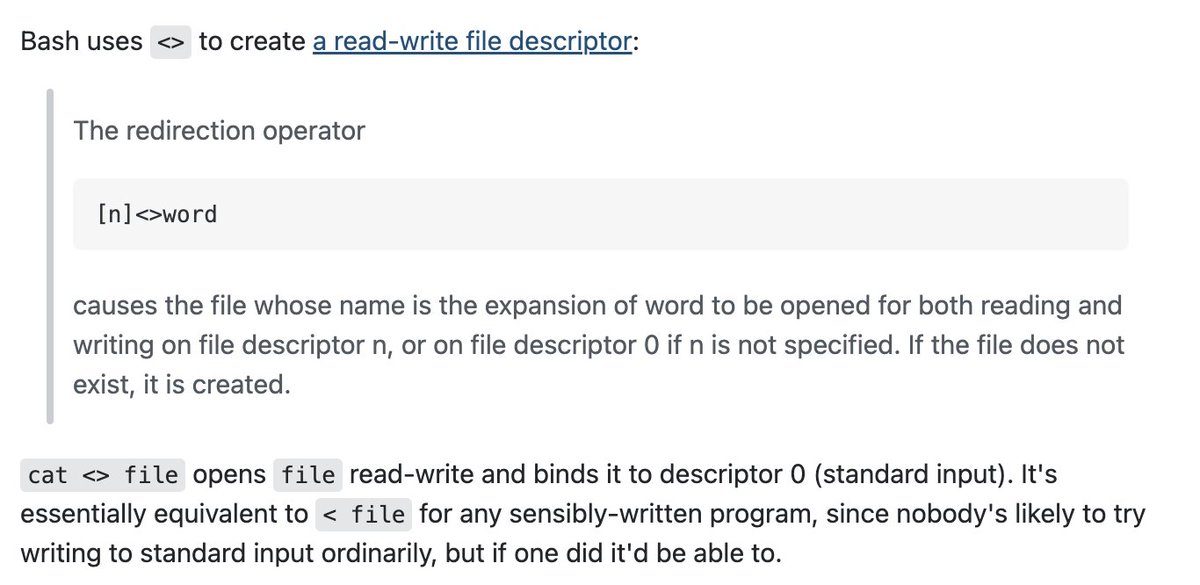

<> … opens a file for both reading and writing; unlike <, it creates the file if it doesn't exist, so it works where < would fail, as shown. From stack overflow, a possible use case is listed.

Now, on to special parameters in bash 1,2 * @ # ? - $ ! _ …
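A sketch of <> creating a file that doesn't exist yet, assuming bash; file descriptor 3 and the file name are arbitrary choices for the demo.

```bash
#!/usr/bin/env bash
# Sketch: <> opens a file read/write, creating it if missing.
dir=$(mktemp -d)
target="$dir/new.txt"          # does not exist yet; "< $target" would fail here

exec 3<> "$target"             # open fd 3 for reading and writing; creates the file
echo "written through fd 3" >&3
exec 3>&-                      # close fd 3

cat "$target"                  # written through fd 3
```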

- (aside) … a lot of these special characters are described here: https://tldp.org/LDP/abs/html/special-chars.html

`:` … the null command: it does nothing and returns exit status 0, similar to, “true” – can be used in logic.

`*` … the catch-all character, or wildcard, which means, “anything.” For example, we can use it in grep to catch anything with certain leading characters.

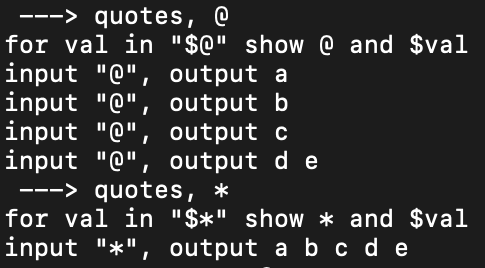

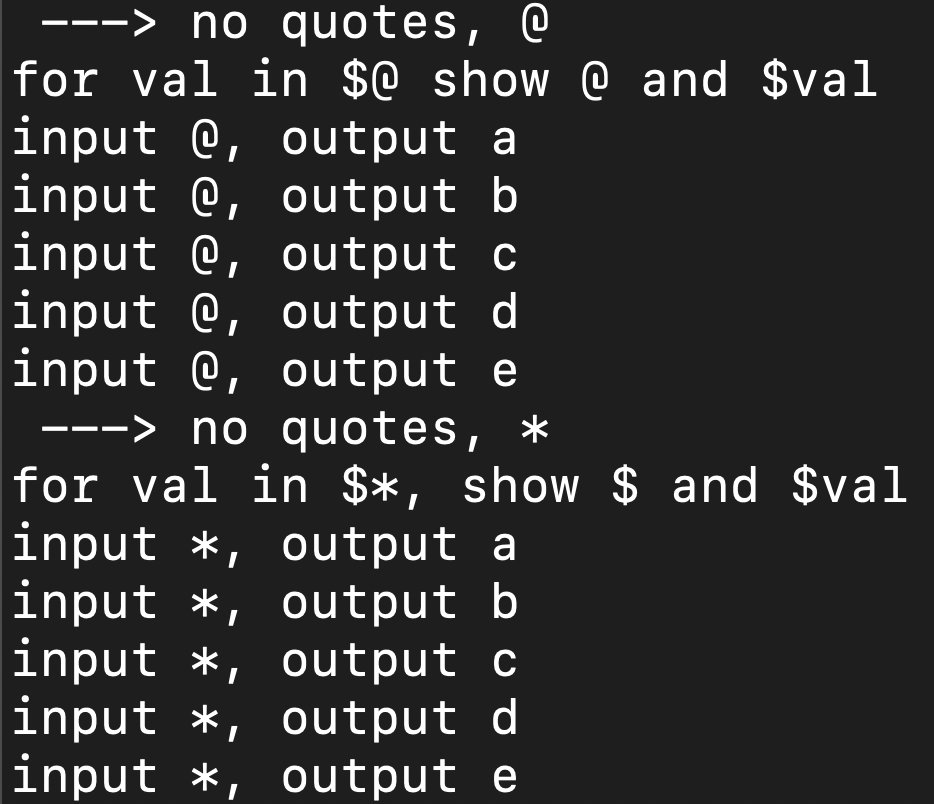

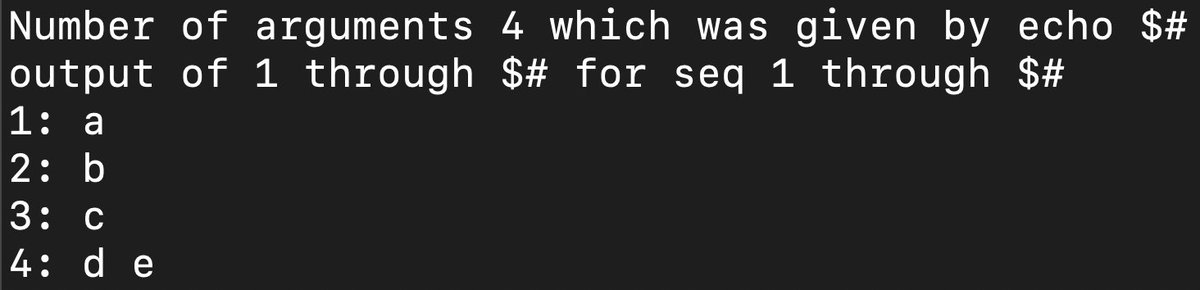

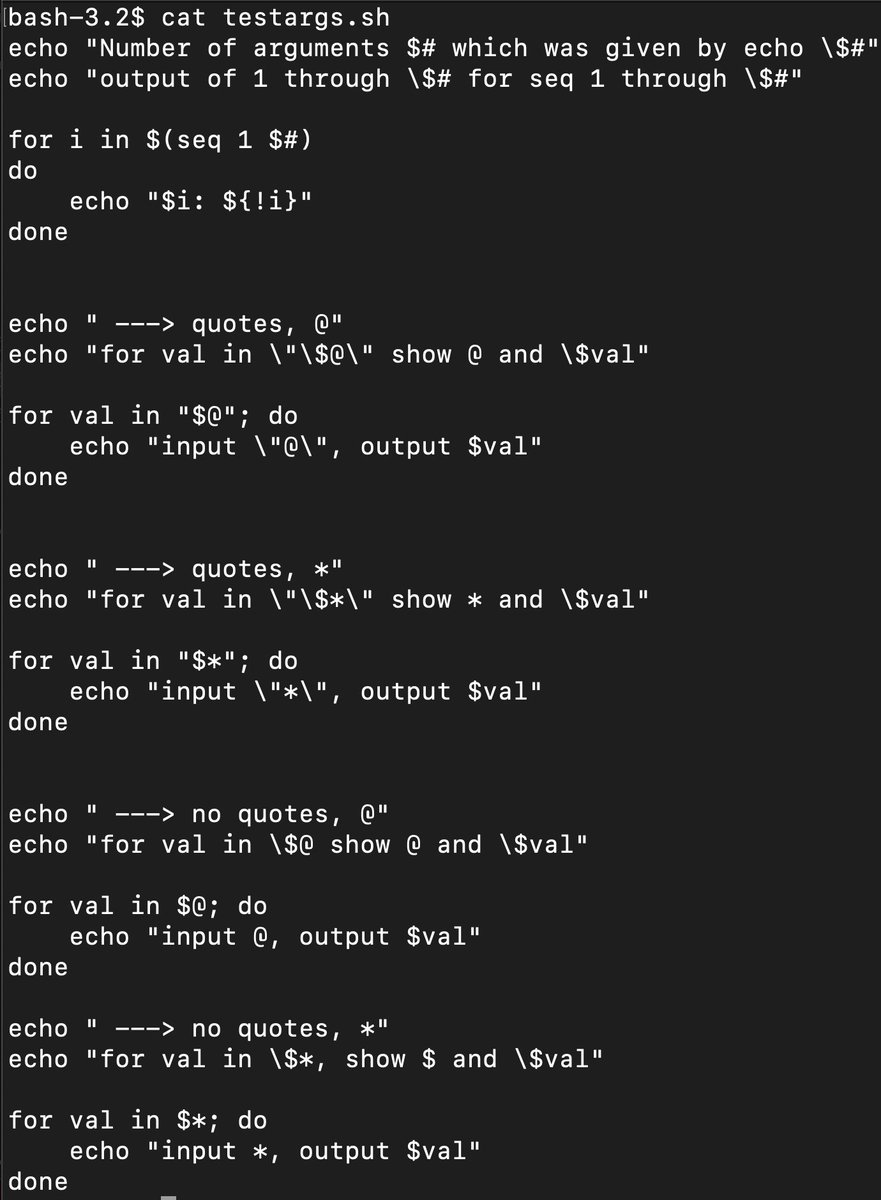
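A sketch of * as a filename wildcard, assuming bash; the file names are borrowed from this project's scripts just as sample names. The shell expands the pattern before the command runs, which is also why an unquoted * in text can turn into a list of files.

```bash
#!/usr/bin/env bash
# Sketch: glob expansion of *.
dir=$(mktemp -d)
touch "$dir/getimagelinks.sh" "$dir/imagedownload.sh" "$dir/notes.md"
cd "$dir" || exit 1
echo *.sh      # getimagelinks.sh imagedownload.sh (expanded by the shell)
echo "*.sh"    # *.sh (quoted, so no expansion)
echo *         # every file name in the directory
```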

$* vs $@ vs $# … if you run ./script.sh a b c ‘d e’ then “$@” keeps each argument separate, so ‘d e’ stays a single argument (4 in total), while “$*” joins everything into one long string (1 argument). Without quotes they are the same: both get split on spaces, giving 5 words. $# shows the number of args.

(continued) … note that “@” means “at” so it’s being used to designate specific, typed strings between ‘’ whereas “*” being the, “whatever” symbol is just throwing everything together as one. Taking away the quotes “” takes away their special powers.
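A sketch that counts what each form actually passes along, assuming bash; the helper functions are hypothetical. The unquoted version is deliberately left unquoted to show the word splitting.

```bash
#!/usr/bin/env bash
# Sketch: "$@" vs "$*" vs unquoted $@, measured with $#.
count_args()  { echo "$#"; }
quoted_at()   { count_args "$@"; }
quoted_star() { count_args "$*"; }
unquoted_at() { count_args $@; }    # unquoted on purpose: word splitting applies

echo "\"\$@\":     $(quoted_at a b c 'd e')"      # 4
echo "\"\$*\":     $(quoted_star a b c 'd e')"    # 1
echo "unquoted: $(unquoted_at a b c 'd e')"       # 5
```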

$? … shows the exit status of the previous command, pretty straightforward. So if you want to create an if statement in a script for example, which runs if the last thing was in error, you can use $?.

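$$ … shows the process ID of the current shell, i.e. of the script in which it appears. So if you just do, “echo $$” at the prompt, it shows the PID of your interactive shell, not of echo (echo is a shell builtin, so it doesn't even get its own process).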

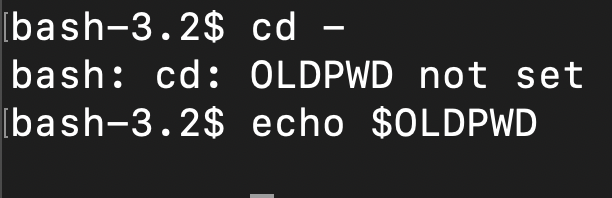

`-` vs `~+` … besides being minus, - can designate the previous working directory, OLDPWD (cd - takes you back there, and ~- expands to it), whereas ~+ expands to the current directory, PWD, and is equivalent to the pwd command.
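A sketch of the directory shortcuts, assuming bash and a system with /tmp; everything runs in a subshell so the directory changes don't leak out.

```bash
#!/usr/bin/env bash
# Sketch: OLDPWD, ~- and ~+.
(
  cd /tmp && cd /
  echo "$OLDPWD"      # /tmp
  echo ~-             # /tmp, same as $OLDPWD
  echo ~+             # /, same as $PWD
  cd - >/dev/null     # cd - jumps back to $OLDPWD
  pwd                 # /tmp
)
```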

So that basically covers the vast majority of symbols and special characters in bash. Bash is an interactive interface language, with a lot of pre-set settings running which are already in place when you use it. If you run commands from another location, it’s different.

If you’re using something like Python or Golang, different assumptions may be made in their various os or exec libraries about what settings are being used, and you can’t use the redirection and control operators native to bash.

So next I’ll go through some of the things I have learned about using the os package in Golang https://pkg.go.dev/os

to run what would be similar to bash scripts. I could also do similar exercises using Python.

https://pkg.go.dev/os#pkg-examples

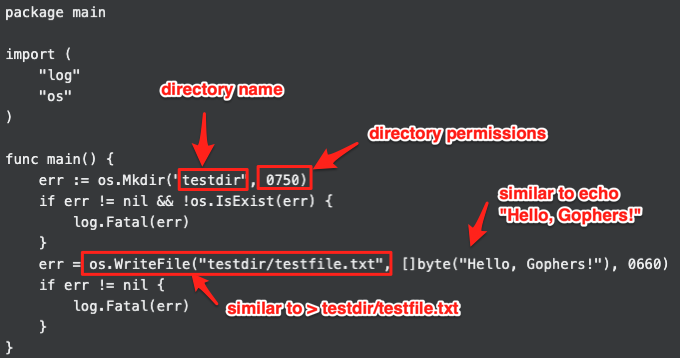

… The os package as a whole does several fundamental things that bash can do in terms of interfacing with a unix-like machine. For example, if you want to do mkdir, you could use Golang os’ Mkdir

golang os package (continued) … in the example shown, this would be the equivalent of running in bash mkdir testdir and then echo "Hello, Gophers!" > testdir/testfile.txt https://pkg.go.dev/os#example-Mkdir

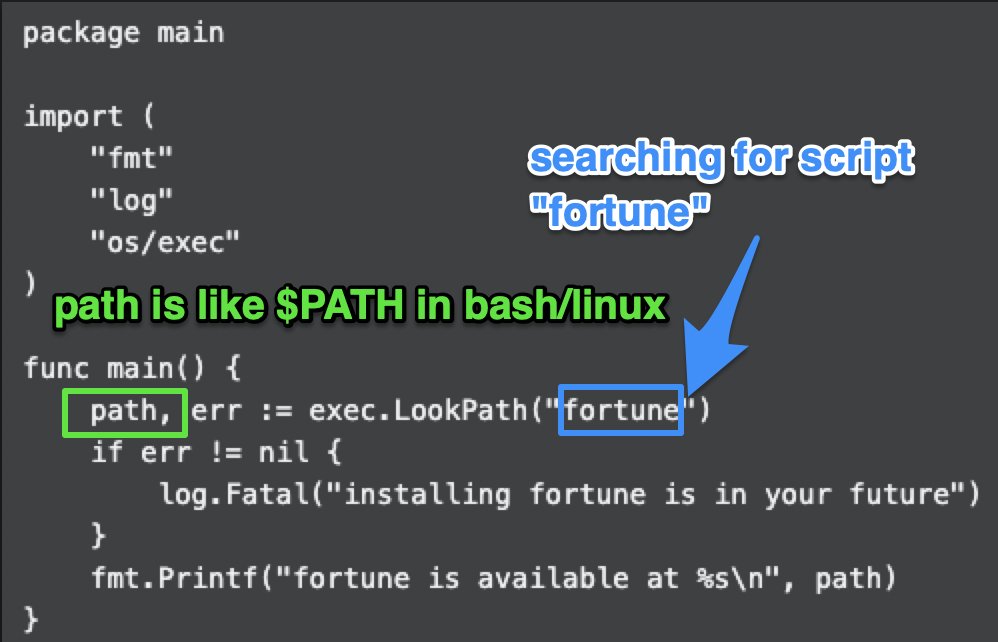

golang os/exec … a package that sits under os (separate from the os package itself), this is the way to actually execute external commands, including bash scripts (vs. manipulating the directory structure and environment variables). https://pkg.go.dev/os/exec

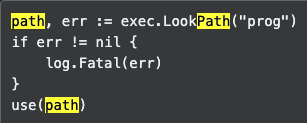

You can write your own bash scripts (or other language scripts) and then have exec.LookPath() check whether they can be found in the directories listed in your $PATH variable, much like which does. Once LookPath returns the full path, you can then use it.

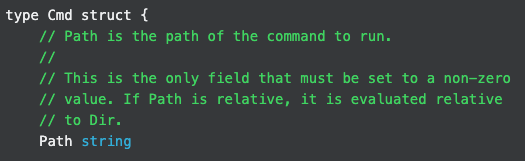

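When you build a command with exec.Command(), the Cmd struct's Path field (a string) holds the path to the program; if the name you pass contains no path separators, exec.Command itself uses LookPath to fill it in. https://pkg.go.dev/os/exec#Cmd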

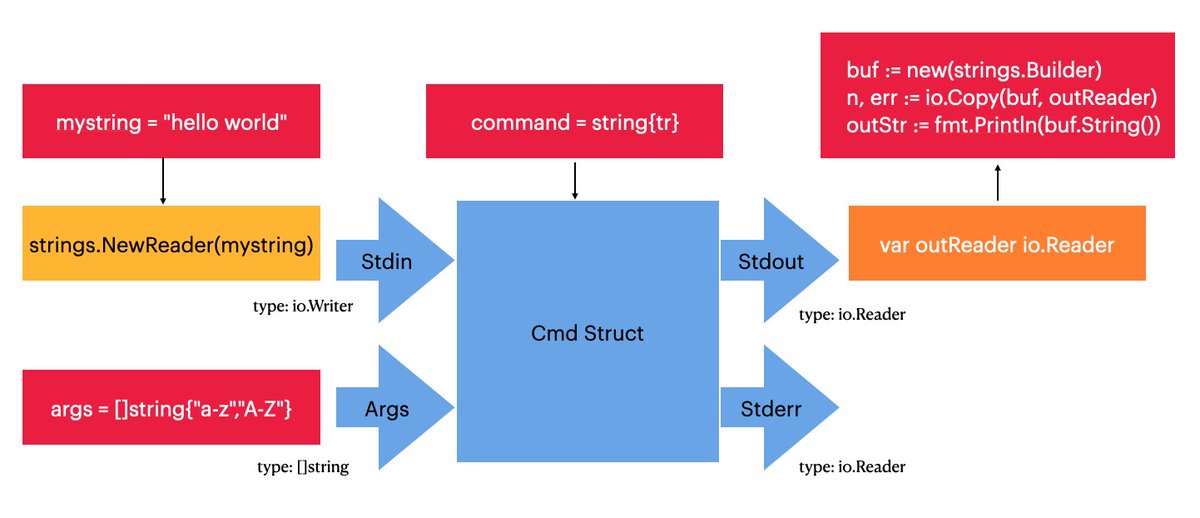

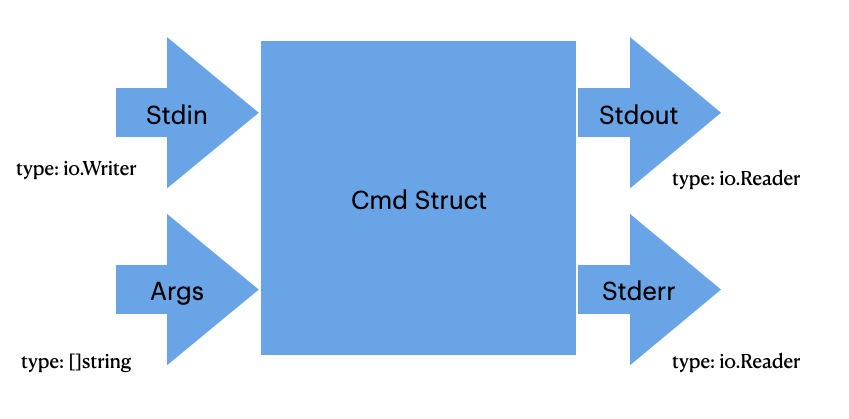

The Cmd struct represents an external command being prepared or run. It has Args, Stdin, Stdout and Stderr, just like in bash. Since Args is a []string (a slice of strings), you can, “build” your arguments dynamically. Stdin/Stdout/Stderr can be connected to different variables in Go.

So basically, while in bash everything is on the terminal, in Golang you can have inputs and outputs go to the terminal, or you can have them go into variables in Golang (which is like capturing a command's output into a shell variable with $(...) in bash).

Note that Stdin is of type io.Reader, while Stdout and Stderr are of type io.Writer https://pkg.go.dev/io#Writer

& https://pkg.go.dev/io#Reader

… the io package provides basic interfaces to, “I/O primitives”: general ways of reading and writing streams of bytes, whether they come from files on disk, in-memory buffers, pipes or network connections.

How does reading and writing have anything to do with Stdout/Stdin/Stderr? Well in unix-like systems, “everything is a file,” and that includes the standard inputs/outputs. io.Reader and io.Writer are interfaces: anything with a Read method (such as stdin) is an io.Reader, and anything with a Write method (such as stdout) is an io.Writer.

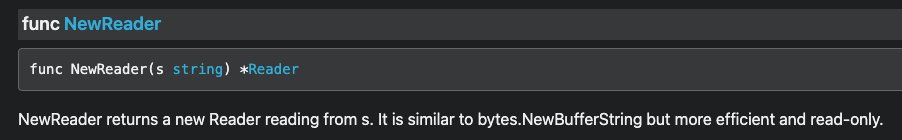

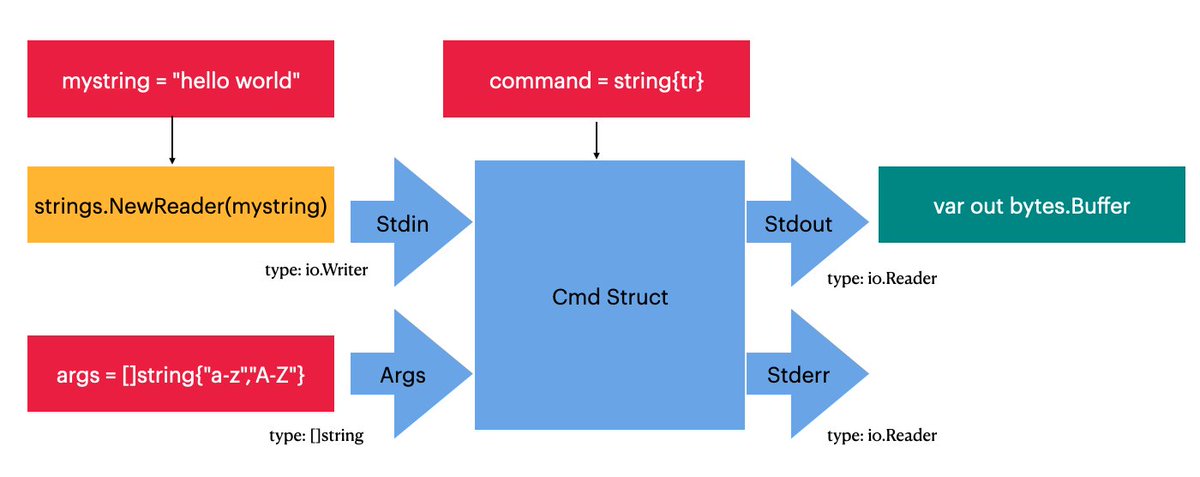

tr Example - use exec.Command() to create a Cmd struct, passing the command name and its arguments as strings (they end up in Cmd.Args, a []string). Then you set Stdin to an io.Reader created with strings.NewReader(), which turns the string “some input” into something that can be read from.

We fed, “some input” into the Stdin by setting Stdin to that io.Reader, generated by strings.NewReader() which takes in a string and outputs an io.Reader. Within exec.Command() we ran “tr” with args, “a-z” and “A-Z”, which will run tr on the stdin with those args.

So turning this into a diagram, we used strings and string lists (shown in red) as the command and arguments, and generated an input that stdin would understand with NewReader(). Stdout was set to a bytes.Buffer, which means the output is captured in memory rather than printed on the terminal; the program then prints the buffer's contents itself.

If you want the output as a Go string rather than sitting in the buffer, bytes.Buffer already has a String() method. Alternatively, you can create a strings.Builder, use io.Copy() to copy the buffer's contents into it, and then call its String() method to get a string you can store in a variable.